I have tidied up the code, and a simplified portion of it is shown below. Comments mark the places where the error occurs.

This is the code for the training section:

    os.environ['KMP_DUPLICATE_LIB_OK'] = 'TRUE'

    '''
    Due to space constraints, only the relevant key code for the training part is retained here.
    '''

    ac_train = AC_RPPG(video_length=video_length, image_size=img_size, source_file=ac_train_source_file,
                       base_dir=ac_base_dir, target_fps=target_fps, bvp_length=bvp_length)
    train_dataset = MergedSet([ac_train])

    # dataloader
    train_loader = DataLoader(train_dataset, batch_size, shuffle=True, num_workers=0)

    model = RPPGSFormer(num_frames=video_length * target_fps, signal_length=bvp_length)
    model = model.to(device)

    for epoch in range(epochs):
        train_eval(epoch)

    def train_eval(epoch):
        global eval_times, best_model, min_eval_loss
        epoch_loss = 0.
        start_time = time.time()
        for batch_id, (video, bvp, hr, fps, bvp_sampling_rate) in enumerate(train_loader):
            # to device
            video = video.float().to(device)
            bvp = bvp.float().to(device)
            # predict
            # The error is raised at this call; the error message is the one described in this question.
            rppg = model(video)

This is the code that defines the model structure:

    class RPPGSFormer(nn.Module):
        def __init__(self, num_frames=96, signal_length=96, pretrained=True, **kwargs):
            super().__init__()
            self.embed_dim = 192
            self.signal_length = signal_length
            self.vit = VisionTransformer(patch_size=16, embed_dim=192, depth=12, num_heads=3, mlp_ratio=4,
                                         qkv_bias=True, norm_layer=partial(nn.LayerNorm, eps=1e-6), **kwargs)
            if pretrained:
                path = r'E:\A_projects\Python\expTest\saved_models\deit_tiny_patch16_224-a1311bcf.pth'
                checkpoint = torch.load(path)
                self.vit.load_state_dict(checkpoint["model"])
            self.vit.head = nn.Identity()
            self.temporal_encoder = TemporalEncoder(clip_length=num_frames, embed_dim=self.embed_dim, n_layers=6)
            # Omit part of the code...

        def forward(self, x):
            '''
            x.shape == [B, C, T, H, W]
            '''
            B, C, T, H, W = x.shape
            x = x.permute(0, 2, 1, 3, 4)
            x = x.reshape(B * T, C, H, W)  # [B*T, 3, H, W]
            x = self.vit(x)  # [B*T, 192]
            features = self.temporal_encoder(x)
            rppg = self.head(features)
            return rppg


    def trunc_normal_(x, mean=0., std=1.):
        return x.normal_().fmod_(2).mul_(std).add_(mean)


    class TemporalEncoder(nn.Module):
        def __init__(self, clip_length=None, embed_dim=2048, n_layers=6):
            super(TemporalEncoder, self).__init__()
            self.clip_length = clip_length
            enc_layer = nn.TransformerEncoderLayer(d_model=embed_dim, nhead=8)

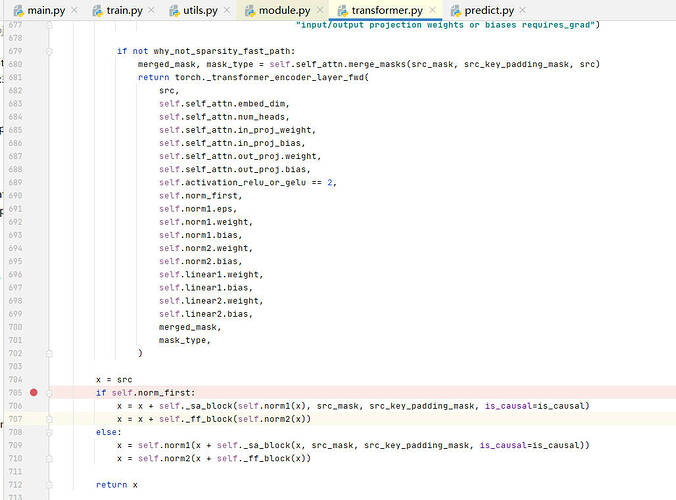

            # The error can be traced back to this point.
            self.transformer_enc = nn.TransformerEncoder(enc_layer, num_layers=n_layers, norm=nn.LayerNorm(embed_dim))
            # ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑ ↑
            self.cls_token = nn.Parameter(torch.zeros(1, 1, embed_dim))
            self.pos_embed = nn.Parameter(torch.zeros(1, clip_length + 1, embed_dim))
            with torch.no_grad():
                trunc_normal_(self.pos_embed, std=.02)
                trunc_normal_(self.cls_token, std=.02)
            self.apply(self._init_weights)

        def forward(self, x):
            batch_size = x.shape[0] // self.clip_length
            x = x.reshape((batch_size, self.clip_length, -1))
            cls_tokens = self.cls_token.expand(batch_size, -1, -1)
            x = torch.cat((cls_tokens, x), dim=1)  # [B, T+1, D]
            x = x + self.pos_embed
            x.transpose_(1, 0)
            # The error can be traced back to this point.
            token = self.transformer_enc(x)
            return token[0]
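
To make the shape flow through the two `forward` methods easier to follow, here is a minimal sketch that traces the shapes with plain integers instead of tensors. It does not claim to identify the error. The function name `trace_shapes` and the example sizes (batch of 2, 96 frames, 224x224 images) are assumptions for illustration only; the real values of `video_length`, `target_fps`, and `img_size` are not shown above. The two checks reflect constraints visible in the code: the reshape in `TemporalEncoder.forward` only lines up when `B*T` is a multiple of `clip_length`, and multi-head attention requires `d_model` to be divisible by `nhead`.

```python
def trace_shapes(batch, channels, frames, height, width,
                 clip_length, embed_dim=192, nhead=8):
    """Trace shapes through RPPGSFormer.forward and TemporalEncoder.forward.

    Hypothetical helper: it uses integers only and does not run the model.
    """
    steps = []

    # RPPGSFormer.forward
    steps.append(("input", (batch, channels, frames, height, width)))
    steps.append(("permute(0, 2, 1, 3, 4)", (batch, frames, channels, height, width)))
    steps.append(("reshape", (batch * frames, channels, height, width)))
    # With vit.head replaced by nn.Identity, the source comment gives [B*T, 192].
    steps.append(("vit", (batch * frames, embed_dim)))

    # TemporalEncoder.forward
    if (batch * frames) % clip_length != 0:
        raise ValueError(
            f"B*T = {batch * frames} is not a multiple of clip_length = {clip_length}; "
            "the reshape to [B, clip_length, D] would not line up"
        )
    enc_batch = (batch * frames) // clip_length
    steps.append(("reshape", (enc_batch, clip_length, embed_dim)))
    # The cls token adds one position; pos_embed [1, clip_length + 1, D] broadcasts over it.
    steps.append(("cat cls_token", (enc_batch, clip_length + 1, embed_dim)))
    # nn.TransformerEncoder defaults to sequence-first input, hence the transpose.
    steps.append(("transpose_(1, 0)", (clip_length + 1, enc_batch, embed_dim)))
    if embed_dim % nhead != 0:
        raise ValueError(f"embed_dim = {embed_dim} is not divisible by nhead = {nhead}")
    # token[0] selects the cls position for every sample.
    steps.append(("token[0]", (enc_batch, embed_dim)))
    return steps


# Example sizes are assumed, not taken from the original training script.
for name, shape in trace_shapes(2, 3, 96, 224, 224, clip_length=96):
    print(f"{name:24s} {shape}")
```

Comparing these expected shapes with `x.shape` printed at the same points in the real model is one way to narrow down where the shapes first diverge.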