Hi all, I am having an issue with the division of my training set in batches using torch.utils.data.DataLoader.

I reported the error message at the end of the thread and attached it as an image.

I am working with a torchvision.datasets.DatasetFolder of numpy arrays of dimension (128,128,3) to train a Generative Adversarial Network with progressive growing training starting from 4x4 resolution. These are the functions I am using to create the dataset and the loader

def npy_loader(path):

sample = np.load(path, allow_pickle=True)

return sample

def get_loader(image_size):

transform = transforms.Compose(

[

CustomNormalization(), # normalization of the inputs

NumpyToTensor3D(), # output tensor is in the shape (C x H x W) so it works with transforms.Resize()

transforms.Resize((image_size, image_size)),

]

)

batch_size = 64

dataset = datasets.DatasetFolder(root=config.DATASET, loader=npy_loader, extensions=‘.npy’, transform=transform)

loader = DataLoader(

dataset,

batch_size=batch_size,

num_workers=4,

pin_memory=True,

)

return loader, dataset

The error happens in the first epoch when arriving at the batch where there is the stack problem because of a different shape in an example. The loop through the batches is defined in the following way:

loop = tqdm(loader, leave=True)

for batch_idx, (real, _) in enumerate(loop):

As you can see there are 2 custom-made transformation classes, I tried running the code without them and I got the same error, so apparently, they are not the problem. I also tried running the code without the transforms.Resize() class and I still got the error.

I also tried to change the batch size to 32 and 128 but still got the error and always at the same example of the training set (n. 403756). I have checked the training set several times and the original shape of each file is correct.

If I set the batch size to 1 the error does not happen anymore, so I am thinking that probably there is some mistake in the definition of the DataLoader so that the stacking of the examples in batches fails because of dimension.

Also, in this case, I am running the training with approximately 1.600.000 examples in the training set but if I reduce the training set to a smaller sample (around 70.000 examples) the training is performed well and I don’t get the error. Could the dataset dimension be a problem? It does not seem possible to me.

Has anyone else experienced a similar problem? Do you have any suggestions?

Thanks in advance for your help

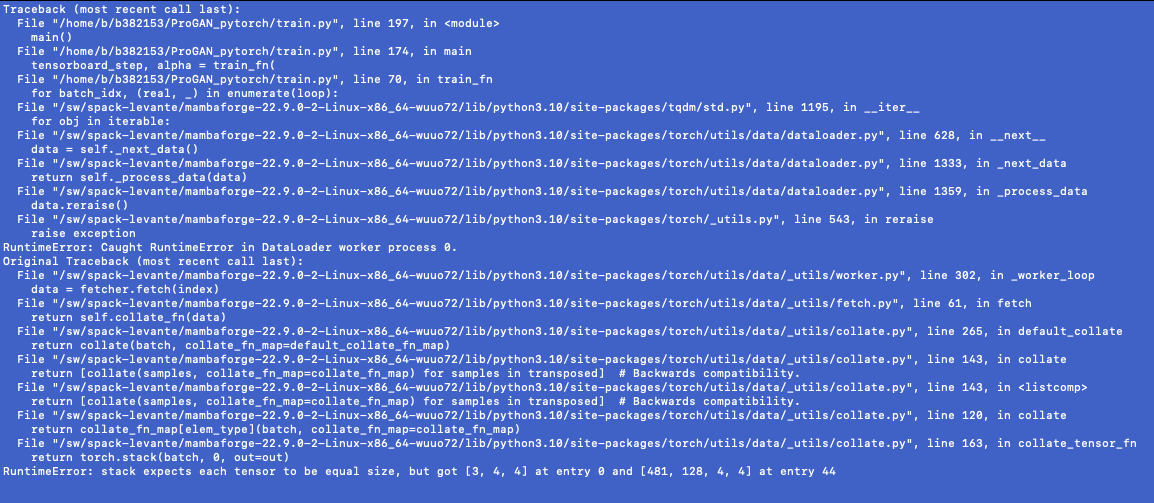

Traceback (most recent call last):

File “/home/b/b382153/PrOGAN_pytorch/train.py”, line 197, in

main ()

File " /home/b/b382153/ProGAN_pytorch/train.py", line 174, in main

tensorboard_step, alpha = train_fn(

File “/home/b/b382153/PrOGAN_pytorch/train.py”, line 70, in train_fn

for batch_idx, (real, -) in enumerate (loop):

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-×86_64-wuuo72/lib/python3.10/site-packages/tqdm/std.py”,line1195,in iter. for obj in iterable:

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-x86_64-wuuo72/lib/python3.10/site-packages/torch/utils/data/dataloader.py”,line628,in

-_next

data = self._next_data()

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-×86_64-wuuo72/lib/python3.10/site-packages/torch/utils/data/dataloader.py”,line1333,in_next_data return self._process_data(data)

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-×86_64-wuuo72/lib/python3.10/site-packages/torch/utils/data/dataloader.py”,line1359,in_process_data data.reraise ()

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-×86_64-wuuo72/lib/python3.10/site-packages/torch/_utils.py”,line543,inreraise raise exception

RuntimeError: Caught RuntimeError in DataLoader worker process 0.

Original Traceback (most recent call last):

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-×86_64-wuuo72/1ib/python3.10/site-packages/torch/utils/data/_utils/worker.py”,line302,in_worker_100p

data = fetcher. fetch (index)

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-×86_64-wuuo72/lib/python3.10/site-packages/torch/utils/data/_utils/fetch.py”,line61,infetch return self.collate_fn (data)

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-×86_64-wuuo72/lib/python3.10/site-packages/torch/utils/data/_utils/collate.py”,line265,indefault_collate return collate (batch, collate_fn_map=default_collate_fn_map)

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-×86_64-wuuo72/lib/python3.10/site-packages/torch/utils/data/_utils/collate.py”,line143,incollate return [collate(samples, collate_fn_map=collate_fn_map) for samples in transposed] # Backwards compatibility.

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-×86_64-wuuo72/lib/python3.10/site-packages/torch/utils/data/_utils/collate•py”,line143,in

return [collate(samples, collate_fn_map=collate_fn_map) for samples in transposed] # Backwards compatibility.

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-×86_64-wuuo72/lib/python3.10/site-packages/torch/utils/data/_utils/collate.py”,line120,incollate return collate_fn_map[elem_type](batch, collate_fn_map=collate_fn_map)

File “/sw/spack-levante/mambaforge-22.9.0-2-Linux-x86_64-wuuo72/lib/python3.10/site-packages/torch/utils/data/_utils/collate.py”,line163,incollate_tensor_fn return torch. stack(batch, 0, out=out)

RuntimeError: stack expects each tensor to be equal size, but got [3, 4, 4] at entry 0 and [481, 128, 4, 4] at entry 44