Hello,

I have used nn.DataPrallel to use 2 gpus while training.

This is how my model code looks (roughly)

class Model(nn.Module):

def forward(self, inputs):

logger.info(f"shape of unique values in inputs = {torch.unique(inputs).shape}: {inputs.device}")

logger.info(f"mean of inputs = {torch.mean(inputs)}: {inputs.device}")

# some calculation using conv and linear layers

# after this I get two variables loss and per

# both are size of 1. loss is output of loss function (mean squared error)

logger.info(f"per: {per}: {per.device}")

logger.info(f"loss: {loss}: {loss.device}")

return loss, per

My training loop looks like this

model = Model()

model = nn.DataParallel(model, device_ids=[0, 1])

model.to("cuda:0")

optimizer = Adam(model.parameters())

for data in dataloader:

data = data.to("cuda:0")

loss, per = model(data)

logger.info(f"per tensor: {per}")

logger.info(f"loss tensor: {loss}")

# compute the average loss

x = loss.mean()

# do backward

x.backward()

optimizer.step()

So I ran this code in 2 different servers (both have same configuration and versions. The details are given below).

The logs for 1st iteration in 1st and 2nd server is given below (# are some of my comments)

Server 1 LOG

shape of unique values in inputs = torch.size([366633886]): cuda:0

mean of inputs = 0.00390: cuda:0

per: 1.0: cuda:0

loss: 0.18566: cuda:0

shape of unique values in inputs = torch.size([1]): cuda:1

mean of inputs = 0: cuda:1 # observe that for cuda:1 num(unique) = 1 and mean = 0

per: 1.0: cuda:1

loss: 0: cuda:1

per tensor: tensor([1., 0.], device='cuda:0') # the output has to be [1.0, 1.0] I guess

loss tensor: tensor([0.1857, 0.0000], device='cuda:0')

Server 2 LOG

shape of unique values in inputs = torch.size([35358244]): cuda:0

mean of inputs = 0.0002569: cuda:0

per: 1.0: cuda:0

loss: 0.1797265: cuda:0

shape of unique values in inputs = torch.size([35318931]): cuda:1

mean of inputs = 0.0002706056: cuda:1

per: 1.0: cuda:1

loss: 0.179730564: cuda:1

per tensor: tensor([1., 1.], device='cuda:0')

loss tensor: tensor([0.1797, 0.1797], device='cuda:0')

As you can see in server 1, I am receving inputs as all zero in device = cuda:1 (because number of unique value is 1 and mean is 0) but everything seems working fine in server 2

SETUP

Both servers (operation system is Windows Server 16) uses the same data and same code. The version of torch and torchvision for both servers is (both were installed by downloading the wheel file and then using pip)

torch : 1.8.1+cu111-cp36-cp36m-win_amd64.whl

torchvision: 0.9.1+cu111-cp36-cp36m-win_amd64

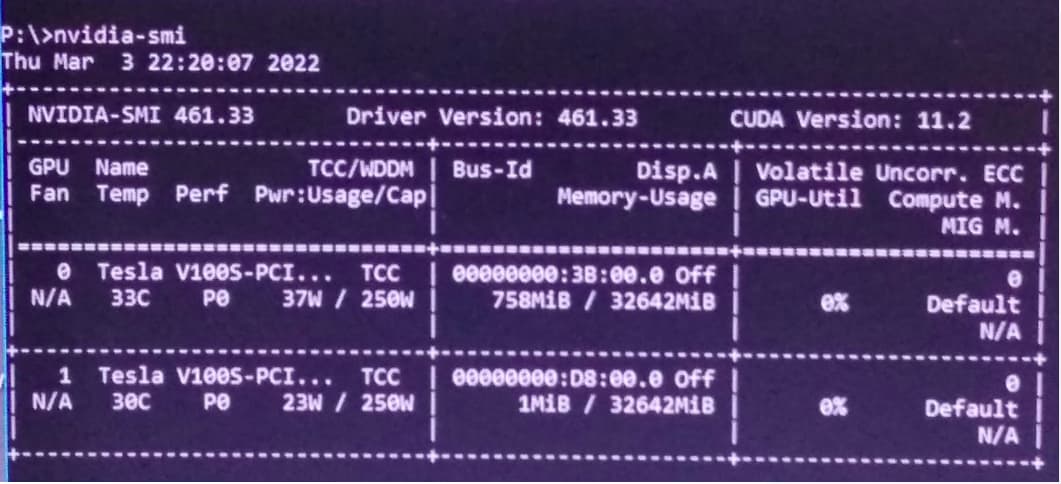

The GPU details are given below (both are same for servers)

The only difference between two servers is that is in server 1, three versions of CUDA (10.0, 10.1 and 11.2) are installed but in server 2 only 10.2 CUDA version is installed.

But according to these posts (1 and 2) I think it doesn’t matter what versions of CUDA are installed in the system as we are using wheel file to install torch which will come with pre-build CUDA and CUDNN.

Can I know why in server 1 the output is wrong ?

Note : Apology for a very long post as I did work on this sometime and wanted to share my thoughts as well. Also I understand the forward computation and training loop is minimal as I dont know if I can share the organization code here. If you need any more details about the server configurations, environment variables in system I can provide them as well

Thanks !!