Hi,

I am trying to incorporate a conv1d() layer into a network I am using for classification.

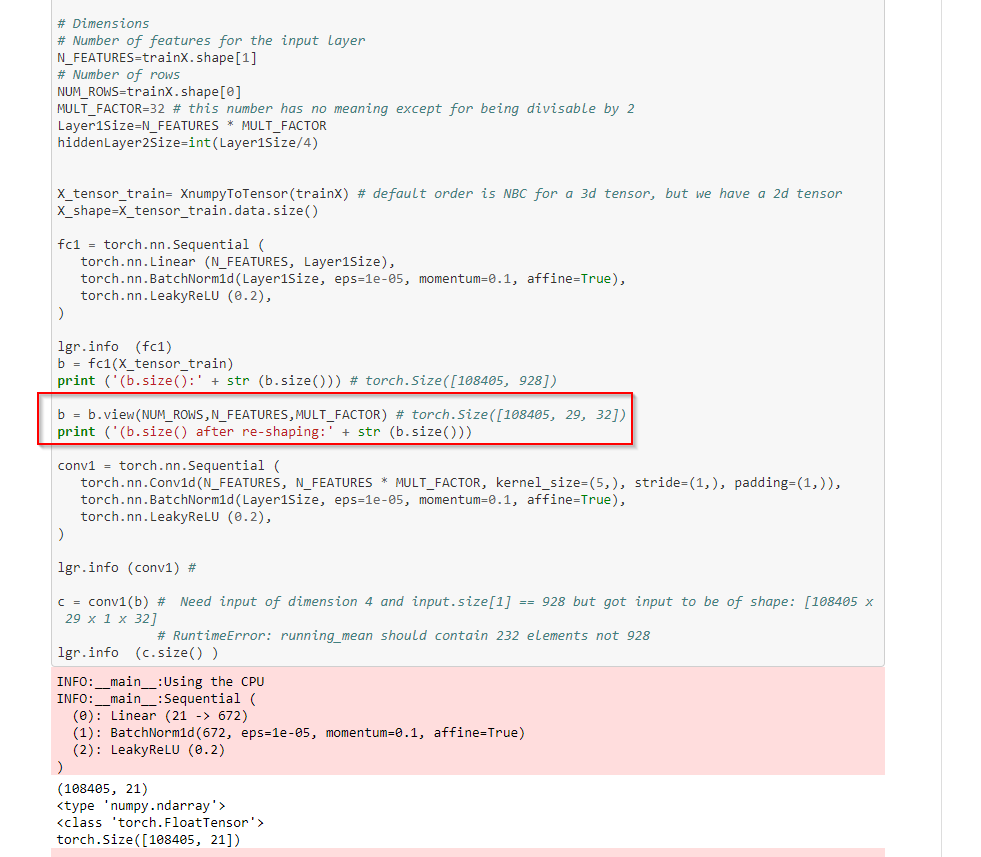

When I use two separate layers and reshape between them with `view`, it works:

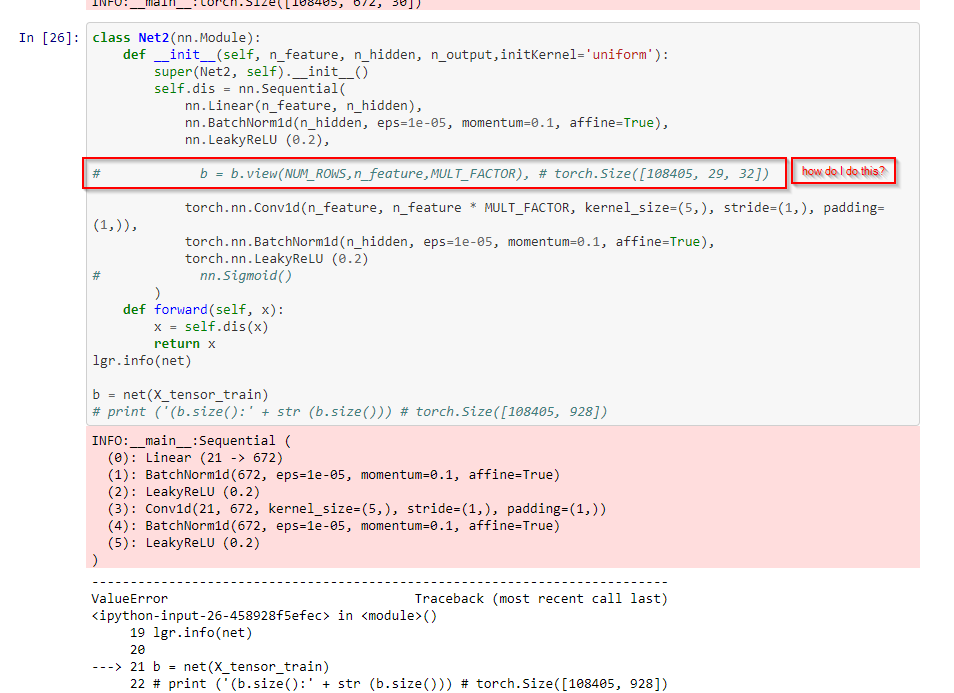

However, if I combine the layers into a single Net (see below), I am not sure how to reshape inside the `Sequential` container itself. Maybe a transpose? I am not sure how to do it.

Full code is available here:

https://github.com/QuantScientist/Deep-Learning-Boot-Camp/blob/master/day%2002%20PyTORCH%20and%20PyCUDA/PyTorch/31-PyTorch-using-CONV1D-on-one-dimensional-data.ipynb

Many thanks

EDIT:

Fixed like so:

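    import torch
    import torch.nn as nn

    # MULT_FACTOR, NUM_ROWS, N_FEATURES, Layer1Size, lgr and X_tensor_train
    # are assumed to be defined earlier, as in the linked notebook.

    class Net2(nn.Module):
        def __init__(self, n_feature, n_hidden, n_output):
            super(Net2, self).__init__()
            self.n_feature = n_feature
            # Fully connected block: (rows, n_feature) -> (rows, n_hidden)
            self.l1 = nn.Sequential(
                torch.nn.Linear(n_feature, n_hidden),
                torch.nn.BatchNorm1d(n_hidden, eps=1e-05, momentum=0.1, affine=True),
                torch.nn.LeakyReLU(0.2)
            )
            # Convolutional block: expects input of shape (rows, n_feature, length)
            self.c1 = nn.Sequential(
                torch.nn.Conv1d(n_feature, n_feature * MULT_FACTOR, kernel_size=(5,), stride=(1,), padding=(1,)),
                # n_hidden must equal n_feature * MULT_FACTOR for this layer and the view in forward() to work
                torch.nn.BatchNorm1d(n_hidden, eps=1e-05, momentum=0.1, affine=True),
                torch.nn.LeakyReLU(0.2)
            )

        def forward(self, x):
            print('x.size(): ' + str(x.size()))
            x = self.l1(x)
            print('x.size(): ' + str(x.size()))
            # for CNN: reshape (rows, n_hidden) to (rows, channels, length)
            x = x.view(NUM_ROWS, self.n_feature, MULT_FACTOR)
            print('x.size(): ' + str(x.size()))
            x = self.c1(x)
            print('x.size(): ' + str(x.size()))
            x = torch.sigmoid(x)  # F.sigmoid is deprecated in newer PyTorch releases
            return x

    net = Net2(n_feature=N_FEATURES, n_hidden=Layer1Size, n_output=1)  # define the network
    lgr.info(net)
    b = net(X_tensor_train)
    print('b.size(): ' + str(b.size()))  # torch.Size([108405, 928])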
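
For anyone who wants the reshape to live inside the `Sequential` itself rather than in `forward`, one possible approach is a small wrapper module around `view`. This is only a sketch: the `Reshape` class is a hypothetical helper (not part of PyTorch or the notebook), and the sizes below are made up for illustration.

```python
import torch
import torch.nn as nn


class Reshape(nn.Module):
    """Hypothetical helper: reshape everything after the batch dimension."""

    def __init__(self, *shape):
        super().__init__()
        self.shape = shape

    def forward(self, x):
        # Keep the batch dimension, reshape the rest to the stored shape.
        return x.view(x.size(0), *self.shape)


# Illustrative sizes only; the notebook defines its own N_FEATURES and MULT_FACTOR.
n_feature, mult_factor = 4, 8
n_hidden = n_feature * mult_factor

net = nn.Sequential(
    nn.Linear(n_feature, n_hidden),
    nn.BatchNorm1d(n_hidden),
    nn.LeakyReLU(0.2),
    Reshape(n_feature, mult_factor),  # (batch, n_hidden) -> (batch, n_feature, mult_factor)
    nn.Conv1d(n_feature, n_hidden, kernel_size=5, stride=1, padding=1),
    nn.BatchNorm1d(n_hidden),
    nn.LeakyReLU(0.2),
)

x = torch.randn(16, n_feature)
out = net(x)
print(out.size())  # torch.Size([16, 32, 6])
```

The last dimension is 6 because a kernel of 5 with padding 1 shortens a length-8 input by 2. Because the wrapper uses `x.size(0)` instead of a fixed row count, the same network should accept any batch size. Recent PyTorch releases also provide `nn.Unflatten`, which may be usable in place of the custom module.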

Reference: https://gist.github.com/spro/c87cc706625b8a54e604fb1024106556
