Hello,

I am confused by an error I am getting while implementing an object-detection dataset and dataloader. The dataset returns an image (as a tensor) and a dictionary containing a tensor of bounding boxes and a tensor of labels. I wrote the following code (inspired by the "TorchVision Object Detection Finetuning Tutorial" from the PyTorch Tutorials 1.10.0+cu102 documentation):

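```python
import os

import numpy as np
import torch
from PIL import Image
from torch.utils.data import Dataset
from torchvision.transforms import Normalize, ToTensor


class RCNNDataset(Dataset):

    def __init__(self, root_dir: str,
                 transforms=Normalize(mean=(0.92,), std=(0.15,)),
                 image_size: tuple = (1008, 888)):
        self.root_dir = root_dir
        self.label_dir = os.path.join(self.root_dir, "labels")
        self.image_dir = os.path.join(self.root_dir, "images")
        # Sorting both listings keeps image i paired with label file i.
        self.images = sorted(os.listdir(self.image_dir))
        self.labels = sorted(os.listdir(self.label_dir))
        self.to_tensor = ToTensor()
        self.transforms = transforms
        self.image_size = image_size  # PIL order: (width, height)

    def __len__(self):
        # Number of label files; len(self.label_dir) would be the length of the path string.
        return len(self.labels)

    def __getitem__(self, idx):
        image = Image.open(os.path.join(self.image_dir, self.images[idx]))
        if image.size != self.image_size:
            image = image.resize(size=self.image_size)
        image = self.to_tensor(image)  # (C, H, W) float tensor in [0, 1]
        if self.transforms is not None:
            image = self.transforms(image)

        # One row per object: box coordinates in columns 0-3, class label in the last column.
        # ndmin=2 keeps a file with a single object 2-D so the slicing still works.
        label_array = np.loadtxt(os.path.join(self.label_dir, self.labels[idx]), ndmin=2)
        # N = number of objects in this image, so these shapes vary from image to image.
        targets = {"labels": torch.from_numpy(label_array[:, -1]).long(),   # shape (N,)
                   "boxes": torch.from_numpy(label_array[:, 0:4]).float()}  # shape (N, 4)
        return image, targets
```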
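To see why the per-image target tensors differ in size, here is a minimal sketch of just the label-parsing step of `__getitem__`. It assumes (hypothetically) that each label file holds one object per line as `x1 y1 x2 y2 class`, which is what the `[:, 0:4]` and `[:, -1]` slicing implies; two in-memory strings stand in for real label files, and `parse_label_file` is a hypothetical helper name.

```python
import io

import numpy as np
import torch


def parse_label_file(f):
    # Hypothetical helper mirroring the label parsing in __getitem__.
    # ndmin=2 keeps a single-object file as shape (1, 5) instead of (5,).
    label_array = np.loadtxt(f, ndmin=2)
    return {
        "labels": torch.from_numpy(label_array[:, -1]).long(),   # shape (N,)
        "boxes": torch.from_numpy(label_array[:, 0:4]).float(),  # shape (N, 4)
    }


# Hypothetical label files: the first image has 3 objects, the second has 1.
file_a = io.StringIO("10 20 50 60 1\n15 25 55 65 2\n30 40 70 80 1\n")
file_b = io.StringIO("5 5 25 25 3\n")

target_a = parse_label_file(file_a)
target_b = parse_label_file(file_b)
print(target_a["labels"].shape, target_b["labels"].shape)
```

Because `N` changes from image to image, there is no single shape shared by every sample's `labels` and `boxes`.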

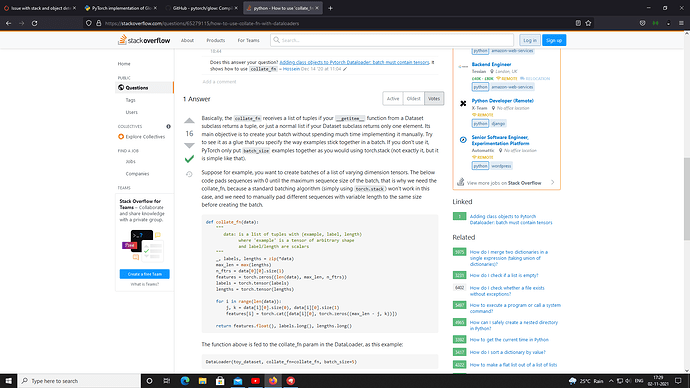

When I try to iterate through the dataloader (i.e. doing `images, targets = next(iter(dataloader))`), I get the following error:

`RuntimeError: stack expects each tensor to be equal size, but got [47] at entry 0 and [46] at entry 1`
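The same error can be reproduced without any image or label files. This sketch builds two hypothetical samples shaped like the dataset's output (small dummy images, with 47 and 46 objects) and loads them with a `DataLoader` that has no `collate_fn`, so the default collate function stacks each field of the batch.

```python
import torch
from torch.utils.data import DataLoader

# Two hypothetical samples shaped like RCNNDataset's output: a small dummy
# 1-channel image plus a target dict with one row per object (47 vs 46 objects).
samples = [
    (torch.zeros(1, 8, 8), {"labels": torch.ones(47, dtype=torch.int64),
                            "boxes": torch.zeros(47, 4)}),
    (torch.zeros(1, 8, 8), {"labels": torch.ones(46, dtype=torch.int64),
                            "boxes": torch.zeros(46, 4)}),
]

# A plain list works as a map-style dataset. Without a collate_fn, the
# DataLoader's default collate calls torch.stack on every tensor field.
loader = DataLoader(samples, batch_size=2)

error_message = None
try:
    next(iter(loader))
except RuntimeError as exc:
    error_message = str(exc)

print(error_message)
```

The images stack fine because they all have the same size; it is the `labels` tensors of lengths 47 and 46 that cannot be stacked.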

As I understand it, `torch.stack` expects every tensor in the batch to have the same shape, which would mean every image must contain the same number of objects to detect? I am very confused by this.
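That reading matches what the default collate function does: it stacks each field across the batch. A common workaround, and the one used by `utils.collate_fn` in the torchvision detection reference scripts that the tutorial imports, is a `collate_fn` that regroups the batch into tuples instead of stacking. A minimal sketch with hypothetical dummy samples:

```python
import torch
from torch.utils.data import DataLoader


def collate_fn(batch):
    # batch is a list of (image, target) pairs. zip(*batch) regroups it into
    # (all images, all targets) without stacking anything, so each target dict
    # keeps its own number of objects.
    return tuple(zip(*batch))


# Hypothetical dummy samples with 47 and 46 objects, as in the error message.
samples = [
    (torch.zeros(1, 8, 8), {"labels": torch.ones(n, dtype=torch.int64),
                            "boxes": torch.zeros(n, 4)})
    for n in (47, 46)
]

loader = DataLoader(samples, batch_size=2, collate_fn=collate_fn)
images, targets = next(iter(loader))
print(len(images), [t["labels"].shape[0] for t in targets])  # prints: 2 [47, 46]
```

The images come back as a tuple of tensors and the targets as a tuple of dicts. Torchvision's detection models take a list of image tensors and a list of target dicts, so `list(images)` and `list(targets)` can be passed on directly.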

I would be grateful to anybody who could help me understand this.