Hi all

This is my first post; I am a novice getting my feet wet with vision applications.

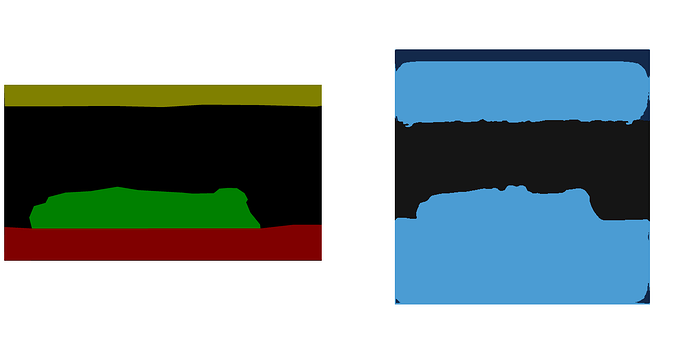

I am trying to do multi-class image segmentation using U-Net. I have a well-controlled scene with 4 classes, including background. My images are very large and vary in size, so as part of my preprocessing transforms I shrink them down to 512x512 squares. (I think this is where things go wrong.) I preserve the aspect ratio, so I pad the shorter side.
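A minimal sketch of the shrink-and-pad ("letterbox") step for a mask, assuming the mask is already a 2-D NumPy array of integer class IDs (0 to 3 here). It uses nearest-neighbour sampling, so every output pixel is a copy of some input pixel and no new values can appear. The helper name `letterbox_mask` and the default `fill=0` (pad with background) are my own choices; padding with 255 also works if the loss is told to ignore that value, for example `torch.nn.CrossEntropyLoss(ignore_index=255)`.

```python
import numpy as np

def letterbox_mask(mask: np.ndarray, size: int = 512, fill: int = 0) -> np.ndarray:
    """Fit a 2-D label mask into a size x size square, keeping the aspect ratio.

    Hypothetical helper: nearest-neighbour resize, then centre the result and
    pad the shorter side with `fill`.
    """
    h, w = mask.shape
    scale = size / max(h, w)
    new_h = max(1, round(h * scale))
    new_w = max(1, round(w * scale))
    # Nearest-neighbour sampling: map each output pixel centre back to one source pixel.
    rows = np.minimum(((np.arange(new_h) + 0.5) * h / new_h).astype(np.int64), h - 1)
    cols = np.minimum(((np.arange(new_w) + 0.5) * w / new_w).astype(np.int64), w - 1)
    resized = mask[rows[:, None], cols[None, :]]
    # Start from a square filled with the padding value and paste the resized mask in the middle.
    out = np.full((size, size), fill, dtype=mask.dtype)
    top = (size - new_h) // 2
    left = (size - new_w) // 2
    out[top:top + new_h, left:left + new_w] = resized
    return out

# Usage: a wide 300x600 mask with one class-2 rectangle.
mask = np.zeros((300, 600), dtype=np.uint8)
mask[100:200, 200:400] = 2
out = letterbox_mask(mask, size=512)
print(out.shape, np.unique(out))  # (512, 512) [0 2]
```

Because the resize never blends pixels, the padded mask still contains only the class IDs that were in the original.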

I label the images in labelme and convert the JSON files to PNG masks. I load the masks in PyTorch with Pillow, using `convert("L")`, even though the masks are RGB with a different color for each class.
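`convert("L")` turns each colour into a single grey level (a luminance value), not into a class index. One alternative, sketched here under assumptions, is to keep the mask in RGB and map each colour to its class ID explicitly. The `PALETTE` colours are assumed (VOC-style colours; check them against your own PNGs), and `rgb_to_class_ids` is a hypothetical helper name. If the exported PNG happens to be a palette-mode ("P") image, `np.array(Image.open(path))` without any `convert` should already give the palette indices directly.

```python
import numpy as np

# Assumed colour -> class table; replace with the colours in your own masks.
PALETTE = {
    (0, 0, 0): 0,        # background
    (128, 0, 0): 1,
    (0, 128, 0): 2,
    (128, 128, 0): 3,
}

def rgb_to_class_ids(rgb: np.ndarray, palette: dict = PALETTE) -> np.ndarray:
    """Map an (H, W, 3) uint8 colour mask to an (H, W) int64 array of class IDs."""
    ids = np.full(rgb.shape[:2], -1, dtype=np.int64)
    for color, class_id in palette.items():
        # Pixels whose three channels all equal this colour get this class ID.
        match = np.all(rgb == np.array(color, dtype=rgb.dtype), axis=-1)
        ids[match] = class_id
    if (ids < 0).any():
        raise ValueError("mask contains colours that are not in the palette")
    return ids
```

With Pillow this would be called as `rgb_to_class_ids(np.array(Image.open(path).convert("RGB")))`. Raising on unknown colours catches anti-aliased edges or a wrong palette early, instead of silently mislabelling pixels.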

I suspect the small float values in my label tensors are why the model predicts everything as background, but I am not sure how to handle the masks properly.
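A one-dimensional stand-in shows where in-between values come from. Linear (and bilinear) resizing averages neighbouring pixels, which suits photos but turns the boundary between class 0 and class 3 into a value like 1.5 that is not a class at all; nearest-neighbour resizing only ever copies existing values. This uses `np.interp` rather than torchvision, so the exact sampling positions differ from `transforms.Resize`, but the blending effect is the same. In torchvision, masks are usually resized with `interpolation=InterpolationMode.NEAREST`.

```python
import numpy as np

row = np.array([0, 0, 3, 3], dtype=np.float64)   # one row of a mask: class 0, then class 3
src = np.arange(len(row))                          # source pixel positions 0..3
dst = np.linspace(0, len(row) - 1, 7)              # 7 output positions (an upsample)

linear = np.interp(dst, src, row)                  # blends neighbours, like bilinear resizing
nearest = row[np.rint(dst).astype(np.int64)]       # copies the closest source pixel

print(linear.tolist())   # [0.0, 0.0, 0.0, 1.5, 3.0, 3.0, 3.0]
print(nearest.tolist())  # [0.0, 0.0, 0.0, 3.0, 3.0, 3.0, 3.0]
```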

How should I be doing this? Any help or advice would be much appreciated.

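My mask transform is the following:

```python
import torchvision.transforms as transforms

IMG_SIZE = 512  # target side length (512x512)

def pad_mask_with_aspect(x):
    # x is a (C, H, W) float tensor produced by ToTensor(); assumes w >= h
    c, h, w = x.shape
    # height after scaling the width to IMG_SIZE, preserving the aspect ratio
    h_new = int(IMG_SIZE * h / w)
    # rows of padding added to both the top and the bottom
    pad_amount = int((IMG_SIZE - h_new) // 2)
    square_image = transforms.Compose([
        # Resize uses bilinear interpolation by default
        transforms.Resize(size=(h_new, IMG_SIZE)),
        # a 2-tuple pads (left/right, top/bottom)
        transforms.Pad((0, pad_amount), fill=255, padding_mode='constant'),
        # final resize absorbs the missing row when IMG_SIZE - h_new is odd
        transforms.Resize(size=(IMG_SIZE, IMG_SIZE)),
    ])
    return square_image(x)

mask_transform = transforms.Compose([
    transforms.ToTensor(),  # converts to float; uint8 input is divided by 255
    transforms.Lambda(lambda x: pad_mask_with_aspect(x)),
])
```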
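Two details of this pipeline are worth checking. `ToTensor()` converts a uint8 image to floats and divides by 255, so a grey level g becomes g/255: for example, 1.49019614e-01 and 2.94117659e-01 in the list of unique values are approximately 38/255 and 75/255. `Pad(fill=255)` runs after that scaling, so 255 is inserted as a raw value, which is likely where the 2.55e+02 entry comes from. A minimal sketch of an alternative, assuming the masks stay in `convert("L")` form: keep them as integers and map each grey level to a class with a lookup table. The grey levels in `GREY_TO_CLASS` are hypothetical (what the assumed VOC-style colours would become under Pillow's luminance conversion); print `np.unique(np.array(img))` on a few of your own masks to get the real ones.

```python
import numpy as np

# Hypothetical grey levels -> class IDs; replace with the values np.unique reports for your masks.
GREY_TO_CLASS = {0: 0, 38: 1, 75: 2, 113: 3}
IGNORE = 255  # any grey level not in the table becomes an "ignore" label

def grey_to_class_ids(grey: np.ndarray, table: dict = GREY_TO_CLASS) -> np.ndarray:
    """Map a uint8 grey-level mask to int64 class IDs with a 256-entry lookup table."""
    lut = np.full(256, IGNORE, dtype=np.int64)
    for level, class_id in table.items():
        lut[level] = class_id
    return lut[grey]  # fancy indexing applies the table to every pixel at once

grey = np.array([[0, 38], [75, 113]], dtype=np.uint8)
print(grey_to_class_ids(grey).tolist())  # [[0, 1], [2, 3]]
```

The resulting int64 array can be resized with nearest-neighbour sampling and wrapped with `torch.from_numpy(...)`, skipping `ToTensor()` for the mask; `CrossEntropyLoss` expects integer (long) class targets of shape (N, H, W) rather than scaled floats.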

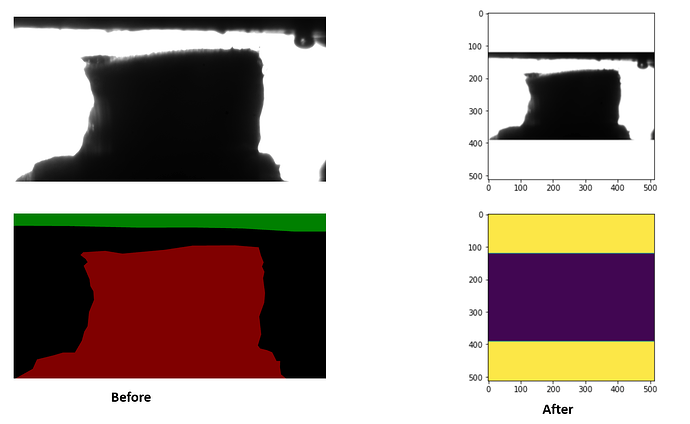

Before

Listing the unique values of the mask tensor yields a very long list:

```
[0.00000000e+00 1.61301869e-05 3.39231308e-04 3.39586608e-04
 5.01137169e-04 1.69777311e-03 4.07475512e-03 4.12932783e-03
 5.09344367e-03 6.40318636e-03 6.40318682e-03 8.17018375e-03
 8.17018468e-03 1.09038642e-02 1.22726383e-02 1.22867087e-02
 1.24152694e-02 1.35340076e-02 1.45362820e-02 1.80453453e-02
 2.06966624e-02 2.06966642e-02 2.07012109e-02 2.08105519e-02
 2.16495525e-02 2.45127957e-02 2.45127976e-02 2.61451844e-02
 2.67280024e-02 2.79592983e-02 2.85708942e-02 2.86376886e-02
 2.89735086e-02 2.89735105e-02 2.89780572e-02 2.91824918e-02
 3.04137859e-02 3.17726135e-02 3.31801474e-02 3.32595184e-02
 3.42347920e-02 3.42982486e-02 3.45858186e-02 3.51697020e-02
 3.72549035e-02 3.80758382e-02 3.85971367e-02 4.07008678e-02
 4.24938761e-02 4.36097533e-02 4.37975042e-02 4.47742157e-02
 4.48223054e-02 4.49678339e-02 4.51908112e-02 4.59865220e-02
 4.71507385e-02 5.07869944e-02 5.11114448e-02 5.27576469e-02
 5.30091301e-02 5.35032935e-02 5.38131446e-02 5.53002469e-02
 5.64644635e-02 5.71890436e-02 5.71890473e-02 5.87928928e-02
 5.87928966e-02 5.99571094e-02 6.02331720e-02 6.11213259e-02
 6.11213297e-02 6.20854422e-02 6.20899908e-02 6.51400909e-02
 6.98983520e-02 7.03622922e-02 7.15992674e-02 7.17801824e-02
 7.26206154e-02 7.27634802e-02 7.27634877e-02 7.39277005e-02
 7.43655786e-02 7.86391348e-02 7.86482319e-02 8.00074935e-02
 8.07626992e-02 8.23494643e-02 8.32414255e-02 8.50510970e-02
 8.55698586e-02 8.61051381e-02 8.66542757e-02 8.69159847e-02
 8.69250745e-02 8.69250819e-02 8.98697823e-02 8.98697898e-02
 9.12535116e-02 9.28289369e-02 9.52064693e-02 9.52561796e-02
 9.60477963e-02 9.60478038e-02 9.72120166e-02 9.95404422e-02
 1.00704663e-01 1.03483319e-01 1.03487864e-01 1.06210150e-01
 1.06210157e-01 1.08773753e-01 1.10975251e-01 1.11764707e-01
 1.12346813e-01 1.13511041e-01 1.13782331e-01 1.14675246e-01
 1.15299284e-01 1.17408089e-01 1.20041549e-01 1.20041557e-01
 1.22809075e-01 1.26317412e-01 1.27481624e-01 1.28322944e-01
 1.28322959e-01 1.28645837e-01 1.29592612e-01 1.29631117e-01
 1.30825192e-01 1.35193124e-01 1.36485681e-01 1.36599794e-01
 1.38196826e-01 1.38525963e-01 1.38888642e-01 1.38888657e-01
 1.40287995e-01 1.40369043e-01 1.41452208e-01 1.41452223e-01
 1.42616421e-01 1.44184917e-01 1.44881189e-01 1.45017907e-01
 1.45964295e-01 1.46287143e-01 1.49019599e-01 1.49019614e-01
 1.49019629e-01 1.52260914e-01 1.87907502e-01 1.87907517e-01
 2.18003228e-01 2.20588237e-01 2.20588252e-01 2.36928612e-01
 2.36928627e-01 2.69609332e-01 2.69609362e-01 2.93064505e-01
 2.94117630e-01 2.94117659e-01 2.94117689e-01 2.55000000e+02]
```
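Rather than printing every unique value, a short check can confirm that masks hold only valid class IDs and report how much of the data each class covers. This is a sketch with a hypothetical `class_fractions` helper, assuming masks are NumPy arrays of class IDs with 255 as an ignore value. If background dominates the pixel counts, a model can score well by predicting background everywhere; `torch.nn.CrossEntropyLoss` accepts a per-class `weight` tensor, which is one common way to counter that.

```python
import numpy as np

def class_fractions(masks, num_classes=4, ignore_index=255):
    """Return the fraction of labelled (non-ignored) pixels belonging to each class."""
    counts = np.zeros(num_classes, dtype=np.int64)
    for m in masks:
        values = np.asarray(m).ravel()
        values = values[values != ignore_index]
        # Fractional values (e.g. from ToTensor scaling or bilinear resizing) are not class IDs.
        if not np.all(values == np.round(values)):
            raise ValueError("mask contains non-integer values")
        if values.size and (values.min() < 0 or values.max() >= num_classes):
            raise ValueError("mask contains values outside 0..num_classes-1")
        counts += np.bincount(values.astype(np.int64), minlength=num_classes)
    total = counts.sum()
    return counts / total if total else counts.astype(np.float64)

# Usage: two small masks; 255 marks padding and is ignored.
masks = [np.array([[0, 0, 1], [255, 2, 3]], dtype=np.uint8), np.zeros((2, 2), dtype=np.uint8)]
print(class_fractions(masks))  # background is 6 of the 9 labelled pixels
```

Run on a float mask like the one listed above, this raises immediately, which points at the preprocessing rather than the model.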