Hi folks, I’m trying to understand some aspects of reproducibility, and it seems I may have misunderstood something.

I have a toy multilayer perceptron, and every time I train and test it I get a different test accuracy.

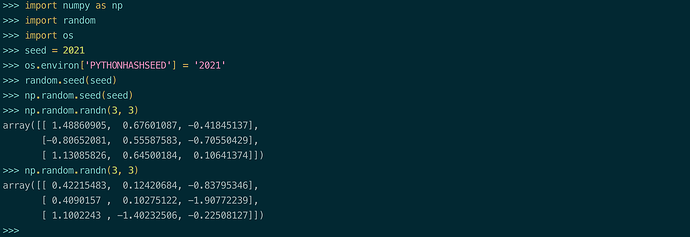

I’ve seeded all possible sources of randomness I could think of:

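```python
import os
import random

import numpy as np
import torch

seed = 2021

# Python-level sources of randomness.
# PYTHONHASHSEED only affects hashing if it is set before the interpreter starts.
os.environ['PYTHONHASHSEED'] = '2021'
random.seed(seed)
np.random.seed(seed)

# PyTorch CPU and CUDA generators.
torch.manual_seed(seed)
torch.cuda.manual_seed(seed)
torch.cuda.manual_seed_all(seed)

# cuDNN: disable the autotuner and request deterministic kernels.
torch.backends.cudnn.benchmark = False
torch.backends.cudnn.deterministic = True

# Deprecated in PyTorch 1.8 in favour of torch.use_deterministic_algorithms(True).
torch.set_deterministic(True)
```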

But I still observe this discrepancy in accuracy every time I re-train and test the model.

I suspect this is because the weights are randomly initialised every time the model is constructed, hence the different accuracy results.
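One way to test this hypothesis is to build the model twice under the same seed and compare the parameters directly. The snippet below is only a minimal sketch: `make_mlp` and `same_weights` are hypothetical helpers, and the layer sizes are placeholders rather than my actual network. It runs on CPU and assumes the seed is set before the layers are constructed.

```python
import torch
import torch.nn as nn


def make_mlp(seed: int) -> nn.Sequential:
    """Build a small MLP right after re-seeding the global torch RNG."""
    torch.manual_seed(seed)  # must run before the layers are constructed
    return nn.Sequential(
        nn.Linear(8, 16),   # placeholder sizes, not the real model
        nn.ReLU(),
        nn.Linear(16, 2),
    )


def same_weights(a: nn.Module, b: nn.Module) -> bool:
    """Return True if both models hold bit-identical parameters."""
    return all(
        torch.equal(p, q) for p, q in zip(a.parameters(), b.parameters())
    )


model_a = make_mlp(2021)
model_b = make_mlp(2021)
model_c = make_mlp(2022)

print(same_weights(model_a, model_b))  # same seed
print(same_weights(model_a, model_c))  # different seed
```

If the first comparison reports identical weights, the initialisation itself is reproducible and the variation in accuracy probably comes from somewhere else in the training loop, or from the seeding running after the model has already been built.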

It seems like none of the above seeds fixes the weights of the network for reproducibility. Am I understanding this wrong, or did I miss something crucial regarding reproducibility? (And yes, I’ve read the docs about reproducibility.)