Hi, I want to train a ResNet on my own dataset, so I use transfer learning and replace the fully connected layer to change the number of classes. My dataset has about 13k images and is very different from the original dataset ResNet was trained on (ImageNet).

This is the code I run to use a pre-trained ResNet as a feature extractor on my own dataset:

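```python
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
from torch.utils.data import DataLoader

n_epoch = 300
batchsize = 32
lr = 1e-4
num_classes = 5

cuda = torch.cuda.is_available()
device = torch.device('cuda' if cuda else 'cpu')

# ImageNet-pretrained ResNet-34 with all weights frozen
model = torchvision.models.resnet34(pretrained=True)
for param in model.parameters():
    param.requires_grad = False

# Replace the classifier head; the new layer is trainable by default
num_ftrs = model.fc.in_features
model.fc = nn.Linear(num_ftrs, num_classes)
model.to(device)

criterion = nn.CrossEntropyLoss()
# Frozen parameters receive no gradients, so Adam only updates model.fc
optimizer = optim.Adam(model.parameters(), lr=lr, weight_decay=1e-4, betas=(0.5, 0.999))

# LoadDataset, dir, transform and n_cpu are defined elsewhere (not shown)
train_dataset = LoadDataset(dir, transform=transform)
train_dataloader = DataLoader(train_dataset, batch_size=batchsize, shuffle=True, num_workers=n_cpu)

val_dataset = LoadDataset(dir, transform=transform, split='val')
val_dataloader = DataLoader(val_dataset, batch_size=batchsize, shuffle=False, num_workers=n_cpu)

dataloader = {'train': train_dataloader, 'val': val_dataloader}

# Per-epoch history, filled in by train_and_evaluate
acc_train, loss_train, acc_val, loss_val = [], [], [], []

train_and_evaluate(model, optimizer, criterion, dataloader, device, n_epoch, lr)
```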

Training and evaluation code:

```python
import torch


def train_and_evaluate(model, optimizer, criterion, dataloader, device, num_epoch=3, lr=0.001):
    # acc_train, loss_train, acc_val and loss_val are module-level lists
    for epoch in range(num_epoch):
        print('Epoch {}/{} | lr = {}'.format(epoch + 1, num_epoch, lr))
        # lr_scheduler.step()

        for phase in ['train', 'val']:
            if phase == 'train':
                model.train()
            else:
                model.eval()

            # Fresh metrics for each phase (RunningMetric is defined elsewhere)
            running_loss = RunningMetric()
            running_acc = RunningMetric()

            for lateral, medial, target, file_name in dataloader[phase]:
                lateral, medial, target = lateral.to(device), medial.to(device), target.to(device)
                optimizer.zero_grad()

                with torch.set_grad_enabled(phase == 'train'):
                    lateral_output, medial_output = model(lateral), model(medial)
                    _, lateral_preds = torch.max(lateral_output, 1)
                    _, medial_preds = torch.max(medial_output, 1)
                    acc_l = torch.sum(lateral_preds == target).float()
                    acc_m = torch.sum(medial_preds == target).float()

                    # Score each view against the same 5-class target. Concatenating
                    # both outputs into a [batch, 10] tensor would make this a 10-class
                    # problem in which the medial logits are never the correct class.
                    loss = (criterion(lateral_output, target) + criterion(medial_output, target)) / 2

                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                batch_size = lateral.size(0)
                running_loss.update(loss.item() * batch_size, batch_size)
                running_acc.update(acc_l + acc_m, batch_size * 2)

            if phase == 'train':
                acc_train.append(round(running_acc().item(), 4))
                loss_train.append(round(running_loss(), 4))
            else:
                acc_val.append(round(running_acc().item(), 4))
                loss_val.append(round(running_loss(), 4))

        print("Train | Acc = {} - Loss = {}".format(acc_train[-1], loss_train[-1]))
        print("Val | Acc = {} - Loss = {}".format(acc_val[-1], loss_val[-1]))

    return model
```
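`RunningMetric` is not defined in the snippets above. A minimal sketch, assuming it simply accumulates a sum and a sample count (which matches how `update(value, n)` and the `running_acc()` call are used), could look like this:

```python
class RunningMetric:
    """Accumulates a weighted sum and a sample count; calling it returns the mean."""

    def __init__(self):
        self.total = 0.0  # sum of the values passed to update()
        self.count = 0    # number of samples those values cover

    def update(self, value, count):
        self.total += value
        self.count += count

    def __call__(self):
        return self.total / float(self.count)


# Usage mirroring the training loop: the loss is a per-sample mean, so it is
# multiplied by the batch size before being accumulated.
running_loss = RunningMetric()
running_loss.update(0.5 * 32, 32)
running_loss.update(0.25 * 32, 32)
print(running_loss())  # 0.375
```

If `value` is a tensor (as with `acc_l + acc_m`), the result is also a tensor, which is why the loop calls `.item()` on the accuracy.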

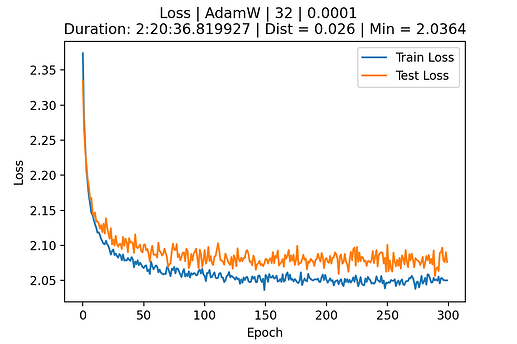

I trained the model, but the loss and the accuracy stopped improving (see the plot above).
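One thing worth checking when the loss plateaus is what the criterion actually receives. The sketch below uses random logits as stand-ins for `lateral_output` and `medial_output` (illustrative values, not results from my data) to show that `torch.cat([lateral_output, medial_output], 1)` produces a 10-column tensor, so `nn.CrossEntropyLoss` treats it as a 10-class problem in which the medial columns are never the target:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch, num_classes = 4, 5
criterion = nn.CrossEntropyLoss()

# Random stand-ins for the two model outputs
lateral_output = torch.randn(batch, num_classes)
medial_output = torch.randn(batch, num_classes)
target = torch.tensor([0, 3, 1, 4])

# Concatenating along dim 1 yields 10 columns; targets 0..4 only ever
# point at lateral columns, so medial columns 5..9 are always "wrong"
concat = torch.cat([lateral_output, medial_output], 1)
print(concat.shape)  # torch.Size([4, 10])
loss_concat = criterion(concat, target)

# Alternative: score each view separately against the same 5-class target
loss_per_view = (criterion(lateral_output, target) + criterion(medial_output, target)) / 2
print(loss_concat.item(), loss_per_view.item())

# Gradient check: with the concatenated loss every medial logit gets a positive
# gradient, so gradient descent lowers all of them, including the true class
medial = medial_output.clone().requires_grad_(True)
criterion(torch.cat([lateral_output, medial], 1), target).backward()
print((medial.grad > 0).all().item())  # True
```

Averaging the two per-view losses keeps each view scored as the 5-class problem that the accuracy computation (`lateral_preds == target`, `medial_preds == target`) already assumes.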

How can I improve my results?

Maybe transfer learning from ResNet isn't working here, or should I also train earlier layers of the model?

Any advice is welcome. Thanks for the help.