```python
import numpy as np
import torch
from torch.utils.data import SubsetRandomSampler
from torchvision import datasets, transforms

# Crop a random 224x224 patch, convert to a tensor and scale each channel to [-1, 1]
transform = transforms.Compose([
    transforms.RandomCrop(224),
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])

batch_size = 20

train_data = datasets.ImageFolder(root="/data/dog_images/train", transform=transform)
test_data = datasets.ImageFolder(root="/data/dog_images/test", transform=transform)
valid_data = datasets.ImageFolder(root="/data/dog_images/valid", transform=transform)

# Index arrays covering every sample in each split
train_list = np.arange(0, len(train_data))
print(train_list)
test_list = np.arange(0, len(test_data))
valid_list = np.arange(0, len(valid_data))

# Each sampler yields its indices in a new random order every epoch
train_sampler = SubsetRandomSampler(train_list)
valid_sampler = SubsetRandomSampler(valid_list)
test_sampler = SubsetRandomSampler(test_list)

train_loader = torch.utils.data.DataLoader(dataset=train_data, batch_size=batch_size, sampler=train_sampler)
valid_loader = torch.utils.data.DataLoader(dataset=valid_data, batch_size=batch_size, sampler=valid_sampler)
test_loader = torch.utils.data.DataLoader(dataset=test_data, batch_size=batch_size, sampler=test_sampler)
```

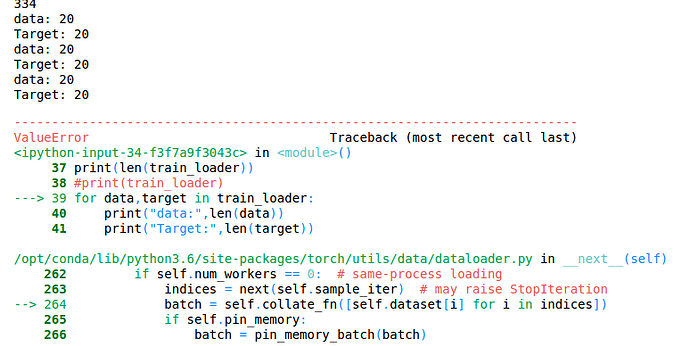

```python
print(len(train_loader))  # 334

for data, target in train_loader:
    print("data:", len(data))
    print("Target:", len(target))
```

Output (error message):

```
ValueError: empty range for randrange() (0,-3, -3)
```
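This message comes from Python's `random` module. One plausible source is the offset step of a random crop: a 224-pixel crop inside an image of height `h` needs a top offset in `0..h-224`, which is an empty range whenever `h < 224`. As far as I know, older torchvision releases of `RandomCrop` chose that offset with `random.randint(0, h - th)`, and `randint(0, -4)` fails exactly as `randrange(0, -3)`. The sketch below mimics that calculation in plain Python; `crop_offsets` and `resize_shorter_side` are hypothetical helpers for illustration, not torchvision's API. The second helper approximates the usual workaround of scaling the shorter side up before cropping.

```python
import random


def crop_offsets(height, width, crop=224, rng=random):
    """Pick a random top-left corner for a crop x crop patch.

    Illustrative re-creation of a random crop's offset logic (not torchvision code).
    random.randint(0, n) calls randrange(0, n + 1), so a negative n
    (image smaller than the crop) raises a ValueError about an empty range.
    """
    top = rng.randint(0, height - crop)
    left = rng.randint(0, width - crop)
    return top, left


def resize_shorter_side(height, width, target=256):
    """Scale (height, width) so the shorter side equals target, keeping aspect ratio.

    Hypothetical helper approximating what a Resize(256)-style step does first.
    """
    if height <= width:
        return target, int(target * width / height)
    return int(target * height / width), target


if __name__ == "__main__":
    # A 220-pixel-tall image cannot hold a 224-pixel crop.
    try:
        crop_offsets(220, 300)
    except ValueError as err:
        print("ValueError:", err)

    # Resizing the shorter side to 256 first leaves room for the crop.
    h, w = resize_shorter_side(220, 300)
    print((h, w), crop_offsets(h, w))
```

In torchvision terms, the analogous options would be `transforms.Resize(256)` placed before `transforms.RandomCrop(224)`, `transforms.RandomResizedCrop(224)`, or `transforms.RandomCrop(224, pad_if_needed=True)`; which fits best depends on whether small images should be rescaled or padded.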

Note: This time the loop iterated over `train_loader` twice and then raised the error, but the number of iterations before the error appears varies every time.

Where am I going wrong?
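The varying failure point described in the note is consistent with a few undersized images: `SubsetRandomSampler` draws the indices in a new random order each epoch, so the batch that happens to contain a too-small image changes from run to run. A minimal pure-Python sketch of that effect, using made-up image sizes (hypothetical data, not taken from the dog dataset) and a hypothetical helper `first_failing_batch`:

```python
import random


def first_failing_batch(sizes, crop=224, batch_size=20, seed=None):
    """Return the index of the first batch containing an image smaller than crop.

    sizes: list of (height, width) pairs (hypothetical example data).
    The indices are shuffled, like a random sampler, before being cut into batches.
    Returns None if every image is large enough for the crop.
    """
    order = list(range(len(sizes)))
    random.Random(seed).shuffle(order)
    for position, index in enumerate(order):
        height, width = sizes[index]
        if height < crop or width < crop:
            return position // batch_size
    return None


if __name__ == "__main__":
    # 999 large images plus a single 220-pixel-tall one.
    sizes = [(500, 375)] * 999 + [(220, 300)]
    for seed in range(3):
        print("seed", seed, "-> fails in batch", first_failing_batch(sizes, seed=seed))
```

Each seed stands in for a different run, so the batch at which the crop fails moves around even though the data never changes.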