Hi, I'm training a CNN model on my new, second local computer (i.e., I have two computers with the same software and hardware environment), but the iteration time is much longer than on the first computer (at least three times longer).

I also found that the interval between bursts of CPU and GPU (CUDA) usage is much longer on the second computer, and I don't know why this happens. Does this have anything to do with the `num_workers` or `pin_memory` settings of PyTorch's `DataLoader`, or is it caused by the learning rate? On the second computer I changed the learning rate from 1e-4 to 1.25e-5 and the batch size from 32 to 4; nothing else was changed.

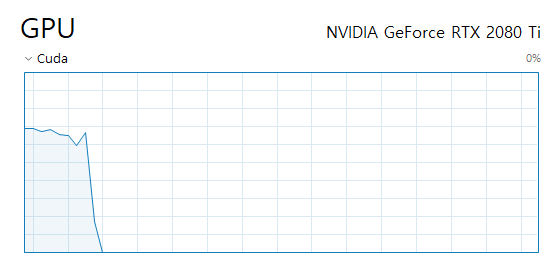

(Here, once the GPU has been used, it stays idle for a long time afterwards, like this…)

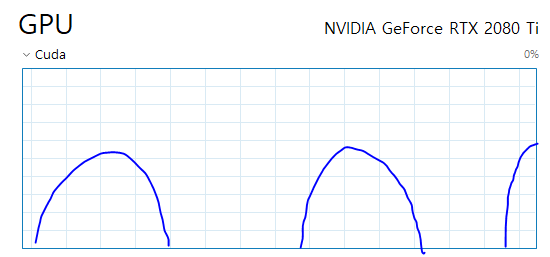

I mean, on my first computer, CPU usage stayed almost constant and the GPU usage graph spiked quite frequently (image 2). On the second computer, as shown in image 1, the GPU usage graph spikes once and then does not appear again for a long time.

Does anyone know how to solve this, or is this a common situation?

(Additionally, the training set has 2,600 images and the validation set has 399 images.)
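For reference, here is a small sketch of how the number of iterations per epoch changes between the two settings. It assumes the `DataLoader` uses its default `drop_last=False`, so the last partial batch is kept. `iterations_per_epoch` is just a hypothetical helper for this calculation, not part of PyTorch.

```python
import math


def iterations_per_epoch(num_samples: int, batch_size: int, drop_last: bool = False) -> int:
    """Hypothetical helper: number of batches a DataLoader yields per epoch."""
    # With drop_last=False (the DataLoader default) the last partial batch is kept,
    # so the count rounds up; with drop_last=True the partial batch is dropped.
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


if __name__ == "__main__":
    # Dataset sizes from this post: 2600 training images, 399 validation images.
    for name, n in [("train", 2600), ("val", 399)]:
        for bs in (32, 4):
            print(f"{name}: batch_size={bs} -> {iterations_per_epoch(n, bs)} iterations per epoch")
```

With batch size 4, one pass over the 2,600 training images takes 650 iterations instead of 82, so an epoch runs about 8 times as many iterations as with batch size 32.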

I'm sorry for these stupid questions… and thanks for all your responses!