Hello

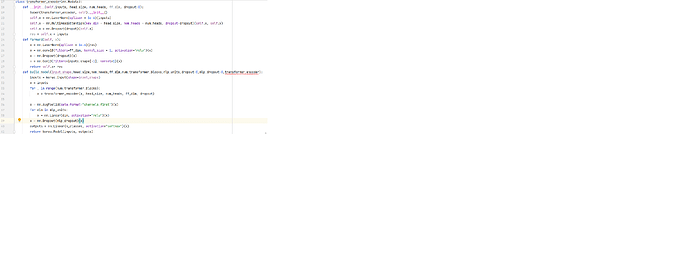

The Keras code is given below:

import os

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

def transformer_encoder(inputs, head_size, num_heads, ff_dim, dropout=0):
    # Normalization and Attention
    x = layers.LayerNormalization(epsilon=1e-6)(inputs)
    x = layers.MultiHeadAttention(
        key_dim=head_size, num_heads=num_heads, dropout=dropout
    )(x, x)
    x = layers.Dropout(dropout)(x)
    res = x + inputs

    # Feed Forward Part
    x = layers.LayerNormalization(epsilon=1e-6)(res)
    x = layers.Conv1D(filters=ff_dim, kernel_size=1, activation="relu")(x)
    x = layers.Dropout(dropout)(x)
    x = layers.Conv1D(filters=inputs.shape[-1], kernel_size=1)(x)
    return x + res

def build_model(
    input_shape,
    head_size,
    num_heads,
    ff_dim,
    num_transformer_blocks,
    mlp_units,
    dropout=0,
    mlp_dropout=0,
):
    inputs = keras.Input(shape=input_shape)
    x = inputs
    for _ in range(num_transformer_blocks):
        x = transformer_encoder(x, head_size, num_heads, ff_dim, dropout)

    x = layers.GlobalAveragePooling1D(data_format="channels_first")(x)
    for dim in mlp_units:
        x = layers.Dense(dim, activation="relu")(x)
        x = layers.Dropout(mlp_dropout)(x)
    outputs = layers.Dense(n_classes, activation="softmax")(x)
    return keras.Model(inputs, outputs)

# x_train, y_train and n_classes are assumed to be defined earlier.
input_shape = x_train.shape[1:]

model = build_model(
    input_shape,
    head_size=256,
    num_heads=4,
    ff_dim=4,
    num_transformer_blocks=4,
    mlp_units=[128],
    mlp_dropout=0.4,
    dropout=0.25,
)

model.compile(
    loss="sparse_categorical_crossentropy",
    optimizer=keras.optimizers.Adam(learning_rate=0.0001),
    metrics=["accuracy"],
)

checkpoint_filepath = "transformer/best_model1.hdf5"
print(os.listdir("transformer"))
model.summary()
# Resume from previously saved weights.
model.load_weights(checkpoint_filepath)

callbacks = [keras.callbacks.EarlyStopping(patience=10, restore_best_weights=True)]
model_checkpoint_callback = tf.keras.callbacks.ModelCheckpoint(
    checkpoint_filepath, monitor="loss", verbose=1,
    save_best_only=True, mode="auto", save_freq="epoch",
)
# Note: this reassignment replaces the checkpoint callback defined just above.
model_checkpoint_callback = tf.keras.callbacks.ModelCheckpoint(
    filepath="model.{epoch:02d}-{val_loss:.2f}.h5"
)
model_checkpoint_tsboard = tf.keras.callbacks.TensorBoard(log_dir="./transformer")

model.fit(
    x_train,
    y_train,
    validation_split=0.2,
    epochs=85,
    batch_size=1,
    # callbacks is already a list, so concatenate instead of nesting it.
    callbacks=callbacks + [model_checkpoint_callback],
)

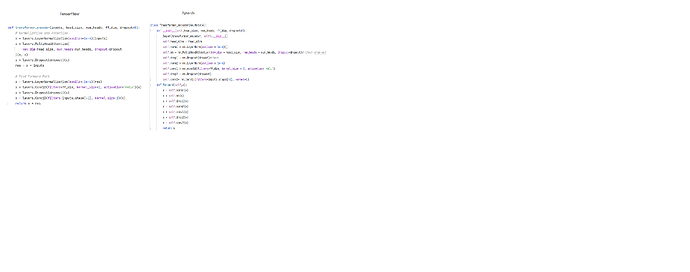

I have tried to convert it to PyTorch as shown below:

import torch
import torch.nn as nn

def transformer_encoder(inputs, head_size, num_heads, ff_dim, dropout=0):
    # Normalization and Attention
    x = nn.LayerNorm(epsilon=1e-6)(inputs)
    x = nn.MultiheadAttention(
        key_dim=head_size, num_heads=num_heads, dropout=dropout
    )(x, x)
    x = nn.Dropout(dropout)(x)
    res = x + inputs

    # Feed Forward Part
    x = nn.LayerNormalization(epsilon=1e-6)(res)
    x = nn.Conv1D(filters=ff_dim, kernel_size=1, activation="relu")(x)
    x = nn.Dropout(dropout)(x)
    x = nn.Conv1D(filters=inputs.shape[-1], kernel_size=1)(x)
    return x + res

def build_model(
    input_shape,
    head_size,
    num_heads,
    ff_dim,
    num_transformer_blocks,
    mlp_units,
    dropout=0,
    mlp_dropout=0,
):
    inputs = torch.tensor(shape=input_shape)
    x = inputs
    for _ in range(num_transformer_blocks):
        x = transformer_encoder(x, head_size, num_heads, ff_dim, dropout)

    x = nn.AvgPool1d(data_format="channels_first")(x)
    for dim in mlp_units:
        x = nn.linear(dim, activation="relu")(x)
        x = nn.Dropout(mlp_dropout)(x)
    outputs = nn.linear(n_classes, activation="softmax")(x)
    return keras.Model(inputs, outputs)

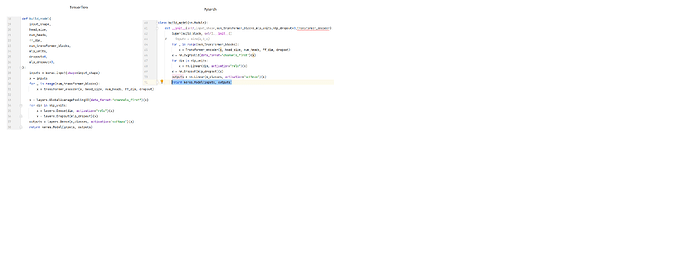

Q1: What should I write in place of `return keras.Model(inputs, outputs)`?
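For context on Q1: PyTorch has no direct counterpart to `keras.Model(inputs, outputs)`. Layers are usually created in the `__init__` of an `nn.Module` subclass and applied in `forward`, which returns the output tensor. The following is only a rough sketch of how the Keras model above might be expressed that way, not a verified one-to-one port. Several things here are assumptions: the class names, the `seq_len`, `in_features` and `d_model` arguments, and the `input_proj` layer are all hypothetical. `nn.MultiheadAttention` has no `key_dim` argument and requires `embed_dim` to be divisible by `num_heads`, so the sketch projects the input to `d_model` first, which the Keras version does not do. The `Conv1D` layers with `kernel_size=1` are replaced by `nn.Linear`, which acts on each time step in the same way.

```python
import torch
import torch.nn as nn


class TransformerEncoderBlock(nn.Module):
    """Pre-norm encoder block loosely mirroring the Keras transformer_encoder."""

    def __init__(self, d_model, num_heads, ff_dim, dropout=0.0):
        super().__init__()
        self.norm1 = nn.LayerNorm(d_model, eps=1e-6)
        # batch_first=True keeps tensors as (batch, seq_len, features), like Keras
        self.attn = nn.MultiheadAttention(
            d_model, num_heads, dropout=dropout, batch_first=True
        )
        self.drop1 = nn.Dropout(dropout)
        self.norm2 = nn.LayerNorm(d_model, eps=1e-6)
        # A kernel_size=1 convolution is a per-time-step linear layer
        self.ff = nn.Sequential(
            nn.Linear(d_model, ff_dim),
            nn.ReLU(),
            nn.Dropout(dropout),
            nn.Linear(ff_dim, d_model),
        )

    def forward(self, inputs):
        # Normalization and attention
        x = self.norm1(inputs)
        x, _ = self.attn(x, x, x, need_weights=False)
        x = self.drop1(x)
        res = x + inputs
        # Feed-forward part
        x = self.ff(self.norm2(res))
        return x + res


class TransformerClassifier(nn.Module):
    """Hypothetical nn.Module playing the role of build_model."""

    def __init__(self, seq_len, in_features, n_classes, d_model, num_heads,
                 ff_dim, num_transformer_blocks, mlp_units,
                 dropout=0.0, mlp_dropout=0.0):
        super().__init__()
        # Assumption: project inputs so that d_model is divisible by num_heads
        self.input_proj = nn.Linear(in_features, d_model)
        self.blocks = nn.ModuleList(
            [TransformerEncoderBlock(d_model, num_heads, ff_dim, dropout)
             for _ in range(num_transformer_blocks)]
        )
        mlp_layers = []
        prev_dim = seq_len  # pooling over the last axis leaves seq_len features
        for dim in mlp_units:
            mlp_layers += [nn.Linear(prev_dim, dim), nn.ReLU(), nn.Dropout(mlp_dropout)]
            prev_dim = dim
        self.mlp = nn.Sequential(*mlp_layers)
        self.head = nn.Linear(prev_dim, n_classes)

    def forward(self, x):
        x = self.input_proj(x)           # (batch, seq_len, d_model)
        for block in self.blocks:
            x = block(x)
        # GlobalAveragePooling1D(data_format="channels_first") averages the last axis
        x = x.mean(dim=-1)               # (batch, seq_len)
        x = self.mlp(x)
        # Return raw logits: nn.CrossEntropyLoss applies log-softmax internally
        return self.head(x)


# Small made-up sizes, purely to check that shapes line up
model = TransformerClassifier(
    seq_len=50, in_features=1, n_classes=2, d_model=16, num_heads=4,
    ff_dim=4, num_transformer_blocks=2, mlp_units=[128],
    dropout=0.25, mlp_dropout=0.4,
)
logits = model(torch.randn(8, 50, 1))
```

In this layout the place of `return keras.Model(inputs, outputs)` is taken by the class itself: constructing `TransformerClassifier(...)` gives the model, and `forward` returns the outputs for whatever batch is passed in.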

Q2: I want to pass data to the model using a PyTorch DataLoader, like this:

for batch_idx, (images_mls, labels_mls, labels_sec_mls) in enumerate(trainloader_mls):

Will this conversion work with it?
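For context on Q2: a loop of this form normally expects the model to be an `nn.Module` that is called on each batch, rather than a function that builds layers around a fixed input tensor. Below is a minimal sketch of such a loop under stated assumptions. The random tensors, the `TensorDataset`, and the tiny stand-in model are all hypothetical placeholders for the real data and network, and since the post does not say how `labels_sec_mls` is meant to be used, the sketch leaves it unused.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical stand-in data: 16 sequences of length 50 with 1 feature each,
# plus two label tensors so that each batch unpacks into three items.
images_all = torch.randn(16, 50, 1)
labels_all = torch.randint(0, 2, (16,))
labels_sec_all = torch.randint(0, 2, (16,))
trainloader_mls = DataLoader(
    TensorDataset(images_all, labels_all, labels_sec_all),
    batch_size=4,
    shuffle=True,
)

# Stand-in model (an assumption); any nn.Module mapping a batch to logits fits here
model = nn.Sequential(nn.Flatten(), nn.Linear(50, 2))
criterion = nn.CrossEntropyLoss()  # expects raw logits and integer class labels
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)

model.train()
num_batches = 0
for batch_idx, (images_mls, labels_mls, labels_sec_mls) in enumerate(trainloader_mls):
    optimizer.zero_grad()
    logits = model(images_mls)           # forward pass on the current batch
    loss = criterion(logits, labels_mls)
    # labels_sec_mls is left unused: its role is not specified in the post
    loss.backward()
    optimizer.step()
    num_batches += 1
```

The loop itself does not depend on the model's internals, so swapping the stand-in for a transformer-style `nn.Module` should only require that the batch shape matches what the model's `forward` expects.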

Thank you