Hi everyone, I’m Jean Feydy, from the ENS of Paris.

After ~18 months of work, Benjamin Charlier (from Montpellier University), Joan Glaunes (from Paris 5 University) and I released v1.0 of the KeOps library last week (“pip install pykeops”): a set of online map-reduce CUDA routines with a PyTorch (+ NumPy, Matlab) interface and full support for automatic differentiation. Our documentation is freely available at:

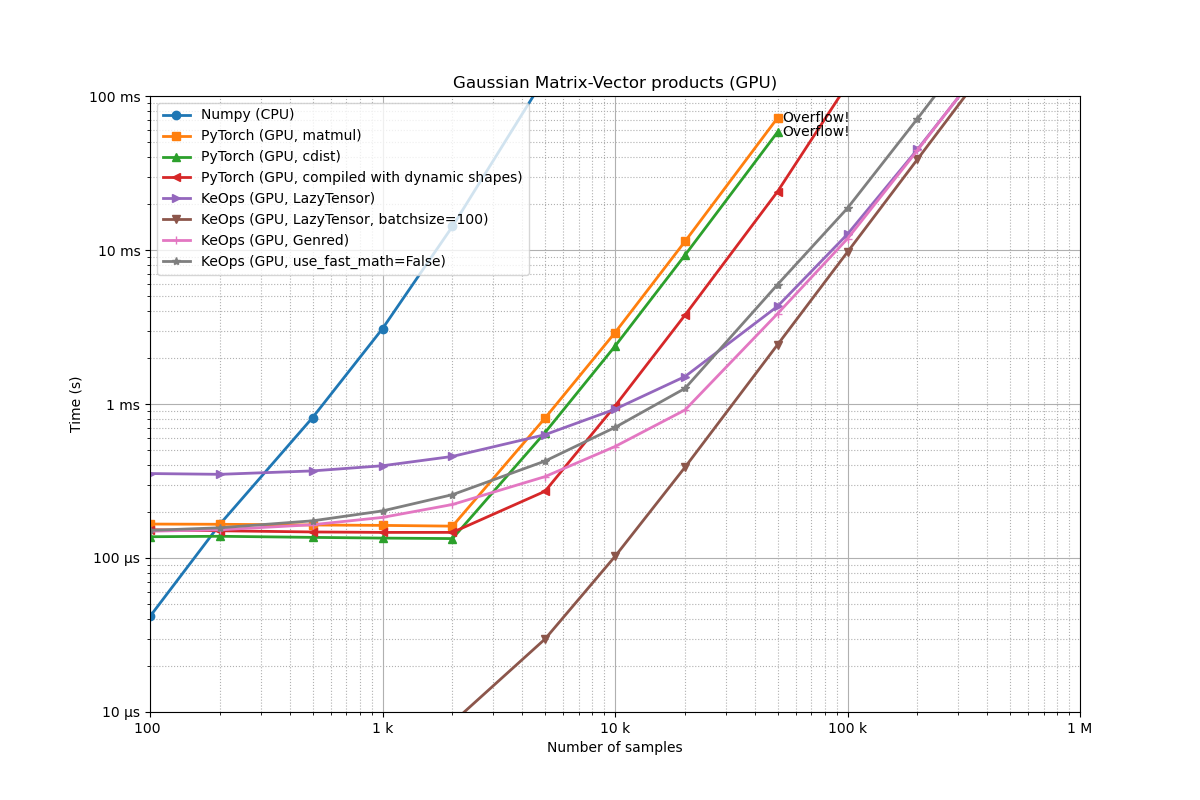

Simply put, this PyTorch extension lets users compute generic map-reduce operations (kernel dot products, nearest-neighbour searches, etc.) on large point clouds without ever storing the full “distance matrix” in memory, while keeping full support for the torch.autograd engine. It is exactly what @vadimkantorov wished for in #9406, and performance is now becoming pretty decent - here, on an RTX 2080:

As we’re targeting a submission to JMLR (software track) by mid-May, we now plan to fully document the C++ inner API, migrate to GitHub (hopefully, as continuous integration is a bit messy…) and add a fully “pythonic” interface to the generic reduction engine, which will allow users of KeOps v1.1 to use expressions such as

```python
import torch
from pykeops.torch import tensor as K

x = K( torch.randn(10**6, 1, 3) )
y = K( torch.randn(1, 10**7, 3) )
b = K( torch.randn(1, 10**7, 2) )

D = (( x - y )**2).sum(2)    # symbolic expression, *not* a (10**6, 10**7) tensor
K_xy = (-D).exp()            # symbolic expression, *not* a (10**6, 10**7) tensor
a = ( K_xy * b ).sum(dim=1)  # differentiable torch tensor of shape (10**6, 2)
```

instead of our (cumbersome) custom syntax.
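For readers who want to see what such a reduction computes, here is a minimal CPU sketch in plain NumPy. It is not how KeOps is implemented (KeOps runs CUDA routines and supports autograd); it only illustrates the idea of reducing a Gaussian kernel block by block so that the full kernel matrix is never stored. The function name `gaussian_kernel_reduction` and the `chunk_size` parameter are made up for this illustration.

```python
import numpy as np


def gaussian_kernel_reduction(x, y, b, chunk_size=1024):
    """Compute a[i] = sum_j exp(-|x[i] - y[j]|^2) * b[j] block by block.

    x: (N, D) array, y: (M, D) array, b: (M, E) array; returns an (N, E) array.
    Only a (chunk_size, M) slice of the kernel matrix exists at any time.
    Hypothetical helper for illustration, not part of pykeops.
    """
    a = np.zeros((x.shape[0], b.shape[1]), dtype=x.dtype)
    y_sqnorm = (y ** 2).sum(axis=1)  # (M,)
    for start in range(0, x.shape[0], chunk_size):
        x_blk = x[start:start + chunk_size]  # (C, D), with C <= chunk_size
        # Squared distances via |x|^2 - 2 x.y + |y|^2, shape (C, M).
        sq_dist = ((x_blk ** 2).sum(axis=1)[:, None]
                   - 2.0 * x_blk @ y.T
                   + y_sqnorm[None, :])
        np.maximum(sq_dist, 0.0, out=sq_dist)  # clamp tiny negatives from round-off
        a[start:start + chunk_size] = np.exp(-sq_dist) @ b
    return a


# For scale: the full kernel matrix from the snippet above would hold
# 10**6 * 10**7 float32 entries, i.e. 4 * 10**13 bytes (about 40 TB).
full_matrix_bytes = 10**6 * 10**7 * 4
```

On small inputs, the result can be checked against the dense broadcasted formula `np.exp(-((x[:, None, :] - y[None, :, :]) ** 2).sum(2)) @ b`, which is the computation that the symbolic expression above describes.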

As of today, we’ve mostly used KeOps for medical shape analysis and super-fast computation of Wasserstein distances (see the geomloss package), but we believe that it could also be used for e.g. Gaussian Process regression and geometric deep learning: I’ve started to get in touch with the developers of GPyTorch and pytorch-geometric.

Needless to say, we’d be more than happy to get feedback from PyTorch devs and users outside of our own (math-oriented) community: if you have any ideas or feature requests, please let us know.

Best regards,

Jean