Sample code:

http://paste.ubuntu.com/p/Bw9mgtb9sD/

Running nvprof with the following command:

`/usr/local/cuda/bin/nvprof --concurrent-kernels on --print-api-summary --print-gpu-summary --output-profile profile.nvvp -f --profile-from-start off --track-memory-allocations on --demangling on --trace gpu,api python stream.py`

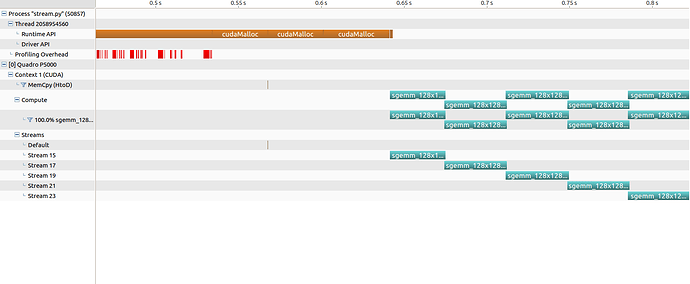

When I open the profile in nvvp, I see that the kernels on the 5 streams run one after the other instead of all at the same time.
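To make "one after the other" precise: the streams are concurrent only if a kernel on one stream is still executing when a kernel on a different stream starts. Below is a minimal sketch of that check. It assumes the kernel start/end timestamps have been copied out of the nvvp timeline into `(stream, start, end)` records. `KernelRecord` and `overlapping_pairs` are hypothetical helpers, not part of nvprof or PyTorch, and the timings in the demo are made up for illustration.

```python
from itertools import combinations
from typing import List, NamedTuple, Tuple


class KernelRecord(NamedTuple):
    """One kernel execution read off a profiler timeline (hypothetical format)."""
    stream: int
    start_ns: int  # kernel start timestamp
    end_ns: int    # kernel end timestamp (exclusive)


def overlapping_pairs(
    records: List[KernelRecord],
) -> List[Tuple[KernelRecord, KernelRecord]]:
    """Return every pair of kernels on *different* streams whose intervals overlap.

    An empty result means the kernels were serialized: no two streams ever
    had a kernel executing at the same time.
    """
    pairs = []
    for a, b in combinations(records, 2):
        if a.stream == b.stream:
            continue  # kernels on the same stream are always ordered
        # Half-open intervals [start, end) overlap iff each starts before the other ends.
        if a.start_ns < b.end_ns and b.start_ns < a.end_ns:
            pairs.append((a, b))
    return pairs


if __name__ == "__main__":
    # Made-up timings: five streams, each kernel starting exactly when the previous one ends.
    serialized = [KernelRecord(stream=s, start_ns=s * 100, end_ns=s * 100 + 100)
                  for s in range(5)]
    # Made-up timings: the same five kernels all running at once.
    concurrent = [KernelRecord(stream=s, start_ns=0, end_ns=100) for s in range(5)]

    print(len(overlapping_pairs(serialized)))  # 0
    print(len(overlapping_pairs(concurrent)))  # 10 (every pair of the 5 streams)
```

A pairwise scan is quadratic, which is fine for the handful of kernels visible in one profiling window; a sort-and-sweep over start times would scale better for long traces.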

This seems wrong. Am I misusing the API, or is there some other problem?

System info:

- PyTorch version: 1.0.1.post2
- Is debug build: No
- CUDA used to build PyTorch: 10.0.130
- OS: Ubuntu 16.04.6 LTS
- GCC version: (Ubuntu 5.4.0-6ubuntu1~16.04.11) 5.4.0 20160609
- CMake version: version 3.5.1
- Python version: 3.6
- Is CUDA available: Yes
- CUDA runtime version: Could not collect
- GPU models and configuration:
  - GPU 0: Quadro P5000
  - GPU 1: Quadro P5000
- Nvidia driver version: 418.39
- cuDNN version: Could not collect
- Versions of relevant libraries:
  - [pip] numpy==1.15.4
  - [pip] torch==1.0.1.post2
  - [pip] torchfile==0.1.0
  - [pip] torchvision==0.2.2
  - [conda] blas 1.0 mkl
  - [conda] mkl 2019.1 144
  - [conda] mkl_fft 1.0.6 py36hd81dba3_0
  - [conda] mkl_random 1.0.2 py36hd81dba3_0
  - [conda] pytorch 1.0.1 py3.6_cuda10.0.130_cudnn7.4.2_2 pytorch
  - [conda] torchfile 0.1.0 <pip>
  - [conda] torchvision 0.2.2 py_3 pytorch