Hi,

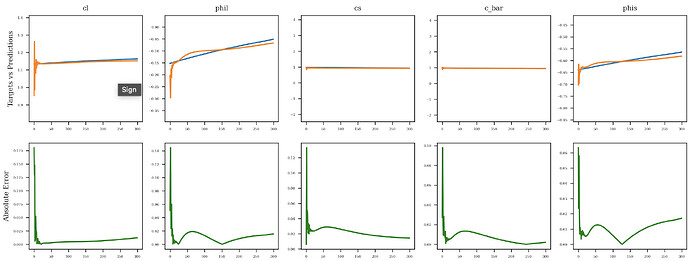

I am trying to use a Seq2Seq model for time series prediction (prediction of some target time series based on other time series). While I managed to get overall good results, for some reason, my model has a poor prediction performance in the initial timesteps. In the figure below, you can see the predictions vs target for 5 of the output time series:

I am not sure, but I suspect I am doing something wrong with the hidden state initialization. Here is the code I am using for the Seq2Seq model:

    class Encoder(nn.Module):
        def __init__(self, config):
            super(Encoder, self).__init__()
            self.input_size = config.input_size
            self.hidden_size = config.gru_hidden_size
            self.output_size = config.output_size
            self.n_layers = config.n_layers
            self.seq_len = config.seq_len
            self.config = config
            bidirectional = True
            self.lstm = nn.GRU(self.input_size, config.gru_hidden_size, config.n_layers, bidirectional=bidirectional)

        def forward(self, inputs):
            inputs = inputs.permute(1,0,2)
            output, hidden = self.lstm(inputs)
            return hidden

    class Decoder(nn.Module):
        def __init__(self, config):
            super(Decoder, self).__init__()
            self.input_size = config.input_size
            self.hidden_size = config.gru_hidden_size
            self.output_size = config.output_size
            self.n_layers = config.n_layers
            self.seq_len = config.seq_len
            self.config = config
            bidirectional = True
            self.lstm = nn.GRU(self.output_size, config.gru_hidden_size, config.n_layers, bidirectional=bidirectional)
            if bidirectional:
                self.fc = nn.Linear(2*config.gru_hidden_size, config.output_size)
            else:
                self.fc = nn.Linear(config.gru_hidden_size, config.output_size)

        def forward(self, input, hidden):
            input = input.unsqueeze(0)
            output, hidden = self.lstm(input, hidden)
            output = self.fc(output)
            return output, hidden

    class Seq2Seq(nn.Module):
        model_name = "Seq2Seq"

        def __init__(self, config):
            super(Seq2Seq, self).__init__()
            self.encoder = Encoder(config)
            self.decoder = Decoder(config)

        def forward(self, inputs, targets):
            seq_len = targets.shape[1]
            hidden = self.encoder(inputs)
            preds = torch.zeros(targets.shape)
            input = targets[:,0,:]
            preds[:,0,:] = input
            for t in range(1,seq_len):
                output, hidden = self.decoder(input, hidden)
                preds[:,t,:] = output
                input = output.squeeze(0)
            return preds
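
To make the hidden state hand-off concrete, here is a minimal, standalone shape check of the step I am unsure about. It is only a sketch: the sizes (`batch`, `src_len`, `n_in`, `n_out`, `hidden_size`, `n_layers`) are made-up values for illustration, not my real config, and it uses bare `nn.GRU` modules rather than the classes above. It only shows that the final hidden state of a bidirectional encoder GRU has the shape a bidirectional decoder GRU accepts as its initial state; it says nothing about whether that initialization is a good one.

```python
import torch
import torch.nn as nn

# Hypothetical sizes, chosen only for illustration (not the real config).
batch, src_len, n_in, n_out, hidden_size, n_layers = 8, 20, 3, 5, 16, 2

encoder_gru = nn.GRU(n_in, hidden_size, n_layers, bidirectional=True)
decoder_gru = nn.GRU(n_out, hidden_size, n_layers, bidirectional=True)

# Inputs arrive as (batch, time, features); nn.GRU defaults to (time, batch, features).
inputs = torch.randn(batch, src_len, n_in)
_, hidden = encoder_gru(inputs.permute(1, 0, 2))

# For a bidirectional GRU, h_n has shape (num_layers * 2, batch, hidden_size).
print(hidden.shape)

# One decoder step: a single timestep of shape (1, batch, n_out),
# started from the encoder's final hidden state.
first_input = torch.randn(batch, n_out).unsqueeze(0)
step_out, next_hidden = decoder_gru(first_input, hidden)

print(step_out.shape)     # (1, batch, 2 * hidden_size), before any Linear layer
print(next_hidden.shape)  # same shape as the encoder's hidden state
```

So the shapes line up, which is why the code above runs without errors; my question is whether passing the encoder state through like this is the right way to start the decoder.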

Does anyone know what I am missing?