zyh3826

September 20, 2022, 6:55am


I found the result of torch.nn.LayerNorm equals torch.nn.InstanceNorm1d, why?

```python
import torch

batch_size, seq_size, dim = 2, 3, 4
x = torch.randn(batch_size, seq_size, dim)

# layer norm
layer_norm = torch.nn.LayerNorm(dim, elementwise_affine=False)
print('y_layer_norm: ', layer_norm(x))
print('=' * 30)

# custom instance norm
eps: float = 0.00001
mean = torch.mean(x, dim=-1, keepdim=True)
var = torch.var(x, dim=-1, keepdim=True, unbiased=False)
print('y_custom: ', (x - mean) / torch.sqrt(var + eps))
print('=' * 30)

# instance norm
instance_norm = torch.nn.InstanceNorm1d(dim, affine=False)
print('y_instance_norm: ', instance_norm(x))
print('=' * 30)

# follow the description in https://pytorch.org/docs/stable/generated/torch.nn.LayerNorm.html
# For example, if normalized_shape is (3, 5) (a 2-dimensional shape),
# the mean and standard-deviation are computed over the last 2 dimensions of the input (i.e. input.mean((-2, -1)))
mean = torch.mean(x, dim=(-2, -1), keepdim=True)
var = torch.var(x, dim=(-2, -1), keepdim=True, unbiased=False)
print('y_custom1: ', (x - mean) / torch.sqrt(var + eps))
print('=' * 30)
```

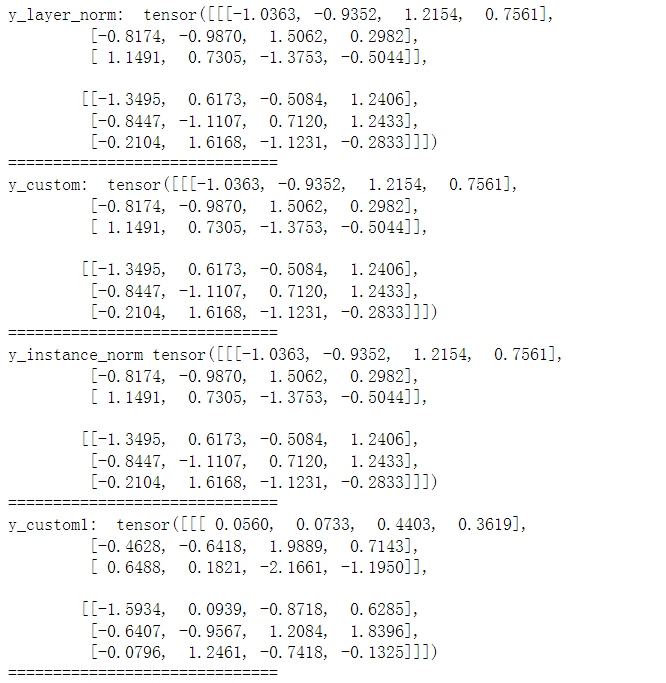

results:

In the results, I found that LayerNorm gives the same output as InstanceNorm1d. I also computed the normalization manually, and it looks like the description in the LayerNorm docs may not be correct. Am I missing something, or are LayerNorm and InstanceNorm1d in PyTorch exactly equivalent?
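A minimal sketch for checking the docs' wording, assuming the same shapes as in the snippet above (`manual_norm` is a hypothetical helper written only for this comparison): the "last 2 dimensions" sentence describes a 2-D `normalized_shape` such as `(seq_size, dim)`. With `normalized_shape=dim`, as in `layer_norm` above, LayerNorm takes its statistics over the last dimension only, so `y_custom1` would not be expected to match it.

```python
import torch

torch.manual_seed(0)
batch_size, seq_size, dim = 2, 3, 4
x = torch.randn(batch_size, seq_size, dim)
eps = 1e-5  # LayerNorm's default eps


def manual_norm(t, dims):
    # hypothetical helper: normalize over `dims` with the biased variance
    mean = t.mean(dim=dims, keepdim=True)
    var = t.var(dim=dims, keepdim=True, unbiased=False)
    return (t - mean) / torch.sqrt(var + eps)


# 1-D normalized_shape: statistics over the last dimension only
ln_1d = torch.nn.LayerNorm(dim, elementwise_affine=False)
# 2-D normalized_shape: statistics over the last two dimensions
ln_2d = torch.nn.LayerNorm((seq_size, dim), elementwise_affine=False)

y_1d = ln_1d(x)
y_2d = ln_2d(x)

print(torch.allclose(y_1d, manual_norm(x, (-1,)), atol=1e-6))     # True
print(torch.allclose(y_2d, manual_norm(x, (-2, -1)), atol=1e-6))  # True
```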

Hope someone can answer this question, thanks!

snio89

November 2, 2022, 8:48am


This seems to be a bug in nn.InstanceNorm1d when affine=False.

Issue opened 09:02AM - 06 Jan 21 UTC (labels: module: nn, triaged, needs design, module: norms and normalization)

## 🐛 Bug

When `nn.InstanceNorm1d` is used without an affine transformation, it does not warn the user even if the channel size of the input is inconsistent with the `num_features` parameter. Although `num_features` does not matter when computing `InstanceNorm(num_features, affine=False)`, I think it should warn the user if a wrong argument/input is given.

## To Reproduce & Expected behavior

```python
import torch
import torch.nn as nn

# we define an InstanceNorm1d layer without affine transformation, where num_features=7
# note that affine is set False by default
m = nn.InstanceNorm1d(7)

# here, the input with the wrong channel size (3) is given.
input = torch.randn(2, 3, 5)

# the following operation should warn the user, although there's no problem with performing the computation.
output = m(input)
```

I'm not sure whether this is intended behavior or not.
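A minimal sketch of why the mismatch goes unnoticed, assuming the defaults `affine=False` and `track_running_stats=False`: `num_features` is used to size the optional affine parameters and running-statistics buffers, neither of which exists under these defaults, so layers built with 7 and with 3 features give the same output on a 3-channel input.

```python
import warnings

import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(2, 3, 5)  # (N, C, L) with C = 3 channels

with warnings.catch_warnings():
    # newer PyTorch releases may warn about the num_features mismatch; silenced here
    warnings.simplefilter("ignore")
    out_wrong = nn.InstanceNorm1d(7)(x)  # num_features does not match C

out_right = nn.InstanceNorm1d(3)(x)  # num_features matches C

# No weight, bias, or running buffers are created with these defaults,
# so num_features does not enter the computation.
print(torch.allclose(out_wrong, out_right))  # True
```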

## Environment

PyTorch version: 1.7.0+cu110

Is debug build: True

CUDA used to build PyTorch: 11.0

ROCM used to build PyTorch: N/A

OS: Microsoft Windows 10 Pro

GCC version: (MinGW.org GCC-8.2.0-3) 8.2.0

Clang version: Could not collect

CMake version: version 3.14.3

Python version: 3.6 (64-bit runtime)

Is CUDA available: True

CUDA runtime version: Could not collect

GPU models and configuration: GPU 0: GeForce RTX 2060

Nvidia driver version: 460.89

cuDNN version: Could not collect

HIP runtime version: N/A

MIOpen runtime version: N/A

Versions of relevant libraries:

[pip3] numpy==1.19.3

[pip3] pytorch-lightning==1.0.3

[pip3] pytorch-ranger==0.1.1

[pip3] torch==1.7.0+cu110

[pip3] torch-optimizer==0.0.1a15

[pip3] torchvision==0.8.1+cu110

[conda] Could not collect

cc @albanD @mruberry @jbschlosser

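nn.InstanceNorm1d should take an input of shape (batch_size, dim, seq_size). With affine=False, nn.InstanceNorm1d can take an input of the wrong shape (batch_size, seq_size, dim) without raising an error and produces a LayerNorm-like result: seq_size is then treated as the channel axis, so each (sample, position) slice is normalized over dim, which is exactly what LayerNorm(dim) computes.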
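A minimal sketch contrasting the two layouts, reusing the shapes from the question; the manual reference `y_ref` is added only for comparison and is not part of either API. With the intended layout the statistics are taken over seq_size, so the result no longer matches LayerNorm(dim).

```python
import warnings

import torch
import torch.nn as nn

torch.manual_seed(0)
batch_size, seq_size, dim = 2, 3, 4
eps = 1e-5  # default eps of both LayerNorm and InstanceNorm1d
x = torch.randn(batch_size, seq_size, dim)

layer_norm = nn.LayerNorm(dim, elementwise_affine=False)
instance_norm = nn.InstanceNorm1d(dim, affine=False)
y_ln = layer_norm(x)

# Misused layout (as in the question): seq_size is read as the channel axis,
# so each (sample, position) row is normalized over dim, like LayerNorm(dim).
with warnings.catch_warnings():
    # newer PyTorch releases may warn that seq_size != num_features; silenced here
    warnings.simplefilter("ignore")
    y_misused = instance_norm(x)

# Intended layout: (batch_size, dim, seq_size), so each (sample, feature)
# channel is normalized over seq_size; transpose back to compare.
y_intended = instance_norm(x.transpose(1, 2)).transpose(1, 2)

# Reference: statistics over the seq_size axis (dim=1) of the original x
mean_seq = x.mean(dim=1, keepdim=True)
var_seq = x.var(dim=1, keepdim=True, unbiased=False)
y_ref = (x - mean_seq) / torch.sqrt(var_seq + eps)

print(torch.allclose(y_misused, y_ln, atol=1e-6))    # True
print(torch.allclose(y_intended, y_ref, atol=1e-6))  # True
print(torch.allclose(y_intended, y_ln, atol=1e-6))   # False
```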
