Steps to reproduce:

- Load an AlexNet model from torchvision

- Set the model's first weight to `torch.tensor(float('inf'))`

- Register a forward hook and check the output of the first conv layer and the first ReLU layer

I suspect this bug is caused by the way torch handles inf values.
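For reference, a few basic inf operations in torch are shown below. This is only a sketch of standard floating-point behaviour and is not claimed to be the cause of the issue; the variable names are illustrative.

```python
import torch

inf = torch.tensor(float("inf"))

prod = inf * 0.0   # inf times zero is nan
diff = inf - inf   # inf minus inf is nan
clipped = torch.relu(torch.tensor([float("inf"), float("-inf")]))  # +inf stays inf, -inf becomes 0

print(prod, diff, clipped)
```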

Screenshots:

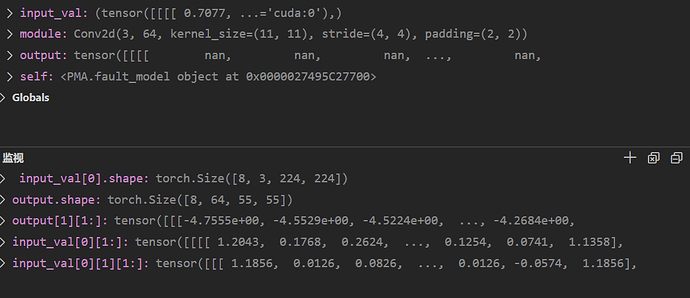

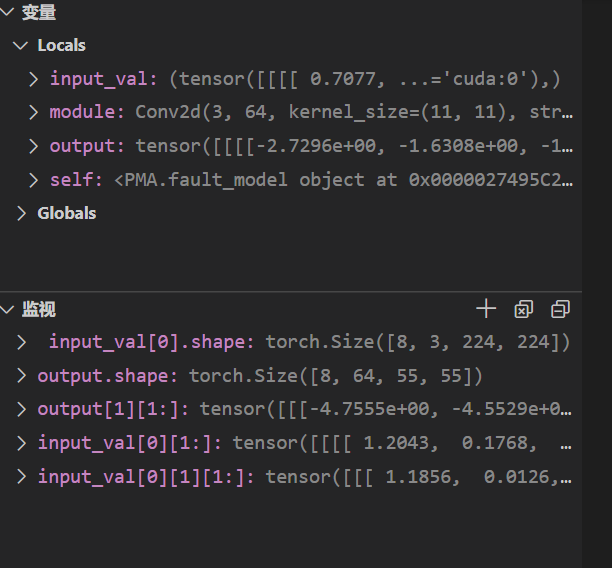

Conv Layer’s output and another parameter:

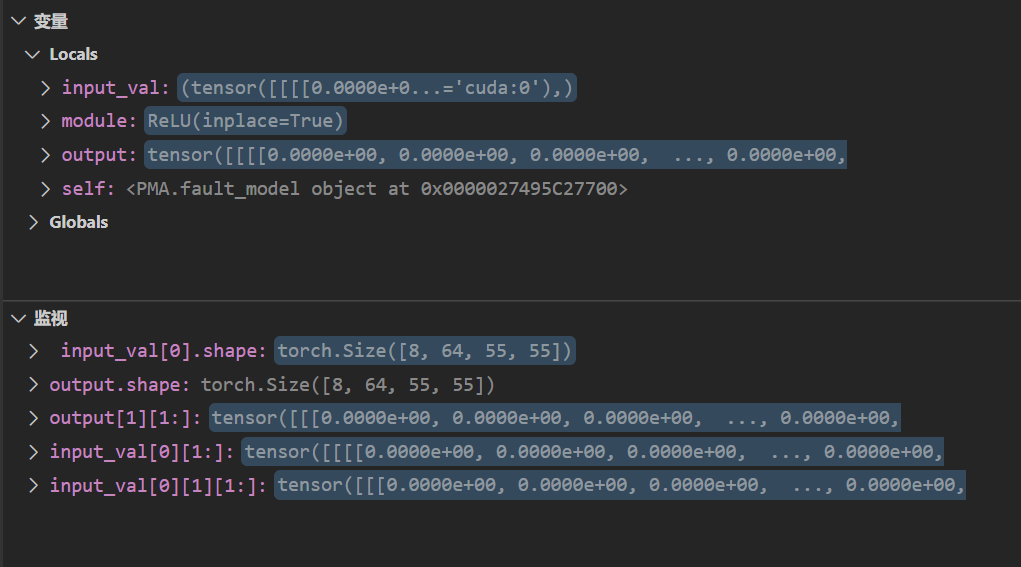

ReLU Layer’s output and another parameter:

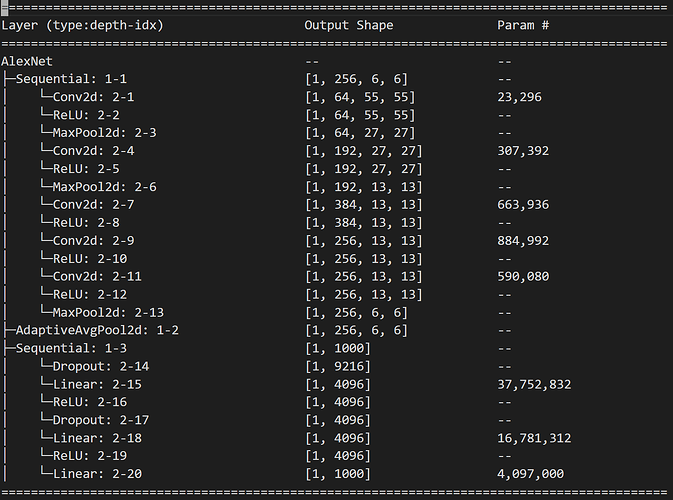

Torchinfo’s summary of AlexNet

Note: input_val was a tuple