import torch

a = torch.rand(5, 5, requires_grad=False)

b = torch.rand(5, 5, requires_grad=False)

c = torch.rand(5, 5, requires_grad=True)

f = a + b

g = b + c

y = f + g

y.backward(torch.ones_like(y))

print(f.is_leaf, f.grad_fn, f.grad)

Output: True None None

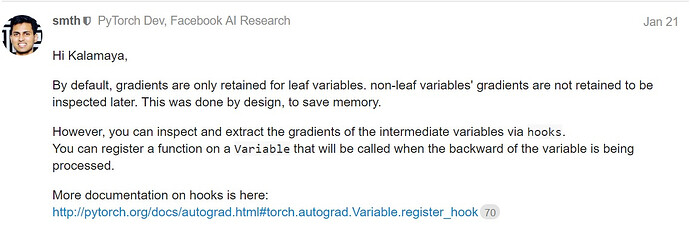

Why doesn't f have a grad after the backward pass? It is a leaf variable.
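A minimal sketch that may help narrow this down: it repeats the same setup and prints `requires_grad` next to `is_leaf` for each tensor. As far as I understand autograd, `.grad` is only populated for leaf tensors that also have `requires_grad=True`, and a tensor computed only from inputs that do not require grad is treated as a leaf by convention. The loop and the variable names in it are only for illustration.

```python
import torch

a = torch.rand(5, 5, requires_grad=False)
b = torch.rand(5, 5, requires_grad=False)
c = torch.rand(5, 5, requires_grad=True)

f = a + b  # neither input requires grad, so f does not require grad either
g = b + c  # c requires grad, so g is a non-leaf tensor with a grad_fn
y = f + g

y.backward(torch.ones_like(y))

for name, t in [("a", a), ("b", b), ("c", c), ("f", f), ("g", g)]:
    # Only read .grad on leaf tensors; reading it on a non-leaf emits a warning.
    grad_set = t.is_leaf and t.grad is not None
    print(name, "is_leaf:", t.is_leaf, "requires_grad:", t.requires_grad,
          "grad set:", grad_set)
```

If this reading is right, `f` shows up as a leaf only because it does not require grad, so it is never part of the graph that `backward` walks, and `c` is the only tensor here that ends up with a populated `.grad`.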