Hi guys,

I am wondering if anyone can clearly explain the difference between learning rate decay (learning rate scheduling) and weight decay (L2 regularization).

As I understand it, learning rate decay controls how quickly or slowly the weights are updated, and weight decay adds a penalty to the loss function to avoid overfitting.

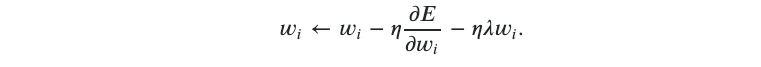

As shown in the image I've attached, is it right that both of these affect the weight update at the same time?

If so, is it preferable to use a learning rate schedule and weight decay together?

Thanks in advance!!

L2 regularization, also called weight decay, is a regularization method, like L1 regularization or dropout. As you mentioned, its purpose is to avoid overfitting, which it does by penalizing large weights.
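A worked form of the penalty may help. Assuming plain (stochastic) gradient descent with learning rate $\eta$ and a penalty strength $\lambda$ (symbols introduced here for illustration), the L2-regularized loss, its gradient, and the resulting update are:

$$
\begin{aligned}
\tilde{L}(w) &= L(w) + \frac{\lambda}{2}\lVert w \rVert_2^2 \\
\nabla \tilde{L}(w) &= \nabla L(w) + \lambda w \\
w_{t+1} &= w_t - \eta\,\nabla \tilde{L}(w_t) = (1 - \eta\lambda)\,w_t - \eta\,\nabla L(w_t)
\end{aligned}
$$

The extra term $\lambda w_t$ shrinks each weight by the factor $(1 - \eta\lambda)$ on every step, which is why L2 regularization is also called weight decay. This exact equivalence holds for plain SGD; for adaptive optimizers such as Adam, adding an L2 term to the loss and decaying the weights directly are not the same thing.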

Learning rate decay changes the learning rate hyperparameter over the course of training. Choosing a single fixed learning rate is challenging: a value too small may result in a long training process that could get stuck, whereas a value too large may result in learning a sub-optimal set of weights too fast or in an unstable training process. We want to take bigger steps at the start of training and smaller, more careful steps as we get close to the optimum, so that we don't overshoot it.
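As a minimal sketch, here are two common schedules in plain Python. The function names and default values (halve the rate every 10 epochs; decay constant `k = 0.1`) are illustrative assumptions, not taken from any particular library.

```python
import math


def step_decay(initial_lr: float, epoch: int, drop: float = 0.5, epochs_per_drop: int = 10) -> float:
    """Step decay: multiply the learning rate by `drop` every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


def exponential_decay(initial_lr: float, epoch: int, k: float = 0.1) -> float:
    """Exponential decay: lr = initial_lr * exp(-k * epoch), shrinking smoothly every epoch."""
    return initial_lr * math.exp(-k * epoch)


if __name__ == "__main__":
    # Large steps early in training, progressively smaller steps later.
    for epoch in (0, 10, 20, 30):
        print(epoch, step_decay(0.1, epoch), exponential_decay(0.1, epoch))
```

In practice, frameworks ship built-in schedulers (for example PyTorch's `torch.optim.lr_scheduler.StepLR` and `ExponentialLR`), so you rarely need to write these by hand.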

Regarding your question: yes, both affect every weight update, and you can use them together: L2 regularization to prevent overfitting, and learning rate decay to avoid overshooting.
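To see that both act on the same update, here is a toy sketch: SGD on the one-parameter loss $(w - 3)^2$, combined with a step learning-rate schedule and L2 weight decay. The loss, the function names (`toy_loss_grad`, `step_lr`, `train`), and all hyperparameter values are hypothetical, chosen only for illustration.

```python
def toy_loss_grad(w: float) -> float:
    """Gradient of the hypothetical toy loss L(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)


def step_lr(initial_lr: float, epoch: int, drop: float = 0.5, epochs_per_drop: int = 10) -> float:
    """Step learning-rate decay: multiply by `drop` every `epochs_per_drop` epochs."""
    return initial_lr * drop ** (epoch // epochs_per_drop)


def train(w0: float, epochs: int, initial_lr: float, weight_decay: float) -> float:
    w = w0
    for epoch in range(epochs):
        lr = step_lr(initial_lr, epoch)  # learning rate decay: the step size shrinks over time
        grad = toy_loss_grad(w)
        # One SGD step with L2 weight decay:
        #   w <- w - lr * (grad + weight_decay * w) = (1 - lr * weight_decay) * w - lr * grad
        # The current lr scales both the loss gradient and the weight-decay shrinkage.
        w = (1.0 - lr * weight_decay) * w - lr * grad
    return w


if __name__ == "__main__":
    print("no weight decay: ", train(0.0, 50, 0.4, 0.0))
    print("weight decay 0.1:", train(0.0, 50, 0.4, 0.1))
```

Without weight decay the weight converges to 3; with `weight_decay=0.1` it settles near $6/(2 + 0.1) \approx 2.857$, the minimizer of $(w - 3)^2 + \tfrac{0.1}{2} w^2$, because the penalty pulls the weight toward zero. Roughly speaking, the learning rate decides how big each step is, while weight decay changes where the steps lead.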

I hope this makes it a little clearer.