Here is my code. I tried your method and got this error: 'CosineAnnealingLR' object has no attribute 'get_last_lr'

import math
import time

import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sn
import torch
import torch.nn as nn
from sklearn.metrics import classification_report, confusion_matrix
from torch.utils.data import DataLoader
from torchsampler import ImbalancedDatasetSampler  # assumed source of this sampler
from torchvision import datasets, models, transforms

# Run the training batches
def train(train_loader, model, criterion, optimizer, i, trn_corr):
    # switch to train mode
    MobileNet.train()
    epoch = i
    #lrs.step()
    for b, (images, labels) in enumerate(train_loader):
        if torch.cuda.is_available():
            images = images.cuda()
            labels = labels.cuda()
        b+=1
        # Apply the model
        y_pred = MobileNet(images)
        loss = criterion(y_pred, labels)
        # Tally the number of correct predictions
        train_predicted = torch.max(y_pred.data, 1)[1]
        batch_corr = (train_predicted == labels).sum()
        trn_corr += batch_corr
        accuracy = trn_corr.item()*100/(b*batch)
        # Update parameters
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        lrs.step()
        lrs_track.append(lrs.get_last_lr())
        # Print interim results
        if b%2 == 0:
            print(f'epoch: {epoch:2} batch: {b:4} loss: {loss.item():10.8f} accuracy: {accuracy:3.3f}%')
        if b%14 == 0:
            train_acc.append(accuracy)
            train_losses.append(loss)
            train_correct.append(trn_corr)
            mean_loss = sum(train_losses)/len(train_losses)
            labels = labels.cpu()
            train_predicted = train_predicted.cpu()
            print(classification_report(labels.view(-1), train_predicted.view(-1), target_names=class_names))
            print("\n")
            for param_group in optimizer.param_groups:
                lr_track.append(param_group['lr'])
            print("\n")

def test(test_loader, model, criterion, i, epochs, tst_corr):
    # switch to evaluate mode
    MobileNet.eval()
    # Run the testing batches
    with torch.no_grad():
        for b, (pics, names) in enumerate(test_loader):
            if torch.cuda.is_available():
                pics = pics.cuda()
                names = names.cuda()
            # Apply the model
            output = MobileNet(pics)
            val_loss = criterion(output, names)
            # Tally the number of correct predictions
            test_predicted = torch.max(output.data, 1)[1]
            tst_corr += (test_predicted == names).sum()
            Val_accuracy = tst_corr.item()*100/(test_batch)
            # Print Validation results
            print(f'test_epoch: {i:2} batch: {b:4} Val_loss: {val_loss.item():10.8f} Val_accuracy: {Val_accuracy:3.3f}%')
    test_acc.append(Val_accuracy)
    test_losses.append(val_loss)
    print("\n")
    names = names.cpu()
    test_predicted = test_predicted.cpu()
    print(classification_report(names.view(-1), test_predicted.view(-1), target_names=class_names))
    print("\n")
    if i == epochs-1:
        names = names.cpu()
        test_predicted = test_predicted.cpu()
        arr = confusion_matrix(names.view(-1), test_predicted.view(-1))
        df_cm = pd.DataFrame(arr, class_names, class_names)
        plt.figure(figsize = (9,6))
        sn.heatmap(df_cm, annot=True, fmt="d", cmap='BuGn')
        plt.xlabel("prediction")
        plt.ylabel("True label")
        plt.show()

if __name__ == '__main__':
    torch.manual_seed(2)
    MobileNet = models.mobilenet_v2(num_classes= 3, pretrained = True)
    class_names = ['cat', 'dog', 'horse']
    cat = 2*(108/108) # was 2*(253/253)
    dog = 108/396
    horse = 108/396
    weights = [cat, dog, horse]
    class_weights=torch.FloatTensor(weights)
    criterion = nn.CrossEntropyLoss(weight = class_weights)
    #criterion = nn.CrossEntropyLoss()
    if torch.cuda.is_available():
        MobileNet.cuda()
        class_weights = class_weights.cuda()
        criterion = criterion.cuda()
    learning_rate = 0.001
    optimizer = torch.optim.SGD(MobileNet.parameters(), lr = learning_rate, weight_decay = 4e-5, momentum= 0.9)
    #optimizer = torch.optim.Adam(MobileNet.parameters(), lr = learning_rate, weight_decay = 4e-5)
    train_transform = transforms.Compose([
        transforms.RandomResizedCrop(224,scale = (0.2,1.0)),
        #transforms.RandomRotation(15), # rotate +/- 15 degrees
        transforms.RandomHorizontalFlip(), # reverse 50% of images
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406],
                             [0.229, 0.224, 0.225])
    ])
    test_transform = transforms.Compose([
        transforms.Resize(256),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406],
                             [0.229, 0.224, 0.225])
    ])
    train_data = datasets.ImageFolder('C:/Users/deonh/Pictures/Summer/Dark/train', transform=train_transform)
    test_data = datasets.ImageFolder('C:/Users/deonh/Pictures/Summer/Dark/val', transform=test_transform)
    batch=64
    test_batch = len(test_data)
    train_loader = DataLoader(train_data, batch_size=batch, shuffle=True)
    test_loader = DataLoader(test_data, batch_size=batch, shuffle=False)
    if torch.cuda.is_available():
        #train_loader = DataLoader(train_data,batch_size=batch,num_workers = 4, shuffle=True, pin_memory = True)
        train_loader = DataLoader(train_data,sampler=ImbalancedDatasetSampler(train_data), batch_size=batch,num_workers = 4, shuffle=False, pin_memory = True)
        test_loader = DataLoader(test_data, batch_size=test_batch, num_workers = 4, shuffle=False, pin_memory = True)
    Q = math.floor(len(train_data)/batch)

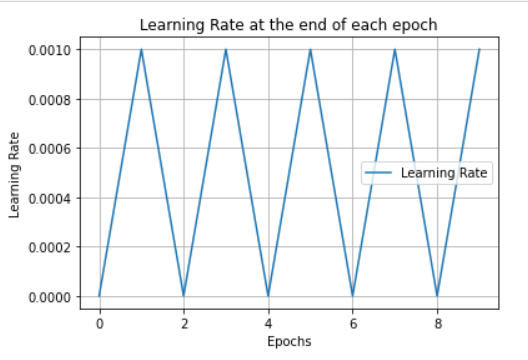

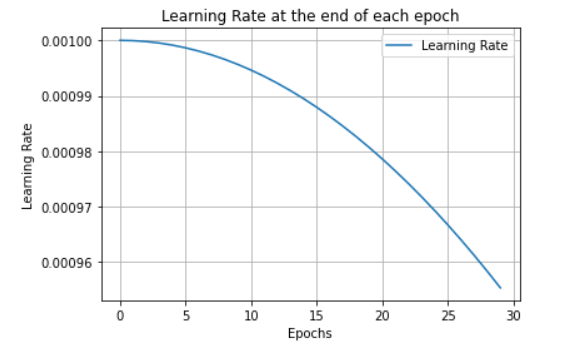

    lrs = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max = 900)
    epoch = 5
    train_losses = []
    test_losses = []
    train_correct = []
    test_correct = []
    train_acc = []
    test_acc = []
    lr_track = []
    lrs_track = []
    start_time = time.time()
    for i in range(epoch):
        trn_corr = 0
        tst_corr = 0
        #Run training for one epoch
        train(train_loader, MobileNet, criterion, optimizer, i,trn_corr)
        #evaluate the validation/test
        test(test_loader, MobileNet, criterion, i, epoch,tst_corr)
        #lrs.step()
    print("%s hours" %((time.time() - start_time)/3600))
    torch.save(MobileNet.state_dict(), 'MobileNetV2.pt')
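
Separately from the script above, here is a minimal sketch of one way to record the learning rate without depending on `get_last_lr`. It rests on an assumption: the error suggests the installed PyTorch predates that method (I believe it arrived in 1.4, but check your version). The helper names `current_lrs` and `cosine_lr` are hypothetical, not part of PyTorch. The first falls back to `optimizer.param_groups`, which the training loop already reads. The second evaluates the closed-form cosine schedule with `lr = 0.001`, `T_max = 900`, and a minimum rate of 0, which should hold for steps up to `T_max` when nothing else changes the rate.

```python
import math
from types import SimpleNamespace


def current_lrs(optimizer, scheduler=None):
    """Return the current learning rate of every parameter group."""
    # Prefer the scheduler's own accessor when this build provides it.
    get_last_lr = getattr(scheduler, "get_last_lr", None)
    if callable(get_last_lr):
        return list(get_last_lr())
    # Otherwise read the values the optimizer is actually using.
    return [group["lr"] for group in optimizer.param_groups]


def cosine_lr(step, base_lr=0.001, t_max=900, eta_min=0.0):
    """Closed-form cosine annealing value after `step` scheduler steps."""
    cosine = math.cos(math.pi * step / t_max)
    return eta_min + (base_lr - eta_min) * (1 + cosine) / 2


if __name__ == "__main__":
    # Stand-ins for a real optimizer and a scheduler lacking get_last_lr.
    fake_optimizer = SimpleNamespace(param_groups=[{"lr": 0.001}])
    old_scheduler = SimpleNamespace()
    print(current_lrs(fake_optimizer, old_scheduler))
    print(cosine_lr(450))
```

In the training loop this would mean replacing `lrs_track.append(lrs.get_last_lr())` with `lrs_track.append(current_lrs(optimizer, lrs))`. The tracked values can then be compared against `cosine_lr(step)` to confirm the scheduler is stepping once per batch.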