Here is my problem setting:

I have a CSV file with 700 entries. Each entry contains an image name, the class the image belongs to (9 classes), and a confidence score for that class label, which takes discrete values from 0 to 10. I want to report the train/test accuracy using leave-one-out cross-validation. Here are my questions:

- What representation of images should I use for leave-one-out CV? I understand this is now more of a machine learning problem, but should I use the penultimate-layer features of ResNet50 (the 2048-dimensional vector just before the final classification layer) as the image representation, or is there another method you would suggest?

- Should I just use sklearn's leave-one-out CV, or should I use skorch or another library/tool?

- First, I only want to see whether I can detect these 9 categories from the images (or from an image representation such as a ResNet feature vector). Basically, how do I feed one vector per data point into sklearn/skorch? This tutorial and many similar ones deal with other types of data rather than feature vectors, so how should I do LOO CV on my kind of data?

- Second, how should I incorporate the confidence scores of the labels into my problem? Should I concatenate the confidence score to my length-2048 vector (intuitively, this doesn't seem right to me)? Is there a similar problem setting in which labels come with confidence scores, and how should I proceed with leave-one-out cross-validation when I have them?

- This question may be misguided or out of place: can I do transfer learning with LOO CV? I ask because four of my 9 classes are severely underrepresented. For example, class 1 has only 3 images and class 4 only 12, while class 3 has 170 images and classes 7 and 5 have 80 and 50 images respectively. If I could combine transfer learning and LOO CV for this severely imbalanced dataset, I would not need the first four steps. Please let me know whether this is feasible at all.
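A minimal sketch of how the representation, LOO and confidence-score questions could fit together. Plain scikit-learn is enough once features are precomputed, since its estimators take an `(n_samples, n_features)` array. Everything below is an assumption for illustration: random numbers stand in for the ResNet50 features, the labels and 0-10 scores are synthetic, the score-to-weight mapping is hypothetical, and `loo_accuracy` is a made-up helper. The confidence score is passed as a per-sample `sample_weight` to `LogisticRegression.fit` rather than concatenated to the features, because a new test image would not come with a score. `class_weight="balanced"` is one common way to reweight rare classes.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import LeaveOneOut

rng = np.random.default_rng(0)

# Synthetic stand-ins (assumption): in practice X holds one ResNet50 feature
# vector per image (2048 columns; 64 here to keep the demo fast), y the class
# labels and conf the 0-10 confidence scores read from the CSV.
n_samples, n_features, n_classes = 60, 64, 9
y = np.arange(n_samples) % n_classes
X = rng.normal(size=(n_samples, n_features)) + 0.5 * y[:, None]
conf = rng.integers(0, 11, size=n_samples)  # discrete scores 0..10

# Hypothetical mapping from score to weight, so a score of 0 still counts a little.
weights = (conf + 1) / 11.0


def loo_accuracy(X, y, sample_weight=None):
    """Fit on n-1 samples, test on the held-out one, repeat for every sample."""
    correct = []
    for train_idx, test_idx in LeaveOneOut().split(X):
        # class_weight="balanced" upweights rare classes (e.g. the 3-image class)
        model = LogisticRegression(max_iter=1000, class_weight="balanced")
        fit_kwargs = {}
        if sample_weight is not None:
            # Confidence scores only weight the training loss; the held-out
            # image is predicted from its features alone.
            fit_kwargs["sample_weight"] = sample_weight[train_idx]
        model.fit(X[train_idx], y[train_idx], **fit_kwargs)
        correct.append(model.predict(X[test_idx])[0] == y[test_idx][0])
    return float(np.mean(correct))


acc_plain = loo_accuracy(X, y)
acc_weighted = loo_accuracy(X, y, weights)
print("LOO accuracy, unweighted:          %.3f" % acc_plain)
print("LOO accuracy, confidence-weighted: %.3f" % acc_weighted)
```

With LOO, each image of a 3-image class is still held out once, but the model then trains on only the other 2, so per-class results for such classes are very noisy. Fine-tuning the CNN itself inside every fold would mean one training run per image (700 runs), which is why precomputed features are the cheaper starting point.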

Edit 1:

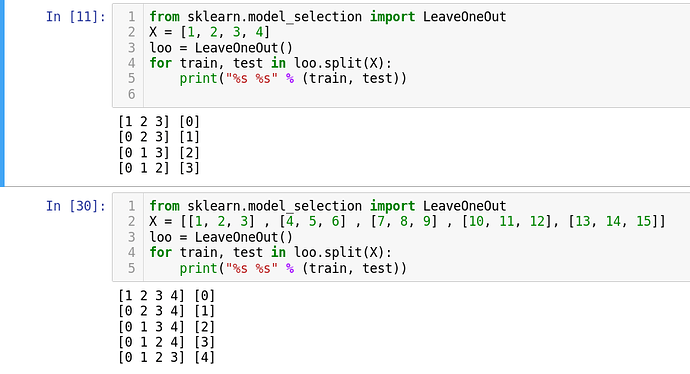

I understand that LOOCV gives me the index of the sample to hold out at each iteration, and that I could create the feature vector for each image using ResNet50 (here I created a dummy vector for each X[i], e.g. X[0] = [1, 2, 3]).
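For reference, this is what sklearn's `LeaveOneOut` yields on three dummy rows (the second and third rows are made up for illustration). Each split holds out exactly one index, and the model is trained on the rest:

```python
import numpy as np
from sklearn.model_selection import LeaveOneOut

# Dummy feature vectors, one row per image (X[0] = [1, 2, 3] as in the post)
X = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

loo = LeaveOneOut()
splits = []
for train_idx, test_idx in loo.split(X):
    # X[train_idx] trains the model; X[test_idx] is the single held-out sample
    splits.append((train_idx.tolist(), test_idx.tolist()))
    print("train:", train_idx, "test:", test_idx)
# prints:
# train: [1 2] test: [0]
# train: [0 2] test: [1]
# train: [0 1] test: [2]
```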

Edit 2:

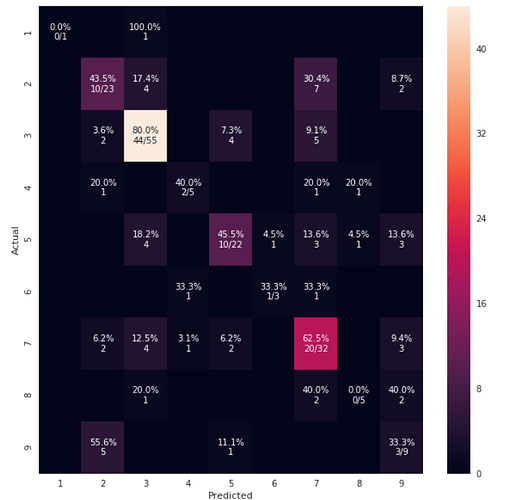

I have made some progress. I was able to draw the confusion matrix for the 9 classes (still without the label confidence scores) using sklearn's train/test split and multinomial logistic regression. However, although I can report the accuracy using sklearn's LOOCV, I do not know how to create the confusion matrix in this case.

More details are in the Stack Overflow question "Confusion Matrix for Leave-One-Out Cross Validation in sklearn".

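```python
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn import metrics, model_selection
from sklearn.linear_model import LogisticRegression

# feature_vectors: one row per image (e.g. ResNet50 features); y: class labels
loocv = model_selection.LeaveOneOut()
model = LogisticRegression()
results = model_selection.cross_val_score(model, feature_vectors, y, cv=loocv)
print("Accuracy: %.3f%% (%.3f%%)" % (results.mean() * 100.0, results.std() * 100.0))
```

which prints:

```
Accuracy: 59.615% (49.067%)
```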
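The large value in parentheses is expected with leave-one-out: each fold has a single test sample, so every fold score is either 0 or 1, and the (population) standard deviation of 0/1 scores with mean `p` is `sqrt(p * (1 - p))`. A small check, using a hypothetical 31 correct out of 52 folds, which is just one split that reproduces the printed numbers (the actual sample count is not stated here):

```python
import numpy as np

# Hypothetical LOO fold scores: 1 = held-out image classified correctly, 0 = not
results = np.array([1.0] * 31 + [0.0] * 21)

p = results.mean()
# np.std uses ddof=0 by default, which for 0/1 values equals sqrt(p * (1 - p))
print("Accuracy: %.3f%% (%.3f%%)" % (p * 100.0, results.std() * 100.0))
# prints: Accuracy: 59.615% (49.067%)
print("sqrt(p(1-p)): %.3f%%" % (np.sqrt(p * (1 - p)) * 100.0))
# prints: sqrt(p(1-p)): 49.067%
```

So the number in parentheses says little about model stability under LOO; the mean is the figure to report.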

How do I actually create the confusion matrix for LOOCV?
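One common approach, sketched here with synthetic stand-ins for `feature_vectors` and `y` (assumptions; replace them with the real data): instead of `cross_val_score`, use `cross_val_predict` with `cv=LeaveOneOut()`. It returns, for every sample, the prediction of the model trained on all the other samples, so a single confusion matrix can be built over all folds. Per-fold matrices would not be informative, since each fold tests only one image.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import LeaveOneOut, cross_val_predict

rng = np.random.default_rng(0)
# Synthetic stand-ins (assumption): replace with the real feature_vectors and y
n_samples, n_features, n_classes = 45, 32, 9
y = np.arange(n_samples) % n_classes
feature_vectors = rng.normal(size=(n_samples, n_features)) + 0.5 * y[:, None]

model = LogisticRegression(max_iter=1000)
# Each sample's prediction comes from a model trained on all *other* samples
y_pred = cross_val_predict(model, feature_vectors, y, cv=LeaveOneOut())

# One 9x9 matrix pooled over all folds: rows = true class, columns = predicted
cm = confusion_matrix(y, y_pred, labels=np.arange(n_classes))
print(cm)
print("LOO accuracy: %.3f" % accuracy_score(y, y_pred))
```

Under leave-one-out, `accuracy_score(y, y_pred)` equals the mean of the `cross_val_score` results, because each fold scores a single sample. To plot the matrix, `sns.heatmap(cm, annot=True, fmt="d")` or `metrics.ConfusionMatrixDisplay.from_predictions(y, y_pred)` (scikit-learn 1.0 and later) should work.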