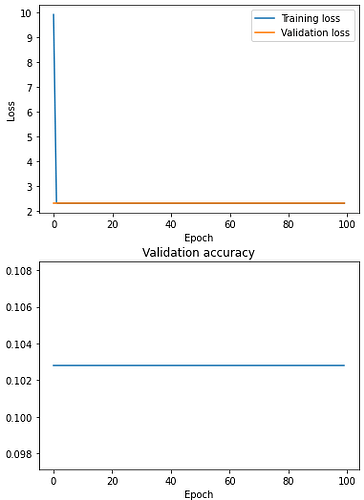

Hello guys, I am trying to build a LeNet5 network to train a classifier for MNIST dataset, but the network seems not to be learning. I have printed the gradients and they are not always zero, but very small. The loss curve as well as the accuracy curve is almost like a flat line when learning rate is small (1e-1) and behaves in a random way when learning rate is very large (1e2)

Here is my code for the model:

import torch.nn as nn

from torch.nn import functional as F

from keras.datasets import mnist

from torch.optim import SGD, Adam

from torch.utils.data import TensorDataset, DataLoader

import torch

import time

import matplotlib.pyplot as plt

class LeNet5_MNIST(nn.Module):

def __init__(self):

super().__init__()

self.conv1 = nn.Conv2d(1, 6, 5, padding=2)

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16*5*5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

x = x.unsqueeze(1)

x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))

x = F.max_pool2d(F.relu(self.conv2(x)), (2, 2))

x = x.view(-1, self.num_flat_features(x))

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

def num_flat_features(self, x):

size = x.size()[1:]

num_features = 1

for s in size:

num_features *= s

return num_features

And for training:

net = LeNet5_MNIST()

(X_train, y_train), (X_test, y_test) = mnist.load_data()

device = torch.device("cuda")

net.to(device)

n_epochs = 100

batch_size = 64

momentum = 0.9

lr = 1e-1

X_train = torch.tensor(X_train, device=device, dtype=torch.float)

y_train = torch.tensor(y_train, device=device, dtype=torch.int64)

X_test = torch.tensor(X_test, device=device, dtype=torch.float)

y_test = torch.tensor(y_test, device=device, dtype=torch.int64)

train_ds = TensorDataset(X_train, y_train)

train_dl = DataLoader(train_ds, batch_size=batch_size)

valid_ds = TensorDataset(X_test, y_test)

valid_dl = DataLoader(valid_ds, batch_size=batch_size)

optimizer = Adam(net.parameters(), lr = lr)

#optimizer = SGD(net.parameters(), lr=lr, momentum=momentum)

optimizer.zero_grad()

loss_func = nn.CrossEntropyLoss()

split_line = '-' * 50

split_line_bold = '=' * 50

start_time = time.time()

losses = []

valid_losses = []

accuracy = []

print(f"Training on {device}")

print(split_line_bold)

a = 0

for epoch in range(n_epochs):

batch_loss = []

net.train()

for xb, yb in train_dl:

y = net(xb)

loss = F.cross_entropy(y, yb)

loss.backward()

optimizer.step()

optimizer.zero_grad()

batch_loss += [loss.item()]

losses += [sum(batch_loss)/len(batch_loss)]

print(f"Epoch {epoch+1}:")

print(f"Training loss {sum(batch_loss)/len(batch_loss)}")

net.eval()

with torch.no_grad():

valid_loss = sum(loss_func(net(xb), yb) for xb, yb in valid_dl)

out = net(X_test)

y_pre = torch.argmax(out, dim=1)

acc = (y_pre == y_test).float().mean()

valid_loss /= len(valid_dl)

valid_losses += [valid_loss]

accuracy += [acc]

print(f"Validation loss: {valid_loss}")

print(f"Validation accuracy: {acc}")

print(split_line)

end_time = time.time()

Evaluation code and results:

training_time = end_time - start_time

print("Training finished in %.2f seconds" % training_time)

fig, ax = plt.subplots()

ax.plot(list(range(n_epochs)), losses, label="Training loss")

ax.plot(list(range(n_epochs)), valid_losses, label="Validation loss")

ax.set_xlabel('Epoch')

ax.set_ylabel('Loss')

ax.legend()

fig.show()

fig, ax = plt.subplots()

ax.plot(list(range(n_epochs)), accuracy)

ax.set_xlabel('Epoch')

ax.set_ylabel('Accuracy')

ax.set_title('Validation accuracy')

fig.show()