I use NVTX and Nsight when training with PyTorch, and the level of detail in the traces is outstanding. Those system-level details have been critical to my research. Kudos to the team on such great work!

My question is: how can I reuse some of this infrastructure for my own Python/CUDA project? That is, how do I get up and running with torch's NVTX scaffolding, or even just a minimal subset of it?

For example,

- I would love to have complete function tracing, where every Python/C++ function is traced all the way down, including all sub-function calls.

- The `seq` and `op_id` fields on ranges would also be great to have.
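To make the first request concrete, here is a minimal sketch of the kind of tracing I mean, limited to Python frames. It uses the standard `sys.setprofile` hook to open a range on every Python function call and close it on return. The helper name `run_traced`, the `push`/`pop` parameters, and the `seq = N` suffix are my own illustrative assumptions; the suffix only imitates the look of torch's ranges and is not how torch generates them. For real NVTX ranges I assume one would pass `torch.cuda.nvtx.range_push` and `torch.cuda.nvtx.range_pop`; here the ranges are recorded in a list so the example runs without a GPU.

```python
import functools
import itertools
import sys


def run_traced(fn, push, pop, *args, **kwargs):
    """Run fn(*args, **kwargs), opening one range per Python-level call.

    push(name) opens a range and pop() closes the innermost one, e.g.
    torch.cuda.nvtx.range_push / torch.cuda.nvtx.range_pop (assumption:
    a CUDA-enabled PyTorch build is available when those are used).
    """
    seq = itertools.count()  # hypothetical per-range sequence number
    open_ranges = 0          # ranges opened by this tracer and not yet closed

    def profiler(frame, event, arg):
        nonlocal open_ranges
        if event == "call":
            push(f"{frame.f_code.co_name}, seq = {next(seq)}")
            open_ranges += 1
        elif event == "return" and open_ranges > 0:
            pop()
            open_ranges -= 1
        # "c_call" / "c_return" events (C functions) are ignored in this sketch.

    previous = sys.getprofile()
    sys.setprofile(profiler)
    try:
        return fn(*args, **kwargs)
    finally:
        sys.setprofile(previous)
        # Close anything still open so push/pop stay balanced.
        while open_ranges > 0:
            pop()
            open_ranges -= 1


def inner(x):
    return x * 2


def outer(x):
    return inner(x) + 1


# Record ranges in a list instead of emitting NVTX markers.
events = []
result = run_traced(outer, events.append, functools.partial(events.append, "pop"), 3)
print(result)
print(events)
```

This only sees Python frames on the calling thread. It does not cover C++ functions, and it says nothing about fields like `op_id`, which is exactly the part I would like to borrow from torch.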

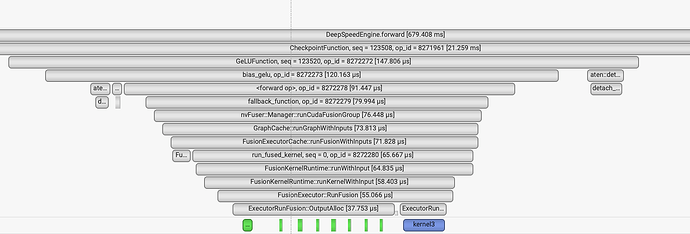

The screenshots here are representative examples. That is the level of detail I would love to have.

The tracing goes all the way down:

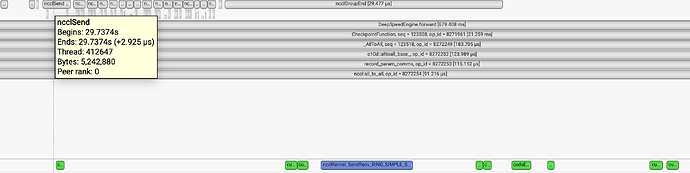

Note the bytes field on the NCCL call.