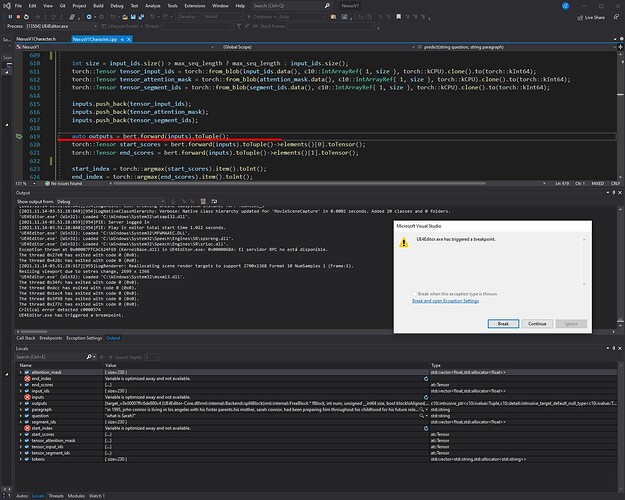

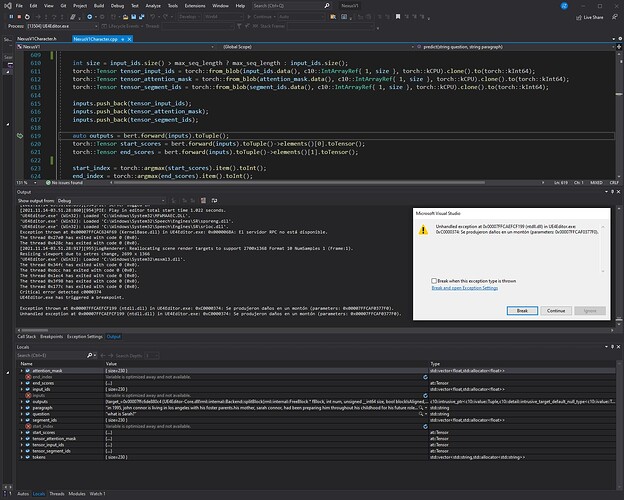

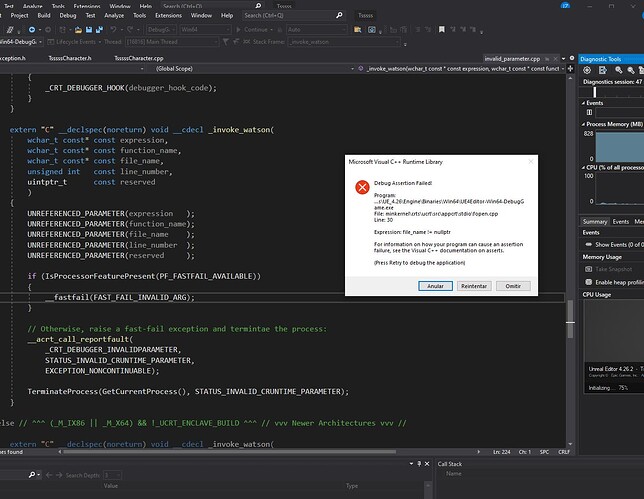

I am trying to run my BERT model in Unreal Engine with LibTorch. However, at runtime I get the following error when I call the forward function: "Variable is optimized away and not available."

I use LibTorch 1.10, Visual Studio 2019 (C++) and Unreal Engine 4.26.2. Note: if I use only LibTorch 1.10 and Visual Studio 2019 in Release mode, my program runs fine.

Could someone suggest how to overcome this runtime error?

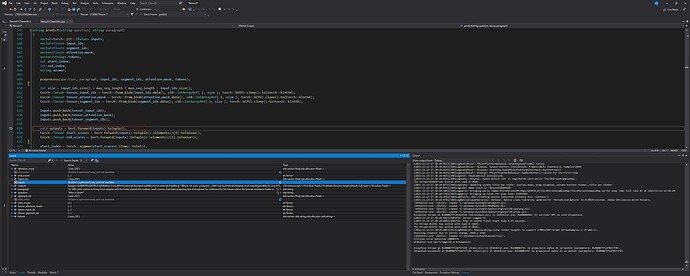

This is the function where the error occurs:

    #include <string>
    #include <vector>
    #include <torch/script.h>
    #include <torch/torch.h>

    // Defined elsewhere in the project: bert (torch::jit::script::Module),
    // max_seq_length, preprocess() and the LANGUAGE macro.

    std::string predict(std::string question, std::string paragraph)
    {
        std::vector<torch::jit::IValue> inputs;
        std::vector<int> input_ids;       // token ids from preprocess(), int32 assumed
        std::vector<int> segment_ids;
        std::vector<int> attention_mask;
        std::vector<std::string> tokens;
        int start_index;
        int end_index;
        std::string answer;

        preprocess(question, paragraph, input_ids, segment_ids, attention_mask, tokens);

        int size = input_ids.size() > max_seq_length ? max_seq_length : input_ids.size();

        // from_blob() does not copy and defaults to float, so declare the real
        // element type (int32) first; .to(kInt64) then makes an owning copy.
        auto int_opts = torch::TensorOptions().dtype(torch::kInt32);
        torch::Tensor tensor_input_ids = torch::from_blob(input_ids.data(), c10::IntArrayRef{ 1, size }, int_opts).to(torch::kInt64);
        torch::Tensor tensor_attention_mask = torch::from_blob(attention_mask.data(), c10::IntArrayRef{ 1, size }, int_opts).to(torch::kInt64);
        torch::Tensor tensor_segment_ids = torch::from_blob(segment_ids.data(), c10::IntArrayRef{ 1, size }, int_opts).to(torch::kInt64);

        inputs.push_back(tensor_input_ids);
        inputs.push_back(tensor_attention_mask);
        inputs.push_back(tensor_segment_ids);

        // Run the model once and take the start/end scores from the returned tuple.
        auto outputs = bert.forward(inputs).toTuple();
        torch::Tensor start_scores = outputs->elements()[0].toTensor();
        torch::Tensor end_scores = outputs->elements()[1].toTensor();

        start_index = torch::argmax(start_scores).item().toInt();
        end_index = torch::argmax(end_scores).item().toInt();

        for (int i = start_index; i <= end_index; i++) {
            if (tokens[i].substr(0, 2) == "##") {
                // WordPiece continuation: append without a space.
                answer += tokens[i].substr(2);
            } else {
                answer += " " + tokens[i];
            }
        }

        // "[CLS]" is 5 characters long and follows the leading space.
        if (answer.empty() || answer.substr(1, 5) == "[CLS]") {
    #if LANGUAGE
            answer = "Unable to find the answer to your question.";
    #else
            // Spanish: "Sorry, I can't answer your question."
            answer = "Lo siento, no puedo responder a tu pregunta.";
    #endif
        }

        return answer;
    }
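To check the answer-reconstruction step outside Unreal Engine, the token-merging loop at the end of `predict` can be pulled into a standalone function that needs no LibTorch. This is a minimal sketch, not part of the original code: `decode_span` and `is_no_answer` are hypothetical names, and it assumes `tokens` holds BERT WordPiece tokens where continuation pieces start with `##`, as in the loop above. It also adds a bounds check, so a prediction with `end_index < start_index` or an index past the token list yields an empty answer instead of reading out of range.

```cpp
#include <string>
#include <vector>

// Hypothetical helper: rebuilds the answer text from WordPiece tokens
// tokens[start_index..end_index], mirroring the loop in predict().
std::string decode_span(const std::vector<std::string>& tokens,
                        int start_index, int end_index) {
    std::string answer;
    // Reject empty or out-of-range spans instead of indexing past the vector.
    if (start_index < 0 || end_index < start_index ||
        end_index >= static_cast<int>(tokens.size())) {
        return answer;
    }
    for (int i = start_index; i <= end_index; ++i) {
        const std::string& tok = tokens[static_cast<std::size_t>(i)];
        if (tok.rfind("##", 0) == 0) {
            // Continuation piece: glue onto the previous word without a space.
            answer += tok.substr(2);
        } else {
            // New word: separate it with a space (so the result starts with one).
            answer += " " + tok;
        }
    }
    return answer;
}

// Hypothetical helper: true when nothing was decoded or the span starts at
// the [CLS] token, which the original code treats as "no answer".
bool is_no_answer(const std::string& answer) {
    // compare(0, 6, ...) looks at the first 6 characters: the leading space plus "[CLS]".
    return answer.empty() || answer.compare(0, 6, " [CLS]") == 0;
}
```

For example, with tokens `{"[CLS]", "who", "is", "em", "##bed", "##ding", "[SEP]"}`, `decode_span(tokens, 3, 5)` returns `" embedding"`, while `decode_span(tokens, 0, 1)` returns `" [CLS] who"`, which `is_no_answer` reports as no answer.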