Hello PyTorch Team,

I have an application running on LibTorch + Torch-TensorRT.

For this, I create the input by first allocating a tensor of shape BCHW on the GPU and then writing values into its pixels. However, this is very slow, slower than copying data from a NumPy array in Python.
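To make the alternative I am considering concrete, here is a minimal sketch in plain C++ (no LibTorch calls) that fills a contiguous host staging buffer in BCHW order, so that the whole batch could be uploaded in one copy instead of being written into the GPU tensor pixel by pixel. The helper names `bchw_offset` and `write_image_bchw`, the interleaved HWC `uint8` source image, and the division by 255 are assumptions made for illustration only; they are not taken from my actual application.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: linear offset of element (b, c, h, w) in a
// contiguous buffer laid out as BCHW (batch, channel, height, width).
inline std::size_t bchw_offset(std::size_t b, std::size_t c,
                               std::size_t h, std::size_t w,
                               std::size_t C, std::size_t H, std::size_t W) {
    assert(c < C && h < H && w < W);
    return ((b * C + c) * H + h) * W + w;
}

// Hypothetical helper: copy one interleaved HWC uint8 image (an assumed
// input format) into batch slot `b` of a planar BCHW float staging buffer
// that lives in host memory. Values are scaled to [0, 1].
inline void write_image_bchw(std::vector<float>& staging,
                             const std::vector<unsigned char>& hwc,
                             std::size_t b,
                             std::size_t C, std::size_t H, std::size_t W) {
    assert(hwc.size() == H * W * C);
    assert(staging.size() >= (b + 1) * C * H * W);
    for (std::size_t h = 0; h < H; ++h) {
        for (std::size_t w = 0; w < W; ++w) {
            for (std::size_t c = 0; c < C; ++c) {
                // Source is interleaved (HWC); destination is planar (CHW).
                staging[bchw_offset(b, c, h, w, C, H, W)] =
                    hwc[(h * W + w) * C + c] / 255.0f;
            }
        }
    }
}
```

With this layout the batch is assembled entirely on the host and would be moved to the GPU once. My assumption is that the buffer could then be wrapped with `torch::from_blob` and transferred with `.to(torch::kCUDA)`, but whether that is actually the fastest route is exactly what I am asking.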

So I was wondering whether using pinned memory or zero-copy memory would help.

In any case, I would like to know how to create tensors backed by pinned or zero-copy memory.

Also, if I pre-allocate such an array on the GPU using the plain CUDA APIs, is it possible to copy it into a LibTorch tensor later?

Please help me understand this scenario.

Summary: what is the fastest way to create data on, or copy data to, the GPU in LibTorch?

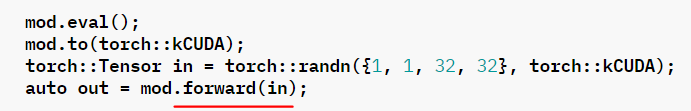

Apart from this, a remark: the Torch-TensorRT documentation seems to be heavily outdated.

The snippet in the screenshot above is on the main documentation page, and it would probably not work because the input should be of type IValue, as other pages of the documentation show.

Please update the documentation if this is indeed the case.

Best regards,

Sambit