Here is my full code.

data_dir = "../cocoapi/images"

ann_dir = "../cocoapi/annotations"

#Define transforms for the data

normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                 std=[0.229, 0.224, 0.225])

train_transforms = transforms.Compose([transforms.RandomResizedCrop(224),
                                       transforms.RandomHorizontalFlip(),
                                       transforms.ToTensor(),
                                       normalize])

val_transforms = transforms.Compose([transforms.RandomResizedCrop(224),
                                     transforms.RandomHorizontalFlip(),
                                     transforms.ToTensor(),
                                     normalize])

#Pass transforms in here, then run the next cells to see how the transforms look

train_data = torchvision.datasets.CocoDetection(data_dir + '/train2017', ann_dir + '/instances_train2017.json', transform=train_transforms, target_transform=None, transforms=None)

val_data= torchvision.datasets.CocoDetection(data_dir + '/val2017', ann_dir + '/instances_val2017.json', transform=val_transforms, target_transform=None, transforms=None)

train_loader = torch.utils.data.DataLoader(train_data, batch_size= 64 , shuffle= True)

val_loader = torch.utils.data.DataLoader(val_data, batch_size= 64 , shuffle= True)

print('Num training images: ', len(train_data))

print('Num validation images: ', len(val_data))

loading annotations into memory…

Done (t=12.49s)

creating index…

index created!

loading annotations into memory…

Done (t=0.43s)

creating index…

index created!

Num training images: 118287

Num validation images: 5000

model = models.resnet50(pretrained= True)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(device)

#Freeze our feature parameters

for param in model.parameters():
    param.requires_grad = False

classifier = nn.Sequential(
    nn.Linear(2048, 512),
    nn.ReLU(),
    nn.Dropout(p=0.2),
    nn.Linear(512, 80),
    nn.LogSoftmax(dim=1))

model.fc= classifier

criterion= nn.NLLLoss()

optimizer= optim.Adam(model.fc.parameters(), lr=0.003)

model.to(device);

cpu

epochs=25

steps=0

running_loss=0

print_every=5

for epoch in range(epochs):
    for images, labels in train_loader:
        steps += 1
        images, labels = images.to(device), labels.to(device)
        optimizer.zero_grad()
        logps = model(images)
        loss = criterion(logps, labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()

        if steps % print_every == 0:
            model.eval()
            val_loss = 0
            accuracy = 0

            for images, labels in val_loader:
                images, labels = images.to(device), labels.to(device)
                logps = model(images)
                loss = criterion(logps, labels)
                val_loss += loss.item()

                #Calculate our accuracy
                ps = torch.exp(logps)
                top_ps, top_class = ps.topk(1, dim=1)
                equality = top_class == labels.view(*top_class.shape)
                accuracy += torch.mean(equality.type(torch.FloatTensor))

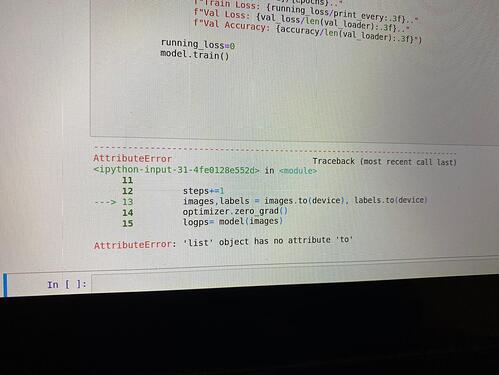

            print(f"Epoch: {epoch+1}/{epochs}.."
                  f"Train Loss: {running_loss/print_every:.3f}.."
                  f"Val Loss: {val_loss/len(val_loader):.3f}.."
                  f"Val Accuracy: {accuracy/len(val_loader):.3f}")

            running_loss = 0
            model.train()

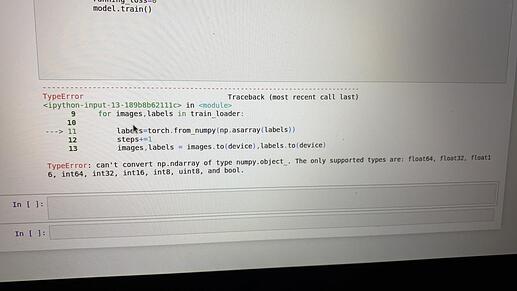

The error occurs on this line: images, labels = images.to(device), labels.to(device)

labels is a list, and a list object has no attribute 'to'.
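A minimal sketch of what seems to be happening, under the assumption that each CocoDetection sample is an (image, target) pair whose target is a list of annotation dicts rather than a single class index. The names coco_collate and category_ids are hypothetical helpers, and the fake batch is made-up data that uses strings in place of image tensors so the sketch runs without the dataset.

```python
# Sketch only: fake samples stand in for CocoDetection items (hypothetical data).
# Each sample is (image, target), where target is a list of annotation dicts.


def coco_collate(batch):
    """Group (image, target) pairs without trying to stack the targets.

    Returns a tuple of images and a tuple of per-image annotation lists.
    """
    images, targets = zip(*batch)
    return images, targets


def category_ids(target):
    """Collect the distinct category ids in one image's annotation list."""
    return sorted({ann["category_id"] for ann in target})


# Two fake samples; the strings are placeholders for image tensors.
fake_batch = [
    ("image_0", [{"category_id": 18, "bbox": [10, 20, 30, 40]},
                 {"category_id": 1, "bbox": [5, 5, 50, 80]}]),
    ("image_1", []),  # an image can have no annotations at all
]

images, targets = coco_collate(fake_batch)

# A plain Python tuple or list has no `.to` method, which matches the error.
has_to = hasattr(targets, "to")

# One list of category ids per image; lengths differ from image to image.
ids_per_image = [category_ids(t) for t in targets]
print(has_to, ids_per_image)
```

If this assumption holds, a function like coco_collate could be passed to the DataLoader through its collate_fn argument, and only the images would be moved with .to(device). The labels would still need converting to a tensor before nn.NLLLoss can use them, since that loss expects one class index per image while a COCO image can contain several categories.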