Hi Guys,

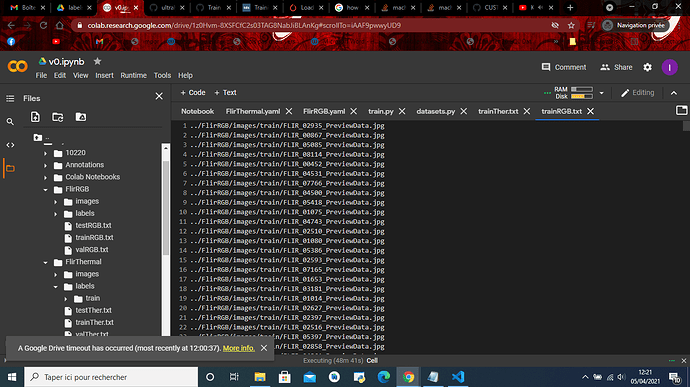

so, I want to train my model on two datasets (RGB and thermal images)  , and I want to pick batches in the same order with shuffle=True.

, and I want to pick batches in the same order with shuffle=True.

i already have a function create_dataloader :

def create_dataloader(path, imgsz, batch_size, stride, opt, hyp=None, augment=False, cache=False, pad=0.0, rect=False,

rank=-1, world_size=1, workers=8, image_weights=False, quad=False, prefix='', shuffle=True):

# Make sure only the first process in DDP process the dataset first, and the following others can use the cache

with torch_distributed_zero_first(rank):

dataset = LoadImagesAndLabels(path, imgsz, batch_size,

augment=augment, # augment images

hyp=hyp, # augmentation hyperparameters

rect=rect, # rectangular training

cache_images=cache,

single_cls=opt.single_cls,

stride=int(stride),

pad=pad,

image_weights=image_weights,

prefix=prefix,

shuffle=shuffle)

batch_size = min(batch_size, len(dataset))

nw = min([os.cpu_count() // world_size, batch_size if batch_size > 1 else 0, workers]) # number of workers

sampler = torch.utils.data.distributed.DistributedSampler(dataset) if rank != -1 else None

loader = torch.utils.data.DataLoader if image_weights else InfiniteDataLoader

# Use torch.utils.data.DataLoader() if dataset.properties will update during training else InfiniteDataLoader()

dataloader = loader(dataset,

batch_size=batch_size,

num_workers=nw,

sampler=sampler,

pin_memory=True,

collate_fn=LoadImagesAndLabels.collate_fn4 if quad else LoadImagesAndLabels.collate_fn)

return dataloader, dataset

i’m trying to create 2 data loader for each datasets like that :

Trainloader

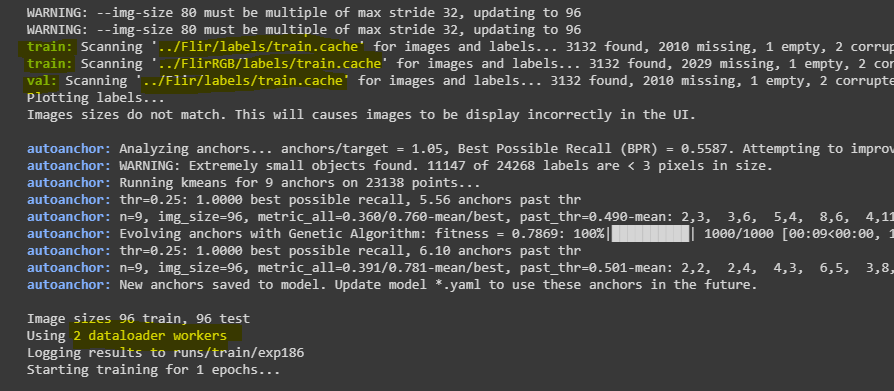

dataloader1, dataset1 = create_dataloader("../Flir", imgsz, batch_size, gs, opt,

hyp=hyp, augment=True, cache=opt.cache_images, rect=opt.rect, rank=rank,

world_size=opt.world_size, workers=opt.workers,

image_weights=opt.image_weights, quad=opt.quad, prefix=colorstr('train: '), shuffle=True)

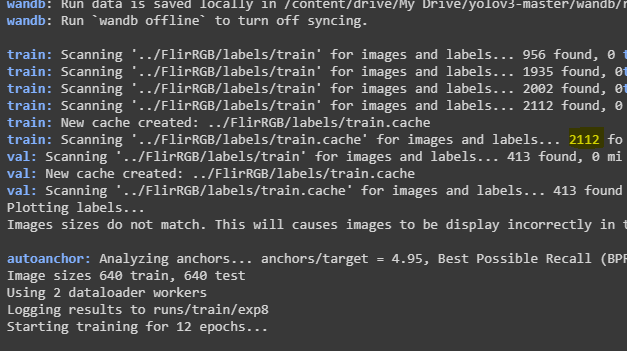

dataloader2, dataset2 = create_dataloader("../FlirRGB", imgsz, batch_size, gs, opt,

hyp=hyp, augment=True, cache=opt.cache_images, rect=opt.rect, rank=rank,

world_size=opt.world_size, workers=opt.workers,

image_weights=opt.image_weights, quad=opt.quad, prefix=colorstr('train: '), shuffle=True)

But i didn’t have a right solution.

can you help me please.

, and I want to pick batches in the same order with shuffle=True.

, and I want to pick batches in the same order with shuffle=True.