I'm implementing an algorithm that requires a lot of model evaluations, and I want to parallelize those evaluations across CPU cores. The code segment I want to parallelize is here (simplified for readability):

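```python
from torch.utils.data import DataLoader

def test_img(network, datatest):
    network.eval()  # evaluation mode: disables dropout, uses running batch-norm stats
    # args.bs is the batch size from the script's parsed command-line arguments
    data_loader = DataLoader(datatest, batch_size=args.bs)
    for idx, (data, target) in enumerate(data_loader):
        result = network(data)  # forward pass for one batch
```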

One evaluation takes around 1.5 s on the CPU. The networks being evaluated differ, but the dataset is the same. I tried to parallelize this with `joblib.Parallel`:

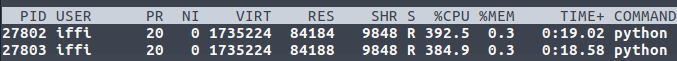

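```python
from joblib import Parallel, delayed

# one test_img call per network, dispatched to a pool of num_cpu threads
results = Parallel(n_jobs=num_cpu, prefer="threads")(
    delayed(test_img)(network_lst[i], dataset) for i in range(N)
)
```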
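A commonly suggested alternative to thread-based jobs is to run each evaluation in its own worker process and give every worker a small intra-op thread budget, so that N workers together do not oversubscribe the cores that PyTorch's own thread pool would otherwise use. This is a sketch under assumptions, not a tested fix for this exact setup: `threads_per_worker`, `_init_worker`, `evaluate_one` and `evaluate_all` are hypothetical names, and the networks and dataset are assumed to be picklable. `torch.set_num_threads` and `concurrent.futures.ProcessPoolExecutor` are real APIs.

```python
import os
from concurrent.futures import ProcessPoolExecutor


def threads_per_worker(n_workers, n_cores=None):
    """Intra-op threads each worker can use without oversubscribing the CPU."""
    n_cores = n_cores or os.cpu_count() or 1
    return max(1, n_cores // max(1, n_workers))


def _init_worker(n_threads):
    # Runs once in every worker process, before it receives any task.
    # torch is imported inside functions so the helpers above work without it.
    import torch
    torch.set_num_threads(n_threads)


def evaluate_one(network, dataset, batch_size):
    """Hypothetical process-friendly variant of test_img that returns its outputs."""
    import torch
    from torch.utils.data import DataLoader

    network.eval()
    outputs = []
    with torch.no_grad():  # inference only: no autograd graph is recorded
        for data, _target in DataLoader(dataset, batch_size=batch_size):
            outputs.append(network(data))
    return outputs


def evaluate_all(networks, dataset, batch_size, n_workers):
    """Evaluate each network in a worker process; results keep the input order."""
    n_threads = threads_per_worker(n_workers)
    with ProcessPoolExecutor(max_workers=n_workers,
                             initializer=_init_worker,
                             initargs=(n_threads,)) as pool:
        futures = [pool.submit(evaluate_one, net, dataset, batch_size)
                   for net in networks]
        return [f.result() for f in futures]
```

It would be called as `evaluate_all(network_lst, dataset, args.bs, n_workers=num_cpu)` from inside an `if __name__ == "__main__":` block, because platforms that spawn workers re-import the main module. If fork-based workers hang after PyTorch has already run in the parent, passing `mp_context=multiprocessing.get_context("spawn")` to `ProcessPoolExecutor` is a common workaround. The joblib analogue is `prefer="processes"`. Whether any of this helps depends on how well a single evaluation already uses the cores, which `torch.get_num_threads()` can hint at.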

However, there seems to be no improvement: 10 evaluations still take about 15 s, the same as running them one after another. When I time only the `result = network(data)` line, it accounts for 8 s of that. So I think neither the network evaluation nor the data loading is being parallelized. Is there a way to place a network and its data on a specific CPU core, something like `data.to('cpu:0')`? Is it even possible to parallelize model evaluation across CPU cores?
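To my knowledge, PyTorch does not expose a per-core CPU device; CPU tensors all live on `torch.device('cpu')`, and which core runs what is left to the operating system. A sketch of the OS-level alternative, assuming Linux (`os.sched_setaffinity` and `os.sched_getaffinity` are not available on macOS or Windows), is to hand each worker process a disjoint set of cores and match its PyTorch thread count to that set. `partition_cores` and `pin_current_process` are hypothetical helpers, not part of any library.

```python
import os


def partition_cores(cores, n_workers):
    """Split CPU core ids into at most n_workers disjoint, near-equal groups."""
    if n_workers < 1:
        raise ValueError("n_workers must be at least 1")
    cores = sorted(cores)
    if not cores:
        return []
    n_workers = min(n_workers, len(cores))
    base, extra = divmod(len(cores), n_workers)
    groups, start = [], 0
    for w in range(n_workers):
        size = base + (1 if w < extra else 0)  # first `extra` groups get one more core
        groups.append(set(cores[start:start + size]))
        start += size
    return groups


def pin_current_process(core_group):
    """Restrict the calling process to core_group where the OS supports it (Linux).

    Returns the group size, which a worker would then pass to
    torch.set_num_threads so its thread pool matches its core set.
    """
    if hasattr(os, "sched_setaffinity"):
        os.sched_setaffinity(0, core_group)  # pid 0 means "this process"
    return len(core_group)
```

The parent would compute `groups = partition_cores(os.sched_getaffinity(0), n_workers)` and each worker `i` would call `torch.set_num_threads(pin_current_process(groups[i]))` once at start-up, before its first forward pass.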

Any suggestions are appreciated!