load_state_dict fails in a strange way when loading the weights of submodules registered with setattr.

Specifically, my model registers the following submodules with setattr:

    for freq_bias_u, freq_bias_v in zip(self.freq_biases_u, self.freq_biases_v):
        setattr(self, f'upsampler_{freq_bias_u:.4f}_{freq_bias_v:.4f}_r', up(num_feat=num_feat, num_out_ch=num_out_ch))
        setattr(self, f'upsampler_{freq_bias_u:.4f}_{freq_bias_v:.4f}_i', up(num_feat=num_feat, num_out_ch=num_out_ch))

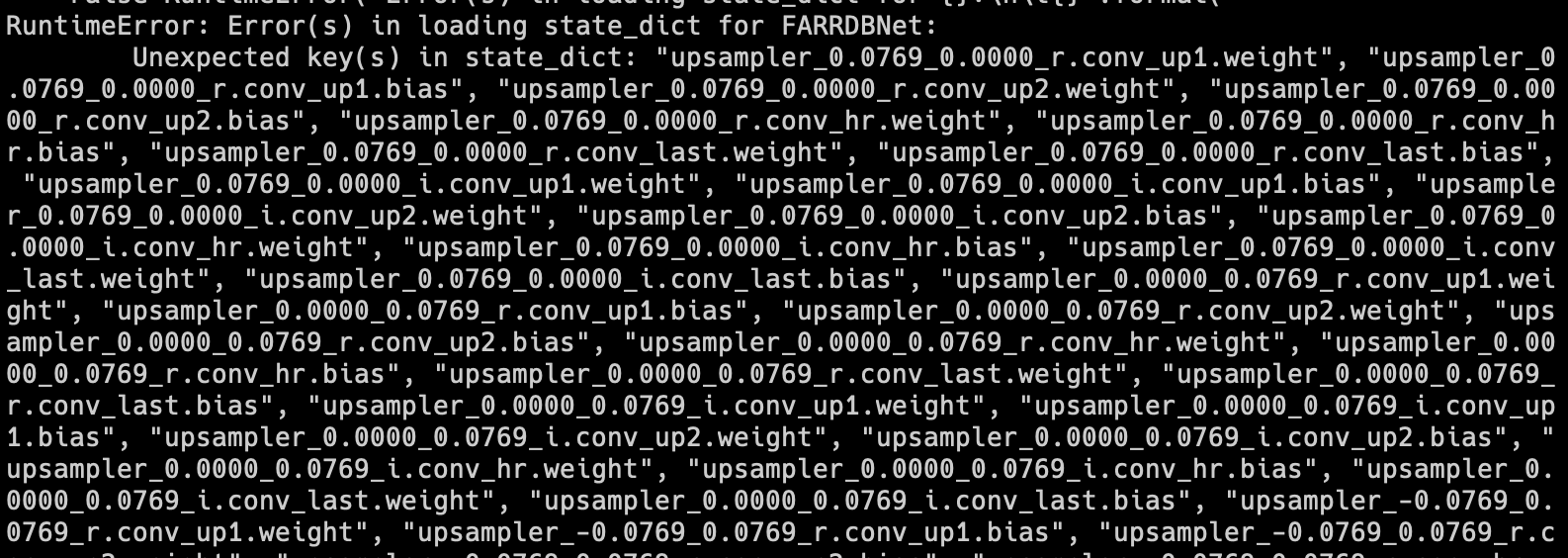

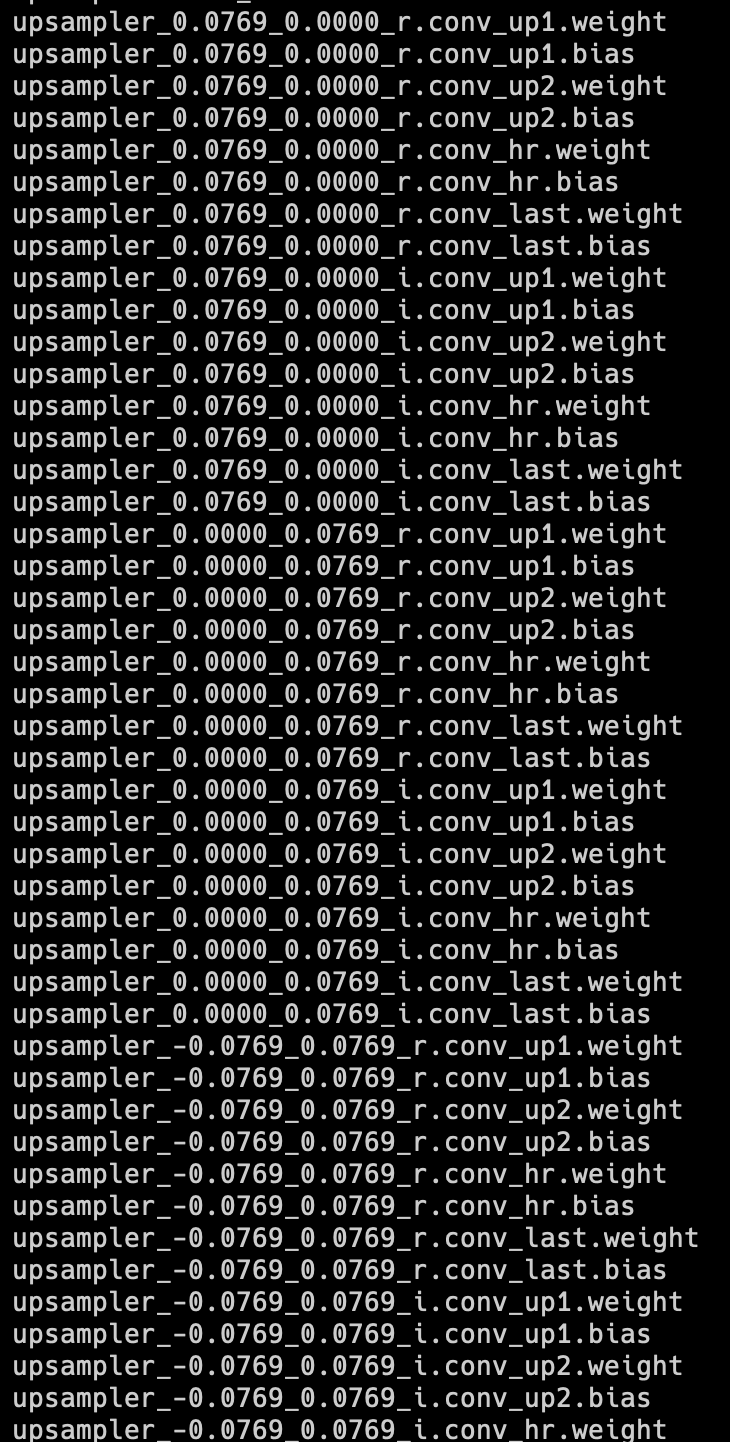

However, when I load the saved model from the checkpoint, I encounter the following error:

I’m sure my model has these parameters, as confirmed by printing the model's named_parameters().
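
One possible cause, which nothing in this post confirms: the `:.4f` format puts a `.` into each attribute name (for example `upsampler_0.1000_0.3000_r`), and state_dict keys use `.` as the separator between a submodule name and its parameter name. `add_module` rejects names containing `.`, but plain `setattr` does not check. The sketch below keeps the same naming scheme and swaps the `.` for `p`. The helper `module_name`, the class `Net`, and the use of `nn.Conv2d` in place of `up` are assumptions made for illustration only.

```python
from torch import nn


def module_name(u: float, v: float, suffix: str) -> str:
    # Hypothetical helper: same name pattern as in the post, with "." replaced
    # by "p" so the name cannot be confused with the state_dict key separator.
    return f"upsampler_{u:.4f}_{v:.4f}_{suffix}".replace(".", "p")


class Net(nn.Module):
    # Assumed stand-in for the model in the post.
    def __init__(self, freq_biases_u, freq_biases_v, num_feat=4, num_out_ch=3):
        super().__init__()
        for u, v in zip(freq_biases_u, freq_biases_v):
            for suffix in ("r", "i"):
                # nn.Conv2d is only a placeholder for the post's `up` module.
                layer = nn.Conv2d(num_feat, num_out_ch, kernel_size=3, padding=1)
                setattr(self, module_name(u, v, suffix), layer)


def round_trip():
    # Save from one instance and load into a fresh one (strict loading is the default).
    src = Net([0.1, 0.25], [0.3, 0.5])
    dst = Net([0.1, 0.25], [0.3, 0.5])
    return dst.load_state_dict(src.state_dict())


if __name__ == "__main__":
    result = round_trip()
    print(result.missing_keys, result.unexpected_keys)
```

If this is indeed the cause, a checkpoint saved under the old names would still hold keys with the dotted names, so its keys would need to be renamed the same way before loading.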