Hi All

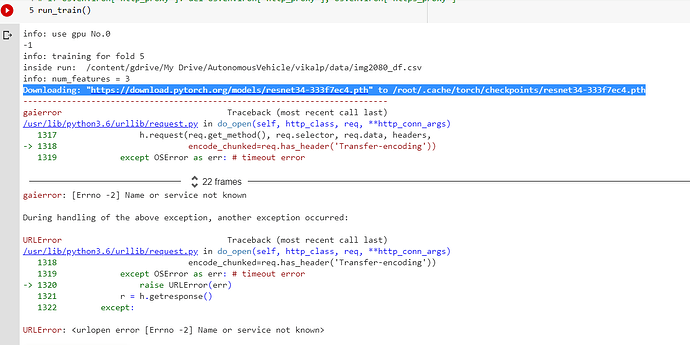

I am very new to deep learning and PyTorch. I am trying to load the resnet34 model, and it fails with a 403 Forbidden error. I am using Google Colab notebooks.

```python
import torchvision

resnet = torchvision.models.resnet34(pretrained=True)
```

```
Downloading: "https://download.pytorch.org/models/resnet34-333f7ec4.pth" to /root/.cache/torch/checkpoints/resnet34-333f7ec4.pth
---------------------------------------------------------------------------
HTTPError                                 Traceback (most recent call last)
<ipython-input-16-9b84e690c25c> in <module>()
      1 get_ipython().system('rm -rf /root/.cache/torch')
----> 2 resnet = torchvision.models.resnet34(pretrained=True)

/usr/local/lib/python3.6/dist-packages/torchvision/models/resnet.py in resnet34(pretrained, progress, **kwargs)
    247 """
    248 return _resnet('resnet34', BasicBlock, [3, 4, 6, 3], pretrained, progress,
--> 249 **kwargs)
    250
    251

/usr/local/lib/python3.6/dist-packages/torchvision/models/resnet.py in _resnet(arch, block, layers, pretrained, progress, **kwargs)
    221 if pretrained:
    222 state_dict = load_state_dict_from_url(model_urls[arch],
--> 223 progress=progress)
    224 model.load_state_dict(state_dict)
    225 return model

/usr/local/lib/python3.6/dist-packages/torch/hub.py in load_state_dict_from_url(url, model_dir, map_location, progress, check_hash)
    490 sys.stderr.write('Downloading: "{}" to {}\n'.format(url, cached_file))
    491 hash_prefix = HASH_REGEX.search(filename).group(1) if check_hash else None
--> 492 download_url_to_file(url, cached_file, hash_prefix, progress=progress)
    493
    494 # Note: extractall() defaults to overwrite file if exists. No need to clean up beforehand.

/usr/local/lib/python3.6/dist-packages/torch/hub.py in download_url_to_file(url, dst, hash_prefix, progress)
    389 # We use a different API for python2 since urllib(2) doesn't recognize the CA
    390 # certificates in older Python
--> 391 u = urlopen(url)
    392 meta = u.info()
    393 if hasattr(meta, 'getheaders'):

/usr/lib/python3.6/urllib/request.py in urlopen(url, data, timeout, cafile, capath, cadefault, context)
    221 else:
    222 opener = _opener
--> 223 return opener.open(url, data, timeout)
    224
    225 def install_opener(opener):

/usr/lib/python3.6/urllib/request.py in open(self, fullurl, data, timeout)
    530 for processor in self.process_response.get(protocol, []):
    531 meth = getattr(processor, meth_name)
--> 532 response = meth(req, response)
    533
    534 return response

/usr/lib/python3.6/urllib/request.py in http_response(self, request, response)
    640 if not (200 <= code < 300):
    641 response = self.parent.error(
--> 642 'http', request, response, code, msg, hdrs)
    643
    644 return response

/usr/lib/python3.6/urllib/request.py in error(self, proto, *args)
    568 if http_err:
    569 args = (dict, 'default', 'http_error_default') + orig_args
--> 570 return self._call_chain(*args)
    571
    572 # XXX probably also want an abstract factory that knows when it makes

/usr/lib/python3.6/urllib/request.py in _call_chain(self, chain, kind, meth_name, *args)
    502 for handler in handlers:
    503 func = getattr(handler, meth_name)
--> 504 result = func(*args)
    505 if result is not None:
    506 return result

/usr/lib/python3.6/urllib/request.py in http_error_default(self, req, fp, code, msg, hdrs)
    648 class HTTPDefaultErrorHandler(BaseHandler):
    649 def http_error_default(self, req, fp, code, msg, hdrs):
--> 650 raise HTTPError(req.full_url, code, msg, hdrs, fp)
    651
    652 class HTTPRedirectHandler(BaseHandler):

HTTPError: HTTP Error 403: Forbidden
```
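The last frames show that `torch.hub`'s `download_url_to_file` calls a plain `urlopen(url)`, and urllib's default handler raises `HTTPError` for any status outside 200 to 299. A minimal standard-library sketch for requesting the same URL outside torchvision, so the status code is visible directly (the `probe` helper is hypothetical, not part of torch or torchvision):

```python
import urllib.error
import urllib.request


def probe(url, timeout=10.0):
    """Return the HTTP status code for url.

    A 2xx response returns its code; a non-2xx response makes urllib raise
    HTTPError (the same path as in the traceback), whose code is returned instead.
    Other failures (DNS, timeouts) are not caught and propagate to the caller.
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.getcode()
    except urllib.error.HTTPError as err:
        return err.code
```

For example, `probe("https://download.pytorch.org/models/resnet34-333f7ec4.pth")` should return 200 once the file is downloadable again; if it still returns 403, the refusal comes from the server rather than from anything in the notebook.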

Hope we can download the model again soon…
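If a copy of `resnet34-333f7ec4.pth` can be obtained some other way, `torch.hub` can be pointed at it. In the torch version shown in the traceback, `load_state_dict_from_url` only downloads when the file is missing from its cache directory (here `/root/.cache/torch/checkpoints`), and with `check_hash` it compares the file's SHA-256 digest against the hex prefix in the file name, extracted with `HASH_REGEX`. A minimal standard-library sketch of that check plus copying the file into the cache; the helper names are hypothetical, and the regex is assumed to match the one in `torch/hub.py` for this version:

```python
import hashlib
import os
import re
import shutil

# Assumed to mirror torch.hub's HASH_REGEX: pulls "333f7ec4" out of "resnet34-333f7ec4.pth".
HASH_REGEX = re.compile(r"-([a-f0-9]*)\.")


def sha256_prefix_matches(path):
    """True if the file's SHA-256 hex digest starts with the prefix embedded in its name."""
    match = HASH_REGEX.search(os.path.basename(path))
    if match is None:
        raise ValueError("no hash prefix in file name: %s" % path)
    sha256 = hashlib.sha256()
    with open(path, "rb") as f:
        # Hash in 1 MiB chunks so large checkpoints are not read into memory at once.
        for chunk in iter(lambda: f.read(1 << 20), b""):
            sha256.update(chunk)
    return sha256.hexdigest().startswith(match.group(1))


def install_into_cache(path, model_dir):
    """Copy a verified checkpoint into model_dir under its original file name."""
    if not sha256_prefix_matches(path):
        raise RuntimeError("hash mismatch for %s" % path)
    os.makedirs(model_dir, exist_ok=True)
    dst = os.path.join(model_dir, os.path.basename(path))
    shutil.copyfile(path, dst)
    return dst
```

For example, `install_into_cache("resnet34-333f7ec4.pth", "/root/.cache/torch/checkpoints")`, then rerun `torchvision.models.resnet34(pretrained=True)` without the `rm -rf /root/.cache/torch` line, which would delete the cached file again. Alternatively, as the `_resnet` frame shows, `pretrained=True` just builds the model and calls `model.load_state_dict(state_dict)`, so `resnet34(pretrained=False)` followed by `model.load_state_dict(torch.load(path))` loads a local file directly.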