I am using a ResNet18 in a continual learning setting, where the model is trained on a sequence of tasks: after training on task_1, we take the trained weights and start training on task_2, and so on. I would like to be able to start training from a specific task. That is, using the checkpoint of a model trained on task_n together with the saved coreset information, I load the checkpoint and start training from task_n+1. I expect to see exactly the same results as when I continue training from task_n to task_n+1 in a single run, because the seeds are set and determinism is on. However, I see slightly different results when plotting accuracy and loss on the training and test sets. When I run the full experiment twice, both times from task_0 to task_n, I get identical results.

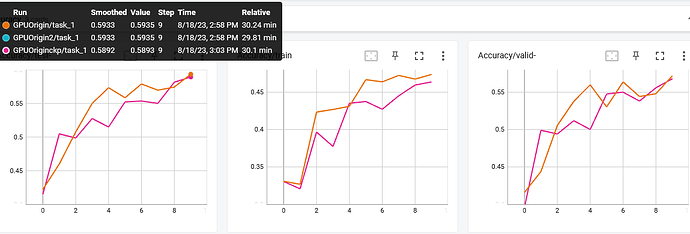

In the figure above, the two runs GPUoriginal and GPUoriginal2 show identical results on task_1 (we start from task_0). GPUoriginalckp, which starts from the task_0 checkpoint of GPUoriginal and its updated memory, shows different performance.

I checked the model weights at the start of task_1 for GPUoriginal and GPUoriginalckp, and they were the same. All the seeds are set as well and I’m running all the experiments on the same hardware.
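
One thing I have not ruled out is the state of the random number generators at the start of task_1. The sketch below is only an illustration of that concern, not my training code: it uses Python's stdlib `random` as a stand-in for the framework's generators, and `train_task` and `SEED` are hypothetical names. It compares a continuous run against a resume that re-seeds and a resume that restores the saved generator state.

```python
import random

SEED = 0  # hypothetical seed, stands in for the seed used in the experiments


def train_task(rng, steps=5):
    # Stand-in for one task: consumes random numbers the way shuffling,
    # augmentation, or coreset sampling would during training.
    return [rng.random() for _ in range(steps)]


# Continuous run: task_0 followed by task_1 on the same generator.
rng = random.Random(SEED)
train_task(rng)                      # task_0 advances the generator
state_after_task0 = rng.getstate()   # what a checkpoint could also store
continuous_task1 = train_task(rng)

# Resume A: only re-seed before task_1 (generator starts from scratch).
reseeded_task1 = train_task(random.Random(SEED))

# Resume B: restore the generator state saved at the end of task_0.
rng_b = random.Random()
rng_b.setstate(state_after_task0)
restored_task1 = train_task(rng_b)

print(continuous_task1 == reseeded_task1)  # False: the random streams differ
print(continuous_task1 == restored_task1)  # True: the stream continues exactly
```

If something similar applies to my setup, identical weights and identical seeds would not be enough on their own, since the generators in the continuous run have already been advanced by task_0.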

Is there anything else I need to consider? I expect the results to be identical.

I appreciate any help.