KanZa

July 20, 2022, 8:04am

1

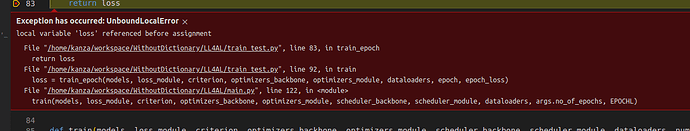

It gives me this error: local variable 'loss' referenced before assignment

    def train_epoch(models, loss_module, criterion, optimizers_backbone, optimizers_module, dataloaders, epoch, epoch_loss):
        loss_module.train()
        global iters

        for data in tqdm(dataloaders['train'], leave=False, total=len(dataloaders['train'])):
            with torch.cuda.device(CUDA_VISIBLE_DEVICES):
                inputs = data[0].cuda()
                labels = data[1].cuda()
            iters += 1

            optimizers_backbone.zero_grad()
            optimizers_module.zero_grad()

            scores, _, features = models(inputs)
            target_loss = criterion(scores, labels)

            if epoch > epoch_loss:
                features[0] = features[0].detach()
                features[1] = features[1].detach()
                features[2] = features[2].detach()
                features[3] = features[3].detach()

            pred_loss = loss_module(features)
            pred_loss = pred_loss.view(pred_loss.size(0))

            m_module_loss = LossPredLoss(pred_loss, target_loss, margin=MARGIN)
            m_backbone_loss = torch.sum(target_loss) / target_loss.size(0)
            loss = m_backbone_loss + WEIGHT * m_module_loss

            loss.backward()
            optimizers_backbone.step()
            optimizers_module.step()

        return loss

thecho7

July 20, 2022, 8:20am

2

Which line raises the error? If it is return loss, check whether the for loop iterates at least once.

KanZa

July 20, 2022, 8:25am

3

I don't think the for loop is running even once.

thecho7

July 20, 2022, 8:32am

4

It seems dataloaders is invalid. Check len(dataloaders['train']); it may return 0.
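
To see how that length could end up as 0, here is a minimal sketch of how the number of batches is derived, assuming a map-style dataset with the default sampler. The helper name expected_num_batches is hypothetical and not part of PyTorch; drop_last is only one possible cause, and the thread does not say whether it is used here.

```python
import math


def expected_num_batches(num_samples, batch_size, drop_last=False):
    """Hypothetical helper: batches a DataLoader would yield for a map-style dataset."""
    if drop_last:
        # An incomplete final batch is discarded.
        return num_samples // batch_size
    # An incomplete final batch is kept.
    return math.ceil(num_samples / batch_size)


# An empty dataset always gives zero batches.
print(expected_num_batches(0, 128))
# With drop_last=True, fewer samples than one batch also gives zero batches.
print(expected_num_batches(100, 128, drop_last=True))
```

If either case applies, the training loop body never executes.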

KanZa

July 20, 2022, 8:40am

5

In train(), where I call train_epoch(), I printed the dataloaders:

    DataLoader {'train': <torch.utils.data.dataloader.DataLoader object at 0x7f4d756dbc10>, 'test': <torch.utils.data.dataloader.DataLoader object at 0x7f4d756dbb20>}

    def train(models, loss_module, criterion, optimizers_backbone, optimizers_module, scheduler_backbone, scheduler_module, dataloaders, num_epochs, epoch_loss):
        print('>> Train a Model.')
        best_acc = 0.

        for epoch in range(num_epochs):
            best_loss = torch.tensor([0.5]).cuda()
            print("DataLoader", dataloaders)

            loss = train_epoch(models, loss_module, criterion, optimizers_backbone, optimizers_module, dataloaders, epoch, epoch_loss)

            scheduler_backbone.step()
            scheduler_module.step()

            if False and epoch % 20 == 7:
                acc = test(models, epoch, method, dataloaders, mode='test')
                # acc = test(models, dataloaders, mc, 'test')
                if best_acc < acc:
                    best_acc = acc
                print('Val Acc: {:.3f} \t Best Acc: {:.3f}'.format(acc, best_acc))

        print('>> Finished.')

KanZa

July 20, 2022, 8:42am

6

This code worked absolutely fine for CIFAR-100. When I tried it with ImageNet, it started giving this error, and now it does not work even for CIFAR-100.

Hi, the dataloaders you printed look fine; those are just references to the DataLoader objects. Please check the length of the train dataloader. An easy way is:

    print(len(dataloaders['train']))

    # OR count the batches by iterating over the dataloader
    num_batches = 0
    for data in dataloaders['train']:
        num_batches += 1
    print("Length of dataloader:", num_batches)

The training code looks fine, but your loss variable is defined only inside the loop, and the loop runs once per batch in the dataloader. If the dataloader yields no batches, the loop body never runs and the loss variable is left unassigned.

You can initialize the loss variable outside the for loop, but that won't solve the underlying issue. It will just stop the error from being thrown.
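
As a rough illustration of the point above, here is a minimal pure-Python sketch, with plain lists standing in for batches. The function names last_loss and last_loss_checked are hypothetical and are not taken from the training script. The second variant initializes the variable but still fails loudly, so the empty dataloader is not silently hidden.

```python
def last_loss(batches):
    """Mimics train_epoch: loss is assigned only inside the loop."""
    for batch in batches:
        loss = sum(batch) / len(batch)
    return loss  # unbound if batches was empty


def last_loss_checked(batches):
    """Same loop, but reports an empty input with a descriptive error."""
    loss = None
    for batch in batches:
        loss = sum(batch) / len(batch)
    if loss is None:
        raise RuntimeError("no batches were produced; check the dataset and dataloader")
    return loss


print(last_loss([[1.0, 3.0]]))  # one batch, so loss is assigned

try:
    last_loss([])  # stands in for an empty dataloader
except UnboundLocalError as err:
    print("UnboundLocalError:", err)
```

The explicit check makes the real problem (no data reaching the loop) visible instead of returning a placeholder value.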

Hope it helps.