Hello everyone,

I’m currently working on a simple ImageNet classifier and I’ve encountered an issue.

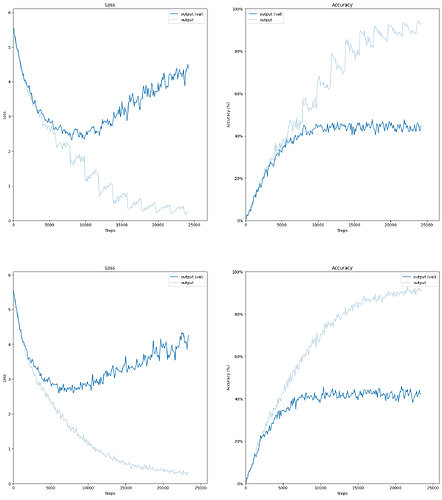

I’ve been following the standard practice of reshuffling the training data at the start of each epoch, but I’ve noticed some strange spikes in both the training loss and the training accuracy (the validation curves don't show them).
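
For clarity, here is a simplified, framework-agnostic sketch of what I mean by per-epoch shuffling. It is not my actual training code, and the function names are made up for illustration. It assumes the dataset is indexed `0..N-1`. The key property is that every image is seen exactly once per epoch, in a new random order each time. If you use PyTorch, this is roughly what `DataLoader(..., shuffle=True)` does.

```python
import random
from typing import Iterator, List


def epoch_shuffled_indices(num_samples: int, num_epochs: int, seed: int = 0) -> Iterator[List[int]]:
    """Hypothetical helper: yield a fresh permutation of range(num_samples) per epoch.

    This is sampling WITHOUT replacement within an epoch.
    """
    rng = random.Random(seed)
    indices = list(range(num_samples))
    for _ in range(num_epochs):
        rng.shuffle(indices)  # reshuffle at the start of every epoch
        yield list(indices)   # copy so the next shuffle doesn't mutate what the caller holds


def batches(order: List[int], batch_size: int) -> Iterator[List[int]]:
    """Split one epoch's ordering into consecutive mini-batches (last one may be smaller)."""
    for start in range(0, len(order), batch_size):
        yield order[start:start + batch_size]


if __name__ == "__main__":
    for epoch, order in enumerate(epoch_shuffled_indices(10, 2)):
        # Every index appears exactly once per epoch.
        assert sorted(order) == list(range(10))
        print(epoch, list(batches(order, 4)))
```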

The strange part is that when I deviate from this practice and instead pick images at random with replacement (so there are no epochs at all), both the loss and accuracy curves become stable.
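
By contrast, the with-replacement scheme can be sketched like this. Again, this is a simplified illustration with a made-up function name, not the real training code, and it assumes the dataset is indexed `0..N-1`. Each draw is independent, so there are no epoch boundaries. Over N draws, roughly 1 - 1/e ≈ 63% of the images are seen at least once, while others repeat. In PyTorch, the closest equivalent is `torch.utils.data.RandomSampler(dataset, replacement=True, num_samples=...)`.

```python
import random
from typing import List


def sample_with_replacement(num_samples: int, num_draws: int, seed: int = 0) -> List[int]:
    """Hypothetical helper: draw num_draws indices uniformly at random WITH replacement.

    Every draw is independent, so there is no notion of an epoch.
    """
    rng = random.Random(seed)
    return [rng.randrange(num_samples) for _ in range(num_draws)]


if __name__ == "__main__":
    n = 10_000
    draws = sample_with_replacement(n, n)  # one "epoch's worth" of draws
    # Only about 1 - 1/e of the dataset is covered; some images repeat, others are skipped.
    print(f"fraction of unique images seen: {len(set(draws)) / n:.3f}")
```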

Any help or insights would be greatly appreciated, thank you!