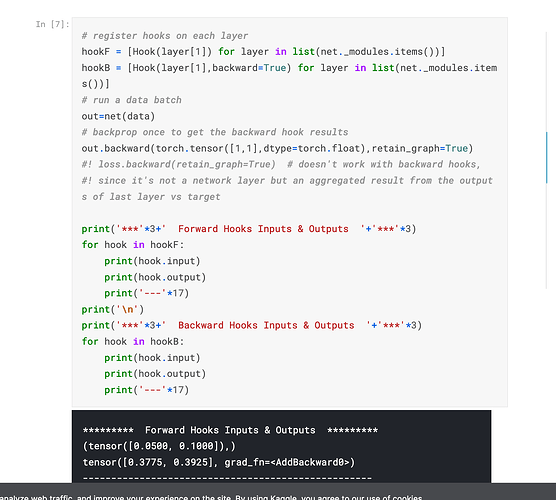

Why does the Kaggle article say (see the attached screenshot) that `loss.backward(retain_graph=True) # doesn't work with backward hooks`? I don't quite understand the explanation, especially since further down the article they do call `loss.backward()` (not shown in the screenshot, but it is in the article) and it seems to work. I got this from the Kaggle article: Understanding Pytorch hooks | Kaggle
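One way to test the claim directly is the minimal sketch below. It assumes PyTorch 1.8 or later, where `nn.Module.register_full_backward_hook` exists. The toy `nn.Linear` model, the `backward_hook` function, and the `hook_calls` counter are hypothetical and not taken from the article, and the article may be referring to a different (older) hook API or PyTorch version. The sketch registers a module backward hook, runs `loss.backward(retain_graph=True)` followed by a second `loss.backward()` over the same graph, and records how many times the hook fires.

```python
import torch
import torch.nn as nn

# Hypothetical toy model and call log, for illustration only.
model = nn.Linear(3, 1)
hook_calls = []

def backward_hook(module, grad_input, grad_output):
    # Record the shape of the gradient w.r.t. the module's output each time the hook fires.
    hook_calls.append(tuple(grad_output[0].shape))

handle = model.register_full_backward_hook(backward_hook)

x = torch.randn(4, 3, requires_grad=True)
loss = model(x).sum()

# First pass: retain_graph=True keeps the graph's buffers so it can be traversed again.
loss.backward(retain_graph=True)
# Second pass over the same graph; its buffers are freed afterwards.
loss.backward()

handle.remove()
print(f"backward hook fired {len(hook_calls)} time(s)")
```

If the hook fires once per `backward()` call, then `retain_graph=True` by itself does not stop this kind of module backward hook from running in this setup.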

Thanks so much!