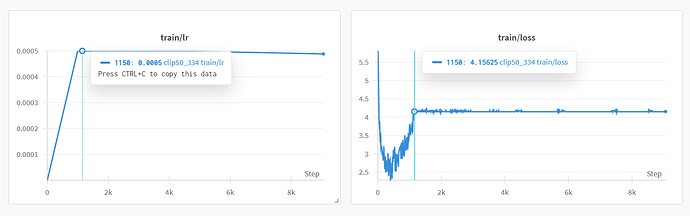

I am training a standard CLIP model with a pretrained ResNet. The loss becomes constant after the warmup steps. Does anyone have suggestions for how to fix this? Thanks!

Hi Eco!

It seems improbably coincidental that your loss flattens out almost exactly when your learning rate becomes constant at 0.0005. I suspect that something else is also happening when you switch learning rates.

What happens if you warm up for 500 or 2000 steps instead of 1000, or if you set your constant learning-rate value to 0.0003 or 0.0007 instead of 0.0005?
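One way to set up that experiment is sketched below in plain Python. It is only an illustration: I am assuming a linear warmup followed by a constant rate (your actual schedule may have a different shape), and the names `warmup_then_constant_lr`, `sweep_configs`, and `first_constant_step` are made up for this example rather than taken from your code or any library.

```python
from itertools import product


def warmup_then_constant_lr(step, warmup_steps, base_lr):
    """Learning rate at a given 0-indexed step.

    Assumption: linear warmup up to base_lr, then constant.
    """
    if warmup_steps <= 0:
        return base_lr
    return base_lr * min(1.0, (step + 1) / warmup_steps)


# The values asked about above, plus the original 1000 / 0.0005 as a baseline.
WARMUP_CHOICES = (500, 1000, 2000)
LR_CHOICES = (3e-4, 5e-4, 7e-4)


def sweep_configs():
    """Every (warmup_steps, base_lr) combination to try, one run each."""
    return [
        {"warmup_steps": w, "base_lr": lr}
        for w, lr in product(WARMUP_CHOICES, LR_CHOICES)
    ]


def first_constant_step(warmup_steps, base_lr, max_steps=5000):
    """First step at which the schedule has reached base_lr, or None."""
    for step in range(max_steps):
        if warmup_then_constant_lr(step, warmup_steps, base_lr) >= base_lr:
            return step
    return None


if __name__ == "__main__":
    for cfg in sweep_configs():
        end = first_constant_step(**cfg)
        print(f"warmup={cfg['warmup_steps']:>4}  lr={cfg['base_lr']:.4f}  "
              f"constant from step {end}")
```

For each run, compare the step where the loss flattens with the step where the rate becomes constant. If the plateau moves along with the end of warmup, the switch itself is implicated; if it stays at the same step regardless, the cause is probably elsewhere.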

Best.

K. Frank