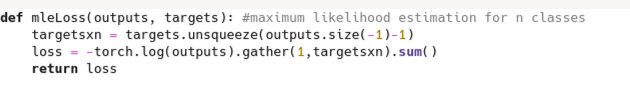

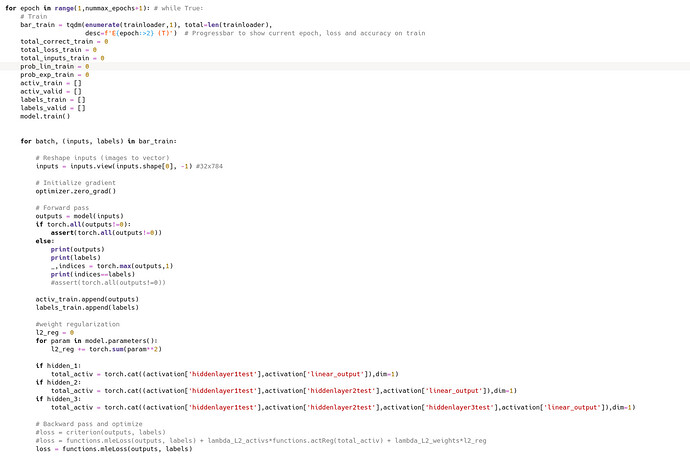

Hi! I defined a loss function (mleLoss; a snapshot of it is attached) that should be equivalent to CrossEntropyLoss() (the reason for this is not important right now), but during training I get loss=nan. I thought this might happen because at some point the loss function receives a probability of 0: since we compute -log(p_i), the loss diverges if p_i=0. However, when I look at the outputs (softmax probabilities) at the step where loss=nan, the maximum probabilities correspond to their labels, so I do not see why I get loss=nan. Furthermore, when I use CrossEntropyLoss() as my loss function I do not get loss=nan, which leads me to think that the problem is in the definition of mleLoss(). But to me, mleLoss() and CrossEntropyLoss() are identical, and I do not see where the error is. Thanks in advance!
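The real mleLoss is only visible in the screenshot, so the sketch below rests on an assumption: that mleLoss computes -sum(y * log(softmax(x))) over all classes with one-hot labels y. Under that assumption, a correct argmax does not protect you. If any *non-target* probability underflows to exactly 0, its log is -inf, and the one-hot weight gives 0 * -inf = nan, which then poisons the whole sum. CrossEntropyLoss combines LogSoftmax and NLLLoss: it computes log-probabilities directly (log-sum-exp, never log(0)) and uses only the target class's entry. The functions `naive_ce` and `stable_ce` are hypothetical names, written in NumPy rather than PyTorch so the float behaviour is easy to reproduce.

```python
import numpy as np


def softmax(logits):
    # Subtract the row max before exponentiating so exp() cannot overflow.
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def naive_ce(logits, one_hot):
    # Hypothetical mleLoss-style loss: -sum(y * log(p)) over ALL classes.
    # A probability that underflows to 0.0 gives log(0) = -inf, and
    # 0 * -inf = nan for every non-target class with p == 0.
    p = softmax(logits)
    with np.errstate(divide="ignore", invalid="ignore"):
        return -(one_hot * np.log(p)).sum(axis=1).mean()


def stable_ce(logits, targets):
    # Log-softmax via log-sum-exp: log_p = z - log(sum(exp(z))).
    # Very negative logits give large negative log_p, never -inf.
    z = logits - logits.max(axis=1, keepdims=True)
    log_p = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    # Pick only the target class's log-probability (like NLLLoss).
    return -log_p[np.arange(len(targets)), targets].mean()


logits = np.array([[1000.0, 0.0, -5.0]])  # class 0 is the clear argmax
print(naive_ce(logits, np.array([[1.0, 0.0, 0.0]])))  # nan
print(stable_ce(logits, np.array([0])))  # finite, approximately 0
```

In PyTorch, the equivalent fix would be to feed raw logits (not softmax outputs) to `torch.nn.functional.log_softmax` followed by `torch.nn.functional.nll_loss`, or simply to `CrossEntropyLoss`, which expects unnormalized logits and does this internally. Clamping the probabilities with a small epsilon before the log also avoids the nan, but it is less accurate than working in log space.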