Hello!

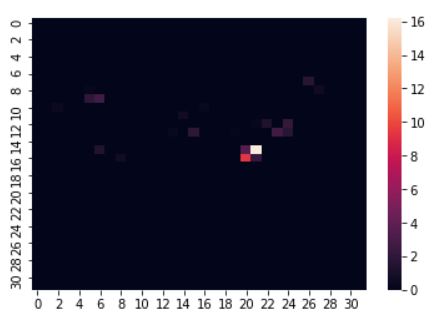

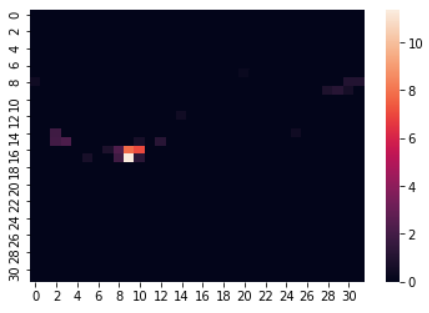

I am having trouble building a convolutional autoencoder. The main issue is that all weights are driven to zero very quickly during training. This is not surprising, since the images I'm using are very sparse. Two examples are shown above (each is actually just a 2D tensor, but I'm showing it here as a heatmap):

The question is: what kind of loss function would be good for such a case? L1 is bad, because my network settles in the wrong minimum (it effectively multiplies the input by zero and outputs an all-zero image). Intuitively, I want to penalize false negatives more heavily, since they matter more in my case than false positives. I've also tried a variety of different autoencoder architectures, none of which work on this data. The same network trains on MNIST/CIFAR10 well enough. Thanks in advance!
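To make the asymmetry concrete, here is a minimal NumPy sketch of a weighted L1 loss. It is an illustration, not a tested solution. The function name `weighted_l1`, the `fn_weight` parameter, and the toy 4x4 image are all hypothetical. It weights errors on non-zero target pixels (where false negatives occur) more heavily than errors on empty pixels:

```python
import numpy as np

def weighted_l1(pred, target, fn_weight=10.0, eps=0.0):
    """L1 loss that up-weights errors on pixels where the target is non-zero.

    fn_weight is a hypothetical hyperparameter: an error on an active target
    pixel counts fn_weight times as much as an error on an empty pixel.
    """
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    active = np.abs(target) > eps              # mask of non-zero target pixels
    weights = np.where(active, fn_weight, 1.0)
    # Normalize by the total weight so the loss stays on a comparable scale.
    return float(np.sum(weights * np.abs(pred - target)) / np.sum(weights))

# Toy sparse "image": one active pixel out of 16 (assumed data for illustration).
target = np.zeros((4, 4))
target[1, 2] = 1.0
all_zero = np.zeros_like(target)               # the degenerate all-zero output

plain = float(np.mean(np.abs(all_zero - target)))         # plain L1
weighted = weighted_l1(all_zero, target, fn_weight=10.0)  # weighted L1
print(plain, weighted)
```

On this toy input the all-zero output gets a plain L1 loss of 1/16, while the weighted version gives 10/25, so the trivial solution is penalized much more. Whether this is enough to escape the all-zero minimum on real data is an open question.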