Hi,

Apologies if this seems like a noob question; I’ve read similar issues and their responses and looked at all the related examples.

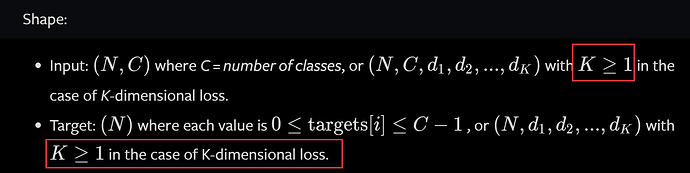

I’m really confused about what the loss functions expect for their predicted (output) and ideal (target) arguments. I’m building a CNN for image classification and there are 4 possible classes.

When I try to use nn.CrossEntropyLoss, I get this error:

RuntimeError: multi-target not supported at ClassNLLCriterion.cu:16

The batch size is 10 and there are 4 classes, so the outputs tensor is 10 x 4, and so is the labels tensor.

The code (trimmed down) is:

    criterion = nn.CrossEntropyLoss().cuda()
    print("using CE loss")

    optimizer = torch.optim.SGD(model.parameters(),
                                lr=learning_rate,
                                momentum=0.9,
                                dampening=0,
                                weight_decay=l2_reg,
                                nesterov=False)

    model.train()
    total_step = len(train_loader)

    for epoch in range(num_epochs):
        for i, (images, labels) in enumerate(train_loader):
            inputs = images.cuda().half()
            labels = labels.cuda().long()

            optimizer.zero_grad()
            outputs = model(inputs)

            print(labels.size())
            print(outputs.size())

            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()

I understand that the criterion expects to see a 1D tensor, and so I’ve tried flattening the labels:

    print(labels.size())
    ideals = labels.view(-1)
    print(ideals.size())
    loss = criterion(outputs, ideals)

So now the sizes are:

labels: (10, 4)

ideals: (40,)

And now I get this error:

ValueError: Expected input batch_size (10) to match target batch_size (40)

Which makes sense. So what I don’t get is:

- do I need to iterate the ideal and actual output for each input in the batch?

- or do I need to reduce both tensors to the same size and dimensions?
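For reference, a minimal sketch of the shapes involved. It assumes the (10, 4) labels tensor is one-hot encoded, which the post does not actually state. nn.CrossEntropyLoss takes raw scores of shape (N, C) and class indices of shape (N,), so under that assumption neither iterating over the batch nor flattening is needed: taking the argmax over the class dimension gives one index per sample. The random tensors below are hypothetical stand-ins for the model outputs and the data loader labels, and everything runs on the CPU.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch_size, num_classes = 10, 4

# Stand-in for model(inputs): raw, unnormalised scores of shape (10, 4).
outputs = torch.randn(batch_size, num_classes)

# Hypothetical one-hot labels of shape (10, 4), built from known class ids.
class_ids = torch.randint(0, num_classes, (batch_size,))
one_hot_labels = nn.functional.one_hot(class_ids, num_classes)

criterion = nn.CrossEntropyLoss()

# Collapse each one-hot row to its class index: (10, 4) -> (10,).
targets = one_hot_labels.argmax(dim=1)

# Input is (N, C), target is (N,) with dtype long; the loss is a scalar.
loss = criterion(outputs, targets)
print(outputs.shape, targets.shape, loss.item())
```

As far as I know, PyTorch 1.10 and later also accept floating-point class probabilities of shape (N, C) as the target, but class indices work across versions.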

Most of the examples seem to call the criterion directly:

    loss = criterion(outputs, labels)

The only exception I’ve seen is in the official tutorial (https://pytorch.org/tutorials/beginner/blitz/cifar10_tutorial.html#training-on-gpu), which has this line:

    _, predicted = torch.max(outputs, 1)

But sadly I don’t see an explanation of it.
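As a small sketch of what that line computes: torch.max(outputs, 1) returns a pair of tensors, the largest score in each row and the index where it occurs. The index is the predicted class, which is typically compared against the labels to count correct predictions rather than passed to the loss. The scores and labels below are made-up values for illustration only.

```python
import torch

# Made-up scores for a batch of 2 samples and 4 classes.
outputs = torch.tensor([[0.1, 2.0, -1.0, 0.3],
                        [1.5, 0.2, 0.1, -0.4]])

# Reduce over dim 1 (the class dimension): per-row max value and its index.
values, predicted = torch.max(outputs, 1)

# Made-up ground-truth class indices, shape (2,).
labels = torch.tensor([1, 2])

# Count how many predicted classes match the labels.
correct = (predicted == labels).sum().item()
print(predicted, correct)
```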

Many thanks!

Alex