Hi there!

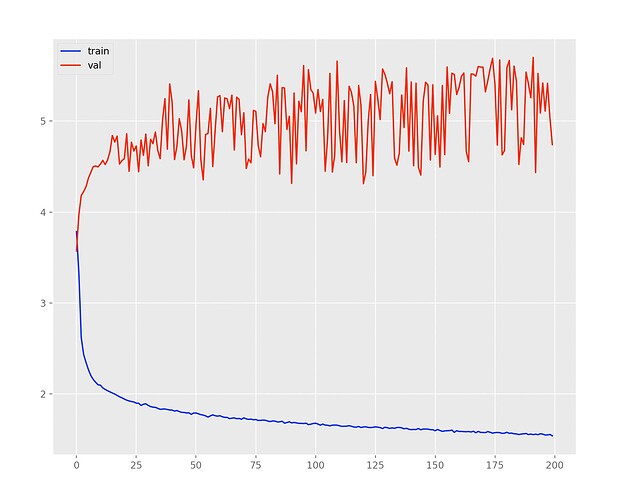

I am working on an image-to-text seq2seq model that uses a CNN as the encoder and an LSTM as the decoder. I have run into an issue that I have not managed to solve. After a few epochs, my training loss either plateaus or decreases very slowly: it takes almost 100 epochs to go from ~1.68 to ~1.55, and these values still seem too high to be useful. Meanwhile, my validation loss increases and then either plateaus or fluctuates within a fixed range, as shown in the figure.
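To put those loss numbers in context, here is a small sketch. It assumes the reported values are mean per-token cross-entropy in nats (the default for PyTorch's `nn.CrossEntropyLoss`) with padding positions excluded from the loss. Under that assumption, a loss of ~1.55 corresponds to a per-token perplexity of about 4.7, while a model that guesses uniformly over ~630 tokens would score ln(630) ≈ 6.45. The helper names are my own.

```python
import math


def uniform_baseline_loss(vocab_size: int) -> float:
    """Cross-entropy (in nats) of a model that gives every token equal probability."""
    return math.log(vocab_size)


def perplexity(loss_nats: float) -> float:
    """Per-token perplexity for a mean cross-entropy measured in nats."""
    return math.exp(loss_nats)


vocab_size = 630  # approximate vocabulary size from the post
for loss in (1.68, 1.55):  # training losses quoted above
    print(f"loss={loss:.2f}  perplexity={perplexity(loss):.2f}")
print(f"uniform-guess baseline loss={uniform_baseline_loss(vocab_size):.2f}")
```

If the loss is instead averaged over padded positions too, these numbers are not directly comparable.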

I tried the following to improve the model’s performance, but nothing worked:

- Increased the size of the dataset from 50K to 100K.

- Preprocessed the data differently to reduce the output dimensionality, i.e., the vocabulary size, which is now ~630 tokens. (I am using torchtext and `BucketIterator` for preprocessing.)

- Increased and decreased the size of both the encoder and the decoder, adding and removing layers in both the CNN and the LSTM.

- Tried changing the hidden size and the learning rate.

- Trained for more epochs.

- Tried initializing the weights at the beginning of every batch, since I am using cross-entropy loss.

- Switched from epoch-based to step-based training.

- Tried different datasets; the resulting plots showed the same behavior, just at a different magnitude.
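For anyone unfamiliar with the preprocessing step in the list, this is roughly what a minimum-frequency vocabulary cutoff and length bucketing do. It is a simplified plain-Python sketch, not torchtext's implementation: `build_vocab`, `numericalize`, `bucket_batches`, and the special tokens are hypothetical names I chose. torchtext's vocabulary builders accept a comparable `min_freq` argument, and `BucketIterator` likewise batches examples of similar length to reduce padding.

```python
from collections import Counter
from typing import Dict, Iterable, List

# Hypothetical special tokens; torchtext lets you choose your own.
SPECIALS = ["<pad>", "<unk>", "<sos>", "<eos>"]


def build_vocab(token_lists: Iterable[List[str]], min_freq: int = 2) -> Dict[str, int]:
    """Map tokens to ids, keeping only tokens seen at least `min_freq` times.

    Rarer tokens get no id of their own and fall back to <unk>, which shrinks
    the decoder's output layer (one logit per vocabulary entry).
    """
    counts = Counter(tok for tokens in token_lists for tok in tokens)
    vocab = {tok: i for i, tok in enumerate(SPECIALS)}
    # Most frequent first; ties broken alphabetically so ids are deterministic.
    for tok, freq in sorted(counts.items(), key=lambda kv: (-kv[1], kv[0])):
        if freq >= min_freq and tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def numericalize(tokens: List[str], vocab: Dict[str, int]) -> List[int]:
    """Convert a caption to ids wrapped in <sos>/<eos>; unknown tokens map to <unk>."""
    unk = vocab["<unk>"]
    return [vocab["<sos>"]] + [vocab.get(t, unk) for t in tokens] + [vocab["<eos>"]]


def bucket_batches(seqs: List[List[int]], batch_size: int, pad_idx: int) -> List[List[List[int]]]:
    """Batch sequences of similar length together, padding each batch to its own max length."""
    order = sorted(range(len(seqs)), key=lambda i: len(seqs[i]))
    batches = []
    for start in range(0, len(order), batch_size):
        batch = [seqs[i] for i in order[start:start + batch_size]]
        max_len = max(len(s) for s in batch)
        batches.append([s + [pad_idx] * (max_len - len(s)) for s in batch])
    return batches


# Toy usage with made-up captions.
captions = [["a", "cat"], ["a", "dog", "runs"], ["a", "cat", "sits", "down"], ["a", "bird"]]
vocab = build_vocab(captions, min_freq=2)
seqs = [numericalize(c, vocab) for c in captions]
batches = bucket_batches(seqs, batch_size=2, pad_idx=vocab["<pad>"])
```

A real iterator would also shuffle the batches each epoch, and the padding id would usually be excluded from the loss (for example via the `ignore_index` argument of `nn.CrossEntropyLoss`).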

Could you please help me? Any suggestions would be appreciated!

Thank you!