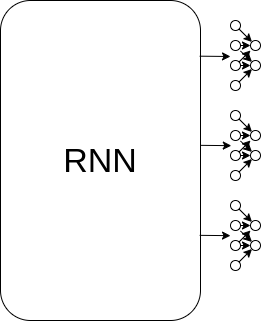

Hi all, I have a network made up of an RNN layer followed by three separate fully connected branches, each of which outputs a single value.

The net is defined by:

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    class RNN(nn.Module):
        def __init__(self, hidden_size, num_layers, fd_n):
            super(RNN, self).__init__()
            self.hidden_size = hidden_size
            self.num_layers = num_layers
            # Shared recurrent layer
            self.rnn = nn.RNN(input_size=510, hidden_size=hidden_size, num_layers=num_layers, batch_first=True, dropout=0.0)
            # Three separate fully connected branches, one output value each
            self.fcx = nn.Linear(in_features=hidden_size, out_features=fd_n)
            self.fcx2 = nn.Linear(in_features=fd_n, out_features=1)
            self.fcy = nn.Linear(in_features=hidden_size, out_features=fd_n)
            self.fcy2 = nn.Linear(in_features=fd_n, out_features=1)
            self.fcw = nn.Linear(in_features=hidden_size, out_features=fd_n)
            self.fcw2 = nn.Linear(in_features=fd_n, out_features=1)

        def forward(self, x):
            # `device` is defined elsewhere in the script
            h0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size).to(device)
            out_rnn, _ = self.rnn(x, h0)
            # Each branch takes the RNN output at the last time step
            outx = self.fcx(F.relu(out_rnn[:, -1, :]))
            outx = self.fcx2(F.relu(outx))
            outy = self.fcy(F.relu(out_rnn[:, -1, :]))
            outy = self.fcy2(F.relu(outy))
            outw = self.fcw(F.relu(out_rnn[:, -1, :]))
            outw = self.fcw2(F.relu(outw))
            return outx, outy, outw
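As a quick sanity check on the output shapes, here is a minimal sketch using a scaled-down stand-in for the model. `TinyThreeHead`, its sizes, and the random input are all made up for illustration; only the layout (one RNN, three branches ending in `out_features=1`) mirrors the definition above.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyThreeHead(nn.Module):
    """Hypothetical scaled-down stand-in: one shared RNN, three branches."""

    def __init__(self, input_size=8, hidden_size=16, fd_n=4):
        super().__init__()
        self.rnn = nn.RNN(input_size, hidden_size, batch_first=True)
        self.heads = nn.ModuleList([
            nn.Sequential(nn.Linear(hidden_size, fd_n), nn.ReLU(), nn.Linear(fd_n, 1))
            for _ in range(3)
        ])

    def forward(self, x):
        out_rnn, _ = self.rnn(x)          # initial hidden state defaults to zeros
        last = F.relu(out_rnn[:, -1, :])  # last time step, shape (batch, hidden_size)
        return tuple(head(last) for head in self.heads)


model = TinyThreeHead()
inputs = torch.randn(5, 7, 8)             # (batch, sequence length, features)
outs = model(inputs)
shapes = [tuple(o.shape) for o in outs]
print(shapes)
```

Each branch returns a tensor of shape `(batch, 1)`, so whatever target is passed to each criterion would need the same shape.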

The training part is:

    outputs_x, outputs_y, outputs_w = model(inputs)
    loss_x = criterion_x(outputs_x, labels)
    loss_y = criterion_y(outputs_y, labels)
    loss_w = criterion_w(outputs_w, labels)
    loss = loss_x + loss_y + loss_w
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
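To check whether summing the three losses and calling `backward()` once reaches every branch as well as the shared part, here is a minimal sketch. It rests on assumptions not shown in the post: a plain `nn.Linear` stands in for the RNN, the criterion is `nn.MSELoss`, and the targets have one column per output.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

shared = nn.Linear(5, 4)                                  # stand-in for the shared RNN
heads = nn.ModuleList([nn.Linear(4, 1) for _ in range(3)])
criterion = nn.MSELoss()                                  # assumed loss

x = torch.randn(10, 5)
targets = torch.randn(10, 3)                              # assumed: one column per head

features = torch.relu(shared(x))
# Each head is compared with its own (10, 1) target column
losses = [criterion(head(features), targets[:, i:i + 1]) for i, head in enumerate(heads)]
loss = torch.stack(losses).sum()                          # same as loss_x + loss_y + loss_w
loss.backward()

params = list(shared.parameters()) + list(heads.parameters())
all_have_grad = all(p.grad is not None for p in params)
print(all_have_grad)
```

In this sketch the single `backward()` call populates `.grad` on the shared layer and on all three heads, since the summed loss depends on each of them.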

At the start of training I always get this warning:

UserWarning: Using a target size (torch.Size([1520, 3])) that is different to the input size (torch.Size([1520, 1])). This will likely lead to incorrect results due to broadcasting. Please ensure they have the same size.
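A minimal sketch of what the warning describes, assuming the criteria are `nn.MSELoss` and that `labels` holds one column per output (neither is shown above, so both are assumptions). The numbers are toy values chosen so the effect is easy to see.

```python
import warnings

import torch
import torch.nn as nn

criterion = nn.MSELoss()                          # assumed loss

outputs_x = torch.zeros(4, 1)                     # one prediction per sample
labels = torch.tensor([[1.0, 2.0, 3.0]] * 4)      # shape (4, 3)

with warnings.catch_warnings():
    warnings.simplefilter("ignore")               # silence the UserWarning for the demo
    # (4, 1) against (4, 3): the prediction is broadcast across all three columns
    broadcast_loss = criterion(outputs_x, labels)

# (4, 1) against (4, 1): the prediction is compared with its own column only
matched_loss = criterion(outputs_x, labels[:, 0:1])

print(broadcast_loss.item(), matched_loss.item())
```

With mismatched shapes, the single output is scored against all three label columns at once (mean of 1, 4 and 9 here), whereas slicing a `(N, 1)` column scores it against one target only. If the labels really are laid out one column per output, passing `labels[:, 0:1]`, `labels[:, 1:2]` and `labels[:, 2:3]` to the three criteria would remove the warning.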

Am I backpropagating correctly?

Thanks