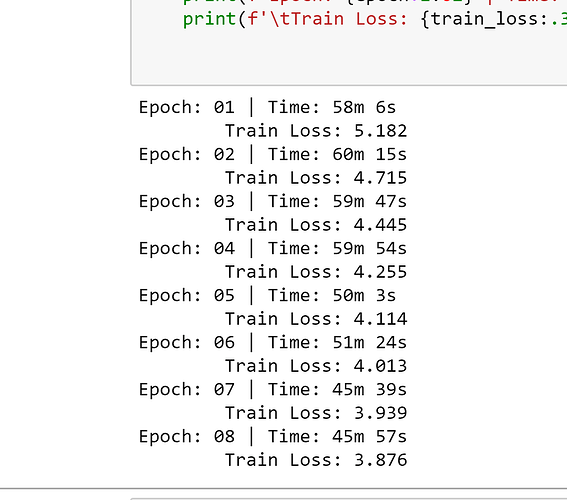

I am seeing some strange behavior while training a video captioning model. The training loss decreases every epoch for the first 8 epochs. When I reload the model from the last checkpoint and train for 4 more epochs, the loss starts out higher than the last recorded loss and does not drop in subsequent epochs.

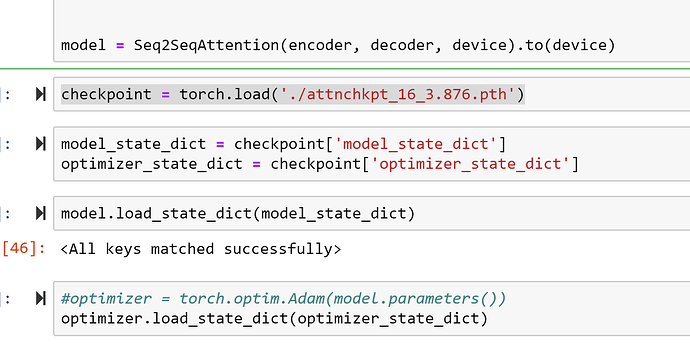

I load my model this way:

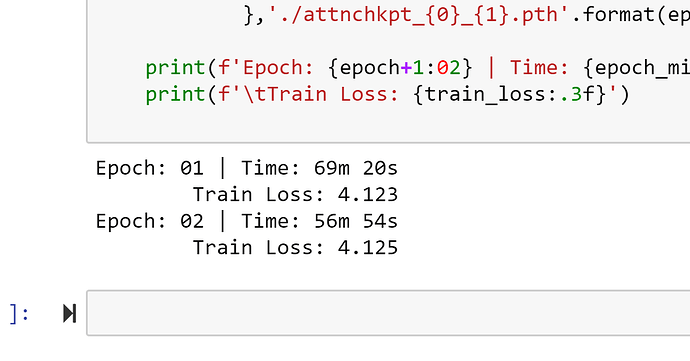

The loss on retraining

Is this common? What could cause it, and how can I fix or avoid it?
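One possible cause worth checking is that the checkpoint restores only the model weights and not the optimizer state (momentum buffers, step counters, learning-rate schedule position). This is an assumption, since the loading code is only visible as an image. The sketch below is framework-agnostic plain Python, not the actual training code: the toy loss, the momentum optimizer, and every name in it (`train`, `state`, `velocity`) are hypothetical. It only illustrates that a resume which resets the optimizer state does not continue the same trajectory as an uninterrupted run.

```python
import copy


def train(state, epochs, lr=0.1, beta=0.9):
    """Run momentum SGD on the toy loss (w - 3)^2, updating `state` in place."""
    losses = []
    for _ in range(epochs):
        grad = 2.0 * (state["w"] - 3.0)
        # The velocity is optimizer state: it carries information across steps.
        state["velocity"] = beta * state["velocity"] + grad
        state["w"] -= lr * state["velocity"]
        state["epoch"] += 1
        losses.append((state["w"] - 3.0) ** 2)
    return losses


# Reference: 12 epochs without interruption.
reference = {"w": 0.0, "velocity": 0.0, "epoch": 0}
train(reference, 12)

# Train 8 epochs, then checkpoint the weights AND the optimizer state.
run = {"w": 0.0, "velocity": 0.0, "epoch": 0}
train(run, 8)
checkpoint = copy.deepcopy(run)

# Resume A: restore everything that was saved.
full_resume = copy.deepcopy(checkpoint)
train(full_resume, 4)

# Resume B: restore only the weights; the optimizer state starts from zero.
weights_only = {"w": checkpoint["w"], "velocity": 0.0, "epoch": checkpoint["epoch"]}
train(weights_only, 4)

full_matches = full_resume["w"] == reference["w"]
weights_only_matches = weights_only["w"] == reference["w"]
print("full resume matches uninterrupted run:", full_matches)
print("weights-only resume matches uninterrupted run:", weights_only_matches)
```

In a real framework the same idea would mean saving and reloading the optimizer (and any scheduler) state together with the model weights, and making sure the model is back in training mode before continuing.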

Thank you.