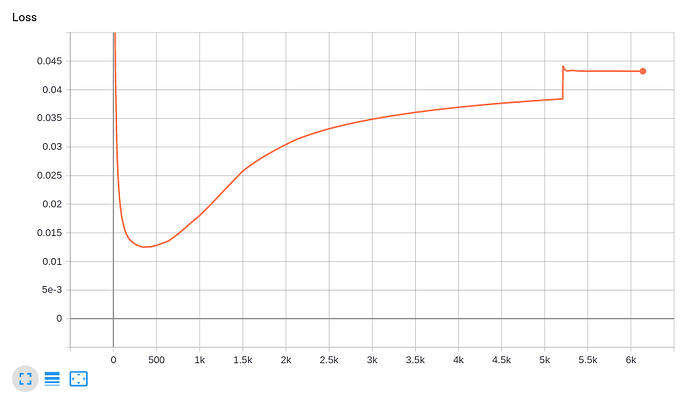

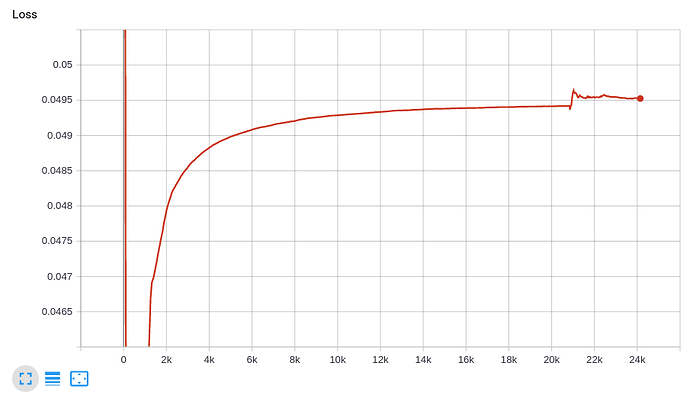

I am training two models on a set of videos and logging the loss after each epoch. Both models are performing poorly with respect to loss. During the first epoch the loss first dips and then increases, and in the next epoch it takes a step up. What could be the possible reasons? I am using the Adam optimizer, and the task is multi-label classification.

Adjusting the learning rate and/or adding gradient clipping might help.

Hey, thank you. I believe that using Adam should take care of adjusting the learning rate, right?

I will apply gradient clipping and see what happens.
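For reference, a minimal sketch of where gradient clipping would sit in a PyTorch training step. The toy model, the random multi-hot targets, the `BCEWithLogitsLoss` criterion, and `max_norm = 1.0` are all assumptions for illustration, not the setup from this thread:

```python
import torch
from torch import nn

torch.manual_seed(0)

# Toy stand-ins (assumptions): 16 input features, 5 labels, random multi-hot targets.
model = nn.Linear(16, 5)
criterion = nn.BCEWithLogitsLoss()  # a common loss for multi-label classification
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

inputs = torch.randn(8, 16)
targets = torch.randint(0, 2, (8, 5)).float()

max_norm = 1.0  # assumed threshold; tune it for the real model

optimizer.zero_grad()
loss = criterion(model(inputs), targets)
loss.backward()
# Rescale gradients in place so that their global L2 norm is at most max_norm.
# The return value is the norm measured before clipping, which is worth logging.
norm_before = torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm)
optimizer.step()
```

The clipping call goes between `loss.backward()` and `optimizer.step()`. Logging `norm_before` for a few epochs can show whether exploding gradients are actually the problem.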

As @jubick said, the first thing that comes to mind is lowering the learning rate. What is your current learning rate? Adam adapts the step size for each parameter, but it doesn’t change the base learning rate over the course of training; for that you would need a learning rate scheduler. Here is an example of how to set the learning rate for Adam:

optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
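To illustrate the point about schedulers, here is a small sketch that records the base learning rate stored in the optimizer, first without and then with a scheduler. The toy model, the dummy `run_epoch` helper, and the `StepLR` settings are assumptions chosen only for the demonstration:

```python
import torch
from torch import nn

model = nn.Linear(4, 2)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

def run_epoch():
    # One dummy optimization step standing in for a full training epoch.
    optimizer.zero_grad()
    model(torch.randn(8, 4)).pow(2).mean().backward()
    optimizer.step()

# Without a scheduler, the base learning rate stays at its initial value.
lrs_plain = []
for _ in range(3):
    run_epoch()
    lrs_plain.append(optimizer.param_groups[0]["lr"])

# With StepLR (assumed settings), the base rate is halved after every epoch.
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=1, gamma=0.5)
lrs_sched = []
for _ in range(3):
    run_epoch()
    scheduler.step()
    lrs_sched.append(optimizer.param_groups[0]["lr"])
```

Here `lrs_plain` stays constant, while `lrs_sched` shrinks by the factor `gamma` each epoch.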

If you share a code snippet, it’ll help with debugging what could be wrong in your code.

Okay, so I left it at Adam's default LR. I was using Adadelta earlier and switched to Adam because it adds a momentum term and is more stable.

This is the optimizer snippet. The whole point is to skip using a scheduler.

optimizer = Adam(parameters, weight_decay=opt.weight_decay)

What do you mean by "the whole point is to skip using a scheduler"?

Since it’s diverging in your case, I would still try lowering the learning rate below the default value (1e-3 in `torch.optim.Adam`) and see if that changes anything.

Edit: If that doesn’t help, then I think some code would be helpful for further debugging.

I agree with what AladdinPerzon said.

I would lower the lr to roughly 1e-5 to 1e-4 and see what happens.

Another thing I would do is disable weight decay, since it may make training harder.
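A rough sketch of that experiment: try a few learning rates with weight decay switched off and compare the loss after a fixed number of steps. The toy data, the linear model, the `make_optimizer` helper, and the 20-step budget are hypothetical placeholders for the real video models:

```python
import torch
from torch import nn

def make_optimizer(params, lr):
    # weight_decay=0.0 is Adam's default; it is written out to make the choice explicit.
    return torch.optim.Adam(params, lr=lr, weight_decay=0.0)

torch.manual_seed(0)
inputs = torch.randn(32, 16)                    # toy features (assumption)
targets = torch.randint(0, 2, (32, 5)).float()  # toy multi-hot labels (assumption)
criterion = nn.BCEWithLogitsLoss()

final_loss = {}
for lr in (1e-5, 1e-4, 1e-3):
    torch.manual_seed(0)  # same initial weights for every run
    model = nn.Linear(16, 5)
    optimizer = make_optimizer(model.parameters(), lr)
    for _ in range(20):
        optimizer.zero_grad()
        loss = criterion(model(inputs), targets)
        loss.backward()
        optimizer.step()
    final_loss[lr] = loss.item()  # loss at the last step for this learning rate
```

On the real models, the same loop would compare per-epoch loss curves rather than a single final value, changing one setting at a time so the effect of each is visible.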

So, following everyone's suggestions, I am thinking about adding a scheduler with a starting LR of 0.0001. However, I am skeptical about disabling weight decay. My dataset is highly skewed, so I actually want training to be difficult so that the model doesn't fit too quickly.
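One possible way to set that up is `ReduceLROnPlateau`, which lowers the rate when the logged epoch loss stops improving. This is only a sketch: the toy model, the `weight_decay` value, the `factor` and `patience` settings, and the hard-coded losses are all assumptions, with the loss list standing in for real per-epoch values:

```python
import torch
from torch import nn

model = nn.Linear(16, 5)  # toy stand-in for the real model
optimizer = torch.optim.Adam(
    model.parameters(),
    lr=1e-4,            # starting LR of 0.0001
    weight_decay=1e-5,  # assumed value; the thread uses opt.weight_decay
)
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(
    optimizer, mode="min", factor=0.1, patience=2
)

# Hypothetical per-epoch losses that stop improving after the second epoch.
epoch_losses = [0.70, 0.65, 0.66, 0.67, 0.68, 0.69]
lr_history = []
for epoch_loss in epoch_losses:
    # ... train for one epoch here, then hand the logged loss to the scheduler ...
    scheduler.step(epoch_loss)
    lr_history.append(optimizer.param_groups[0]["lr"])
```

With these settings the scheduler waits for more than `patience` epochs without improvement before multiplying the learning rate by `factor`, so weight decay can stay enabled while the rate is reduced only when the loss plateaus.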