Hi everyone,

I assigned a different `weight_decay` to each parameter, and both the training loss and the test loss became nan.
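For reference, here is a minimal sketch of the same per-parameter `weight_decay` setup built from `named_parameters()` instead of listing each tensor by hand. `TinyWNN` is a hypothetical stand-in that only mirrors the parameter names and shapes of `WNN`. The grouping (0.01 everywhere except `out.bias`) is meant to match the five groups in the script, not to fix anything.

```python
import torch
import torch.nn as nn

class TinyWNN(nn.Module):
    """Hypothetical stand-in with the same parameter layout as WNN."""
    def __init__(self):
        super().__init__()
        self.a1 = nn.Parameter(torch.randn(64))
        self.b1 = nn.Parameter(torch.randn(64))
        self.layer1 = nn.Linear(30, 64, bias=False)
        self.out = nn.Linear(64, 1)

model = TinyWNN()

# Names of parameters that should not be decayed (only the output bias here)
no_decay_names = {"out.bias"}
decay, no_decay = [], []
for name, p in model.named_parameters():
    (no_decay if name in no_decay_names else decay).append(p)

optimizer = torch.optim.Adam([
    {"params": decay, "weight_decay": 0.01},   # a1, b1, layer1.weight, out.weight
    {"params": no_decay, "weight_decay": 0.0}, # out.bias
])

for group in optimizer.param_groups:
    print(len(group["params"]), group["weight_decay"])
```

Grouping by name keeps the decay rule in one place, so adding or removing a parameter from decay is a one-line change.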

I printed `prediction_train`, `loss_train`, `running_loss_train`, `prediction_test`, `loss_test`, and `running_loss_test`; they were all nan.
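Because the values are already nan by the time they are printed, it may help to find the first step at which a parameter or its gradient stops being finite. Below is a minimal sketch using a hypothetical helper `nonfinite_params` (not a PyTorch API), demonstrated on a throwaway `nn.Linear`:

```python
from typing import List

import torch
import torch.nn as nn

def nonfinite_params(model: nn.Module) -> List[str]:
    """Hypothetical helper: names of parameters (or grads) containing NaN or inf."""
    bad = []
    for name, p in model.named_parameters():
        if not torch.isfinite(p).all():
            bad.append(name)
        elif p.grad is not None and not torch.isfinite(p.grad).all():
            bad.append(name + ".grad")
    return bad

probe = nn.Linear(3, 1)
report_clean = nonfinite_params(probe)  # nothing flagged yet

with torch.no_grad():
    probe.bias.fill_(float("nan"))      # simulate a parameter that went nan
report_bad = nonfinite_params(probe)

print(report_clean, report_bad)
```

In the training loop it could be called right after `loss_train.backward()` and again after `optimizer.step()`, stopping at the first non-empty result. `torch.autograd.set_detect_anomaly(True)` is another option for locating the backward operation that first produces nan.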

I have also checked the data with `numpy.any(numpy.isnan(dataset))`, and it returned False.
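`np.isnan` only flags NaN, so an `inf` in the CSV would pass that check. Since the same data trains fine with the default optimizer, the input is probably not the problem, but `np.isfinite` is the stricter test. Here it is on a small made-up array:

```python
import numpy as np

# Made-up example array: no NaN, but one inf
dataset = np.array([[1.0, 2.0], [np.inf, 4.0]])

has_nan = bool(np.any(np.isnan(dataset)))        # isnan does not flag inf
all_finite = bool(np.all(np.isfinite(dataset)))  # isfinite flags both NaN and inf
print(has_nan, all_finite)
```

The same check can be applied to `dataset_norm`, and `torch.isfinite` does the equivalent for the tensors.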

If I use `optimizer = torch.optim.Adam(wnn.parameters())` instead of assigning a different `weight_decay` to each parameter, there is no problem.
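With `torch.optim.Adam(wnn.parameters())`, every parameter gets Adam's default `weight_decay` of 0. The default learning rate is 1e-3 in both versions, since no `lr` is set in the grouped one either. One quick way to confirm that weight decay is the only setting that differs between the two runs is to inspect `optimizer.param_groups`. This sketch uses a throwaway `nn.Linear`:

```python
import torch
import torch.nn as nn

model = nn.Linear(30, 1)  # throwaway model, only used to inspect optimizer settings

default_opt = torch.optim.Adam(model.parameters())
grouped_opt = torch.optim.Adam([
    {"params": [model.weight], "weight_decay": 0.01},
    {"params": [model.bias]},  # no weight_decay given: falls back to the default
])

print(default_opt.param_groups[0]["weight_decay"])
print(default_opt.param_groups[0]["lr"])
print([g["weight_decay"] for g in grouped_opt.param_groups])
```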

Could you please tell me how to fix this? Here is the code (the Morlet activation function is my own implementation). Thank you :)

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.utils.data import Dataset, DataLoader

from torch.utils.data import TensorDataset

%matplotlib inline

data_temp=pd.read_csv('D:/Desktop/SEU/graduation/project/data/2017/processed4code/03.csv')

dataset=np.array(data_temp)

#np.any(np.isnan(dataset))

dataset_norm = (dataset - dataset.min()) / (dataset.max() - dataset.min())

data_df=pd.DataFrame(data=dataset_norm)

train_size=int(len(data_df)*0.75)

test_size=len(data_df)-train_size

train_set=pd.DataFrame(data=data_df.iloc[:train_size,:])

test_set=pd.DataFrame(data=data_df.iloc[train_size:,:])

train_set_x=pd.DataFrame(data=train_set.iloc[:,0:30])

train_set_y=pd.DataFrame(data=train_set.iloc[:,30])

test_set_x=pd.DataFrame(data=test_set.iloc[:,0:30])

test_set_y=pd.DataFrame(data=test_set.iloc[:,30])

train_set_x_array = np.array(train_set_x)

train_set_y_array=np.array(train_set_y)

test_set_x_array=np.array(test_set_x)

test_set_y_array=np.array(test_set_y)

train_set_x_tensor = torch.from_numpy(train_set_x_array).float()

train_set_y_tensor=torch.from_numpy(train_set_y_array).float()

test_set_x_tensor=torch.from_numpy(test_set_x_array).float()

test_set_y_tensor=torch.from_numpy(test_set_y_array).float()

train=TensorDataset(train_set_x_tensor,train_set_y_tensor)

test=TensorDataset(test_set_x_tensor,test_set_y_tensor)

trainloader = DataLoader(train, batch_size=10, shuffle=True)

testloader=DataLoader(test,batch_size=10,shuffle=False)

#My own activation function

class Morlet(nn.Module):

def __init__(self):

super(Morlet,self).__init__()

def forward(self,x):

x=(torch.cos(1.75*x))*(torch.exp(-0.5*x*x))

return x

morlet=Morlet()

class WNN(nn.Module):

def __init__(self):

super(WNN,self).__init__()

self.a1=torch.nn.Parameter(torch.randn(64,requires_grad=True))

self.b1=torch.nn.Parameter(torch.randn(64,requires_grad=True))

self.layer1=nn.Linear(30,64,bias=False)

self.out=nn.Linear(64,1)

def forward(self,x):

x=self.layer1(x)

x=(x-self.b1)/self.a1

x=morlet(x)

out=self.out(x)

return out

wnn=WNN()

optimizer = torch.optim.Adam([{'params': wnn.layer1.weight, 'weight_decay':0.01},

{'params': wnn.out.weight, 'weight_decay':0.01},

{'params': wnn.out.bias, 'weight_decay':0},

{'params': wnn.a1, 'weight_decay':0.01},

{'params': wnn.b1, 'weight_decay':0.01}])

criterion = nn.MSELoss()

for epoch in range(10):

prediction_test_list=[]

running_loss_train=0

running_loss_test=0

for i,(x1,y1) in enumerate(trainloader):

prediction_train=wnn(x1)

#print(prediction_train)

loss_train=criterion(prediction_train,y1)

#print(loss_train)

optimizer.zero_grad()

loss_train.backward()

optimizer.step()

running_loss_train+=loss_train.item()

#print(running_loss_train)

tr_loss=running_loss_train/train_set_y_array.shape[0]

for i,(x2,y2) in enumerate(testloader):

prediction_test=wnn(x2)

#print(prediction_test)

loss_test=criterion(prediction_test,y2)

#print(loss_test)

running_loss_test+=loss_test.item()

print(running_loss_test)

prediction_test_list.append(prediction_test.detach().cpu())

ts_loss=running_loss_test/test_set_y_array.shape[0]

print('Epoch {} Train Loss:{}, Test Loss:{}'.format(epoch+1,tr_loss,ts_loss))

test_set_y_array_plot=test_set_y_array*(dataset.max()-dataset.min())+dataset.min()

prediction_test_np=torch.cat(prediction_test_list).numpy()

prediction_test_plot=prediction_test_np*(dataset.max()-dataset.min())+dataset.min()

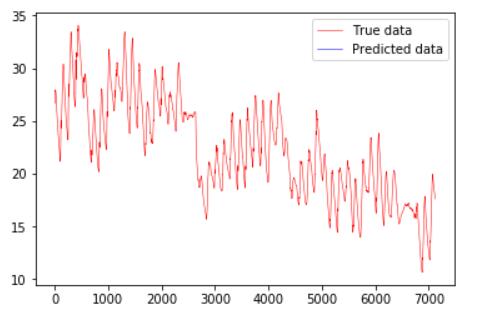

plt.plot(test_set_y_array_plot.flatten(),'r-',linewidth=0.5,label='True data')

plt.plot(prediction_test_plot,'b-',linewidth=0.5,label='Predicted data')

plt.legend()

plt.show()

print('Finish training')
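Written out, the model and objective in the script are as follows. This is read directly off the code: $\mathbf{w}_j$ is row $j$ of `layer1.weight`, $a_j$ and $b_j$ are entries of `a1` and `b1`, and $v_j$ and $c$ come from `out.weight` and `out.bias`. PyTorch's `Adam` applies `weight_decay` $\lambda$ by adding $\lambda\theta$ to each parameter's gradient, which matches the gradient of an extra $\tfrac{\lambda}{2}\lVert\theta\rVert^2$ term. Here $\lambda = 0.01$ for every group except `out.bias`, where it is 0. The MSE mean runs over the $B$ samples of a batch ($B = 10$, except possibly the last batch). The decay term is implicit: it never appears in `loss_train`, so the printed losses are plain MSE.

$$
\psi(t) = \cos(1.75\,t)\,e^{-t^{2}/2},
\qquad
\hat{y} = \sum_{j=1}^{64} v_j\,\psi\!\left(\frac{\mathbf{w}_j^{\top}\mathbf{x} - b_j}{a_j}\right) + c
$$

$$
\mathcal{L} = \frac{1}{B}\sum_{n=1}^{B}\left(\hat{y}_n - y_n\right)^{2}
+ \frac{\lambda}{2}\left(\lVert W\rVert_F^{2} + \lVert \mathbf{v}\rVert^{2} + \lVert \mathbf{a}\rVert^{2} + \lVert \mathbf{b}\rVert^{2}\right)
$$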

The output was:

```
Epoch 1 Train Loss:nan, Test Loss:nan
```

And there was no predicted data on the plot.
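This next point is a guess, not a confirmed diagnosis, but it may be worth ruling out. `a1` is a divisor in `x=(x-self.b1)/self.a1`, and it starts from `torch.randn`, so some entries may already be near zero. A `weight_decay` of 0.01 on `a1` pulls every entry toward zero. If an entry reaches exactly zero, the division gives inf, `torch.cos(inf)` is nan, and the prediction becomes nan. The sketch below reproduces only that last step with made-up numbers (`h` stands in for `layer1(x)`). It does not show that this is what happens in the run above. If it is the cause, two options are to drop `weight_decay` for `a1`, or to learn a hypothetical `log_a1` and use `a1 = exp(log_a1)`. With the second option, decay on `log_a1` pulls the scale toward 1 rather than 0.

```python
import torch

def morlet(t: torch.Tensor) -> torch.Tensor:
    # Same formula as the Morlet module in the post
    return torch.cos(1.75 * t) * torch.exp(-0.5 * t * t)

h = torch.tensor([0.3, 0.3, 0.3])    # made-up stand-in for layer1(x)
b1 = torch.tensor([0.1, 0.1, 0.1])
a1 = torch.tensor([1.0, 1e-3, 0.0])  # third scale has collapsed to exactly zero

out = morlet((h - b1) / a1)
print(out)  # first two entries finite; third is nan because cos(inf) is nan

# Hypothetical alternative: learn log_a1 and divide by exp(log_a1), which stays
# positive as long as log_a1 stays in a reasonable range
log_a1 = torch.tensor([0.0, -5.0, -20.0])
out_pos = morlet((h - b1) / torch.exp(log_a1))
print(torch.isfinite(out_pos).all())
```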