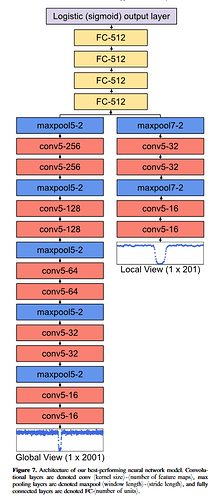

Hello, I’m trying to implement only the left (long) branch of the CNN in this paper: https://iopscience.iop.org/article/10.3847/1538-3881/aa9e09/pdf Image below for quick reference:

It is a CNN to detect transiting exoplanets from lightcurves, lightcurves are 1D tensors whose values oscillate more or less around 1 and at some point decrease and then increase back to around one (this represents an exoplanet passing in front of the star decreasing its brightness).

My model looks like this:

import torch

import torch.nn as nn

import torch.nn.functional as F

class Net(nn.Module):

def __init__(self):

super().__init__()

self.conv1 = nn.Conv1d(1, 16, 5)

self.conv2 = nn.Conv1d(16, 16, 5)

self.conv3 = nn.Conv1d(16, 32, 5)

self.conv4 = nn.Conv1d(32, 32, 5)

self.conv5 = nn.Conv1d(32, 64, 5)

self.conv6 = nn.Conv1d(64, 64, 5)

self.conv7 = nn.Conv1d(64, 128, 5)

self.conv8 = nn.Conv1d(128, 128, 5)

self.conv9 = nn.Conv1d(128, 256, 5)

self.conv10 = nn.Conv1d(256, 256, 5)

self.pool = nn.MaxPool1d(5, 2)

self.fc1 = nn.Linear(20736, 512)

self.fc2 = nn.Linear(512, 512)

self.fc3 = nn.Linear(512, 2)

self.sigmoid = nn.Sigmoid()

def forward(self, x):

x = self.pool(F.relu(self.conv2(F.relu(self.conv1(x)))))

x = self.pool(F.relu(self.conv4(F.relu(self.conv3(x)))))

x = self.pool(F.relu(self.conv6(F.relu(self.conv5(x)))))

x = self.pool(F.relu(self.conv8(F.relu(self.conv7(x)))))

x = self.pool(F.relu(self.conv10(F.relu(self.conv9(x)))))

x = torch.flatten(x, 1)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = F.relu(self.fc2(x))

x = F.relu(self.fc3(x))

return x

net = Net()

Training loop:

from torch import autograd

for epoch in range(3): # loop over the dataset multiple times

running_loss = 0.0

for i, data in enumerate(trainloader, 0):

# get the inputs; data is a list of [inputs, labels]

with autograd.detect_anomaly():

inputs, labels = data

inputs = inputs.unsqueeze(1)

# zero the parameter gradients

optimizer.zero_grad()

# forward + backward + optimize

outputs = net(inputs.float())

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

# print statistics

running_loss += loss.item()

print(running_loss)

if i % 2000 == 1999: # print every 2000 mini-batches

print(f'[{epoch + 1}, {i + 1:5d}] loss: {running_loss / 2000:.3f}')

running_loss = 0.0

print('Finished Training')

I’m using Cross-Entropy loss and Adam with usual hyperparameters values (lr=0.00001, betas=(0.9, 0.999), eps=1e-08).

No matter what I try losses are almost the same in each epoch, getting worse with each batch, output example:

0.692689061164856

1.3858782649040222

2.0809112191200256

2.775941848754883

0.6942305564880371

1.388343870639801

2.0808927416801453

2.7744598984718323

0.693331778049469

1.3866432905197144

2.0802061557769775

2.773610234260559

Finished Training

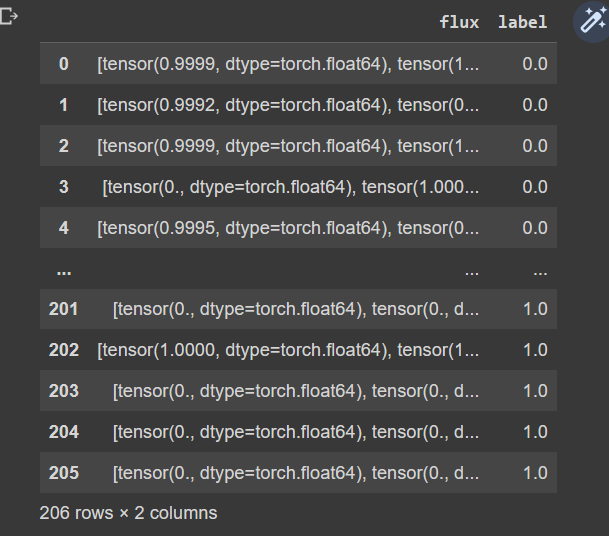

Things I’ve tried: reduce lr and play around with hyperparameters, all nans removed from inputs, inputs normalized with nn.functional. normalize, input tensors padded with zeros when sizes differ (this probably would cause accuracy issues since I’m introducing a fake signal but I think it would not explain the static loss). I have not tried yet to increase the number of samples (using 206, have 3k) since I would have to extract and preprocess them and I’d say number of samples is not the main issue here.

Thank you for any help for making the model work or any other advice.