Dear community,

I am trying to fine-tune T5 for question answering on the SROIE dataset (text lines from receipts), but the loss is not improving. Can you offer a suggestion? My input is OCR-extracted text lines from receipts, each with bounding box coordinates and free text. My questions ask the model to extract the company where the purchase was made and the total amount spent. The label (e.g. Total) and the value ($10) are not necessarily on the same text line, nor necessarily adjacent in the concatenated text. I believe the model should be able to use the bounding box coordinates to interpret relationships between text lines. Is this a correct assumption?

My prompt is like this:

‘Context: 68,266,689,266,689,303,68,303,PANDAH INDAH PULAU KETAM RESTAURANT\n104,310,667,310,667,347,104,347,M4-A-8, JALAN PANDAH INDAH … \n\n\nQuestion: What is the total amount spent?\n\nAnswer:’

tokens_prompt_total = self.tokenizer(prompt_total, padding="max_length", max_length=3000)
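To make the prompt layout concrete, here is a minimal sketch of how a prompt in this format could be assembled from OCR lines. The helper name `build_prompt` and the `(coordinates, text)` tuple layout are assumptions for illustration, and only the first receipt line from the example above is used.

```python
from typing import List, Sequence, Tuple

# Hypothetical representation of one OCR text line: eight corner
# coordinates (x1, y1, ..., x4, y4) followed by the recognized text.
OcrLine = Tuple[Sequence[int], str]


def build_prompt(lines: List[OcrLine], question: str) -> str:
    """Concatenate OCR lines into a 'Context / Question / Answer' prompt."""
    context_rows = []
    for coords, text in lines:
        # Each row is "x1,y1,...,x4,y4,TEXT", matching the prompt shown above.
        context_rows.append(",".join(str(c) for c in coords) + "," + text)
    context = "\n".join(context_rows)
    return f"Context: {context}\n\n\nQuestion: {question}\n\nAnswer:"


example_lines = [
    ((68, 266, 689, 266, 689, 303, 68, 303), "PANDAH INDAH PULAU KETAM RESTAURANT"),
]
prompt_total = build_prompt(example_lines, "What is the total amount spent?")
print(prompt_total)
```

Every digit and comma in the coordinates becomes part of the tokenized input, so the prompt length grows quickly with the number of text lines.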

My label is like this:

‘PANDAH INDAH PULAU KETAM RESTAURANT’

tokens_labels_company = self.tokenizer(labels_dict['company'], padding="max_length", max_length=25).input_ids

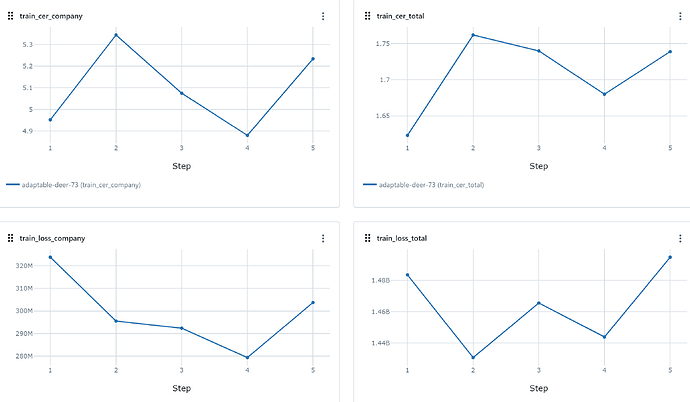

My loss is calculated like this, but its value is very high and doesn’t decrease.

label_tokens_for_loss_calc = torch.stack(labels_tokens, dim=1).type(torch.FloatTensor).to('cuda')

output_tokens_for_loss_calc = outputs.type(torch.FloatTensor).to('cuda')

output_tokens_for_loss_calc = F.pad(output_tokens_for_loss_calc, (1, label_tokens_for_loss_calc.shape[1] - output_tokens_for_loss_calc.shape[1] - 1))
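For comparison, here is a minimal sketch of the token-level cross-entropy that sequence-to-sequence models such as T5 are typically trained with. It is computed from logits and integer label ids rather than from token ids cast to floats. The random `logits` tensor is only a stand-in for a real forward pass, the sizes are illustrative, and none of it is taken from the training code above. As far as I know, Hugging Face's `T5ForConditionalGeneration` computes a loss of this kind internally when `labels` is passed to the forward call, ignoring positions set to `-100`.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Illustrative sizes only (not the real T5 vocabulary or the lengths above).
batch_size, target_len, vocab_size = 2, 5, 11
pad_token_id = 0  # T5's pad token id is 0

# Stand-in for the decoder logits a seq2seq model produces in a forward pass.
logits = torch.randn(batch_size, target_len, vocab_size, requires_grad=True)

# Integer label ids, padded to a fixed length.
labels = torch.tensor([[4, 7, 1, 0, 0],
                       [3, 1, 0, 0, 0]])

# Mask padding so that it does not contribute to the loss.
labels = labels.masked_fill(labels == pad_token_id, -100)

# Cross-entropy over the vocabulary at each target position.
loss = F.cross_entropy(logits.view(-1, vocab_size), labels.view(-1), ignore_index=-100)
loss.backward()

print(loss.item(), logits.grad.shape)
```

Because the loss is a differentiable function of the logits, gradients reach the model parameters. A loss built from generated token ids would not provide that, since picking a token id is a discrete step.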

So far, I have been fine-tuning the whole model without freezing any layers, since bounding box comprehension is somewhat unique to this task, but I could try freezing or using another model. I would greatly appreciate your suggestions!