Yeah, it doesn’t look that good. You could try to train more layers or lower your learning rate from the beginning to something like 1e-4 or even 1e-5.

OK, so, this is a problem.

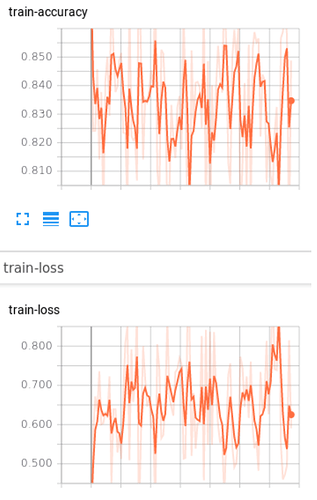

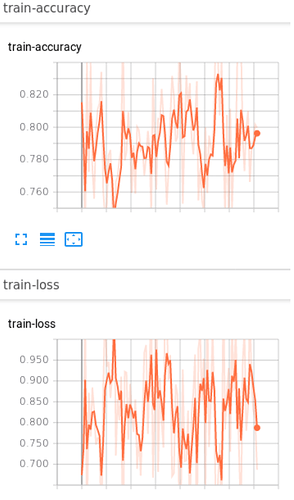

These are my results using a 1e-5 learning rate, finetuning only the last layer:

These are my results with 1e-5 learning rate and finetuning the last three layers:

Neither run overfits! Something is not working in my model.

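Try adding `clip_grad_norm_()`. Update your training step like this:

    from torch.nn.utils import clip_grad_norm_

    loss.backward()
    # Rescale the gradients so that their total norm is at most 10
    clip_grad_norm_(model.parameters(), max_norm=10)
    optimizer.step()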
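
In case it helps to see what the clipping step does, here is a rough plain-Python sketch (no PyTorch needed). It reflects my understanding of norm clipping: compute one L2 norm over all gradients together, then scale everything down only if that norm exceeds `max_norm`. The helper name `clip_by_total_norm`, the `eps` default, and the toy gradient values are made up for illustration and are not part of the PyTorch API.

```python
import math

def clip_by_total_norm(grads, max_norm, eps=1e-6):
    """Rescale a list of gradient vectors so their combined L2 norm is at most max_norm."""
    # One norm over all gradient entries, as if they were a single long vector
    total_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    # Never scale up: gradients already below the threshold are left unchanged
    scale = min(1.0, max_norm / (total_norm + eps))
    clipped = [[g * scale for g in vec] for vec in grads]
    return clipped, total_norm

# Two hypothetical parameter gradients whose combined norm is well above 10
grads = [[30.0, 0.0], [0.0, 40.0]]
clipped, norm_before = clip_by_total_norm(grads, max_norm=10)
norm_after = math.sqrt(sum(g * g for vec in clipped for g in vec))
print(norm_before, norm_after)
```

The direction of the update is preserved and only its length is capped, so with a very small learning rate the clipping will rarely be the deciding factor.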

I would also suggest you refer to my post “Efficient train/dev sets evaluation”. I think it might help you.

I assume your batch size is also 10, so that each iteration is equal to one epoch?

Are you still using the SegNet implementation from the first post or did you change your code in the meantime?

Could you provide the current code with weights, so that I could run it on my machine?

Also, you could try @roaffix’s suggestion. I think that with a really low learning rate this shouldn’t be an issue, but it’s definitely worth a try.

I’m still using that SegNet implementation, and I just fixed the problem.

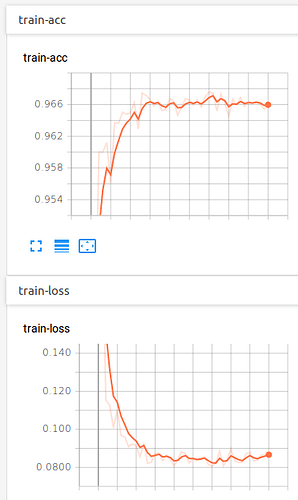

I changed the learning rate for every layer: the previous results were obtained with all layers frozen except the last one. Now every layer is learning slowly, and the last one learns a bit faster.

This is how I did it:

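    import torch

    # Finetuning: collect the ids of the parameters of the layer to finetune
    ftune_params = list(map(id, model.conv11d.parameters()))
    # All other parameters fall back to the optimizer's default learning rate
    base_params = filter(lambda p: id(p) not in ftune_params,
                         model.parameters())
    # The parameters of model.conv11d get their own, higher learning rate
    new_params = filter(lambda p: id(p) in ftune_params,
                        model.parameters())

    # Optimizer: lr=0.0001 for the base layers, lr=0.001 for model.conv11d
    optimizer = torch.optim.SGD([{'params': base_params},
                                 {'params': new_params, 'lr': 0.001}
                                 ], lr=0.0001, momentum=0.9)
    # Multiply every group's learning rate by 0.1 every 15 scheduler steps
    exp_lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=15, gamma=0.1)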
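
To make the effect of the scheduler on the two parameter groups concrete, here is a small plain-Python sketch of the step decay rule as I understand it: each group starts from its own learning rate and is multiplied by `gamma` once every `step_size` scheduler steps. The helper `step_lr` is hypothetical (it is not a PyTorch function), and the sketch assumes the scheduler is stepped once per epoch.

```python
def step_lr(base_lr, epoch, step_size=15, gamma=0.1):
    """Learning rate after `epoch` scheduler steps under a step decay."""
    # Integer division counts how many full decay periods have elapsed
    return base_lr * gamma ** (epoch // step_size)

# Base layers start at 1e-4, the finetuned last layer starts at 1e-3
for epoch in (0, 14, 15, 30):
    print(epoch, step_lr(1e-4, epoch), step_lr(1e-3, epoch))
```

Under these assumptions the last layer stays at ten times the learning rate of the other layers throughout training, since both groups decay by the same factor at the same time.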

These are the results:

I will also try @roaffix’s suggestion!

This is actually pretty simple! I just followed this article, really straightforward.

Let me know if you need some more help.

I am glad that you found my article “PyTorch Hack – Use TensorBoard for plotting Training Accuracy and Loss” helpful. Thanks for visiting my blog and citing it. Happy coding!