I’m using torchvision 0.3.

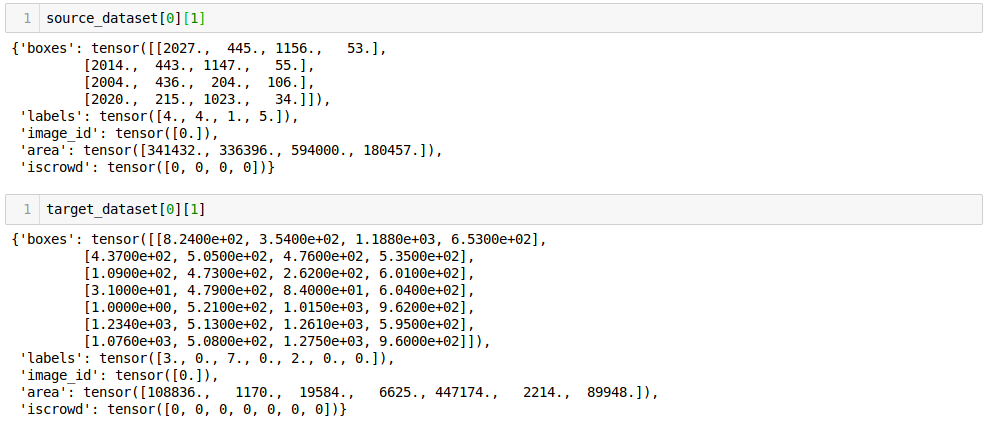

Here’s an example from both datasets I’m using:

Here’s my model:

    import torchvision
    from torchvision.models.detection.faster_rcnn import FastRCNNPredictor

    def get_model(num_classes):
        # Faster R-CNN with a ResNet-50 FPN backbone, pre-trained on COCO
        model = torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=True)
        in_features = model.roi_heads.box_predictor.cls_score.in_features
        # replace the pre-trained head with a new one
        model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)
        return model.cuda()

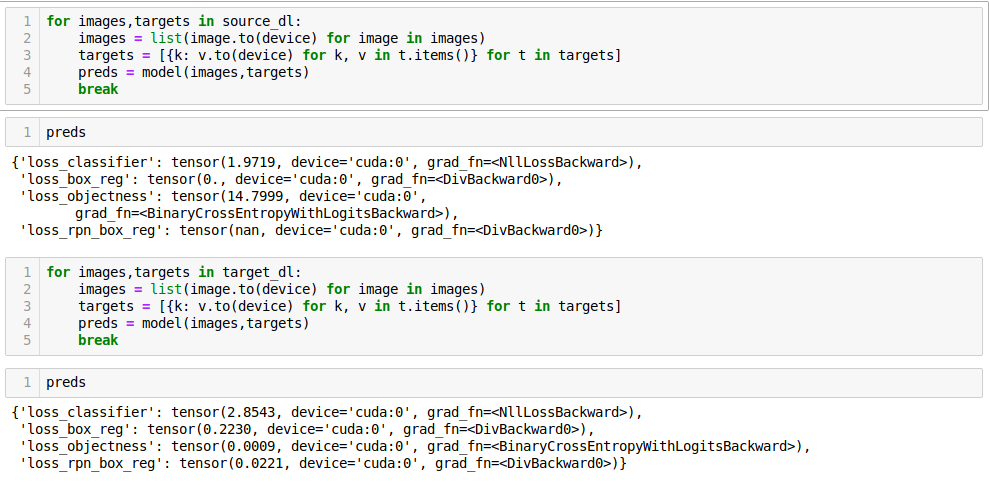

I’ve made both dataset classes identical, following the torchvision docs. I’ve also seen the same behaviour after some epochs when I train on high-res images from target_dataset. What can be done to deal with this? What could be the reason for getting ‘nan’ for the first dataset in the very first run?

I’ve tried lowering the learning rate and normalizing the images, but the issue persists.
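
One check that may help narrow this down is whether any ground-truth box in either dataset is degenerate (zero or negative width/height, or a non-finite coordinate). This is only a possible cause, not a confirmed diagnosis. The sketch below assumes boxes are stored as `[x1, y1, x2, y2]`, as in the torchvision detection docs; `find_degenerate_boxes` and `example_boxes` are hypothetical names used for illustration.

```python
import math

def find_degenerate_boxes(boxes):
    """Return indices of boxes that are not valid [x1, y1, x2, y2] rectangles."""
    bad = []
    for i, (x1, y1, x2, y2) in enumerate(boxes):
        finite = all(math.isfinite(v) for v in (x1, y1, x2, y2))
        # A usable box needs finite coordinates and positive width and height.
        if not finite or x2 <= x1 or y2 <= y1:
            bad.append(i)
    return bad

# Toy data for illustration only (not taken from either dataset).
example_boxes = [
    [10.0, 20.0, 50.0, 80.0],         # valid
    [30.0, 30.0, 30.0, 60.0],         # zero width
    [5.0, 40.0, 25.0, 35.0],          # y2 < y1
    [0.0, 0.0, float("nan"), 10.0],   # non-finite coordinate
]
print(find_degenerate_boxes(example_boxes))  # prints [1, 2, 3]
```

With a real dataset, the same function could be called on `target["boxes"].tolist()` for every sample, after any resizing or augmentation has been applied, since those steps can also collapse small boxes.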