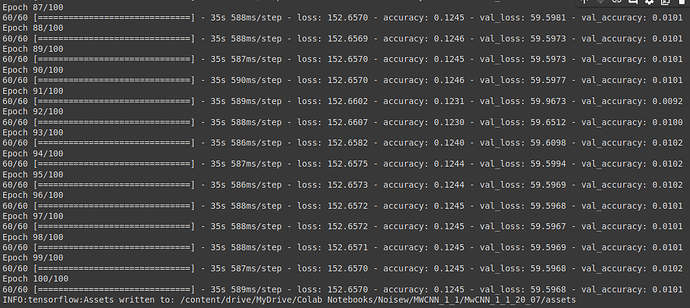

I’m using a U-Net architecture. During training, the loss value stays the same.

Dataset: 4000 samples from 160 augmented images

I changed the learning rate many times, from 0.1 to 0.000001, but the result is the same.

Try to overfit a small dataset (e.g. just 10 samples) by playing around with some hyperparameters.

Once this is done, try to scale up the use case again.
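For reference, a minimal sketch of that kind of overfitting check, assuming PyTorch. The tiny conv net, the random data, the loss, and the hyperparameters are all placeholders chosen for illustration; they are not the U-Net or dataset from the question.

```python
import torch
from torch import nn

torch.manual_seed(0)  # make the run repeatable

# Placeholder data: 10 single-channel 16x16 images scaled to [0, 1],
# with a binary mask per image (pixel > 0.5) as the segmentation target.
inputs = torch.rand(10, 1, 16, 16)
targets = (inputs > 0.5).float()

# Placeholder model: a tiny conv net standing in for the real U-Net.
model = nn.Sequential(
    nn.Conv2d(1, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 1, kernel_size=3, padding=1),
)

criterion = nn.BCEWithLogitsLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)

# Train repeatedly on the same 10 samples and record the loss at each step.
losses = []
for step in range(200):
    optimizer.zero_grad()
    loss = criterion(model(inputs), targets)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

If the loss does not drop even on a handful of samples, the cause is more likely in the data or the pipeline than in the hyperparameters.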


Hi, thanks for your suggestion. I tried several combinations of parameters (optimizer, learning rate, patch size, dropout, and batch normalization) but still got the same results. The problem was eventually solved by normalizing the image data.

So did you go through the entire dataset to compute the values for normalization? I guess the normalization you are talking about is done using torchvision transforms.

I just normalize the whole dataset. I have 8-bit images, so

newData = oldData / 255
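A minimal sketch of the same scaling, assuming the images are held in a NumPy `uint8` array. The helper name `normalize_uint8` and the sample values are hypothetical, used only for illustration.

```python
import numpy as np


def normalize_uint8(images):
    """Scale 8-bit image data from [0, 255] to [0.0, 1.0] as float32."""
    # Convert to float first so the division is done in floating point.
    return np.asarray(images, dtype=np.float32) / 255.0


# Hypothetical 8-bit pixel values covering the full range.
old_data = np.array([[0, 128, 255]], dtype=np.uint8)
new_data = normalize_uint8(old_data)
print(new_data.dtype, new_data.min(), new_data.max())
```

Dividing by the fixed constant 255 needs no statistics from the dataset, which is why no pass over the data is required.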

OK, thanks for the answer.