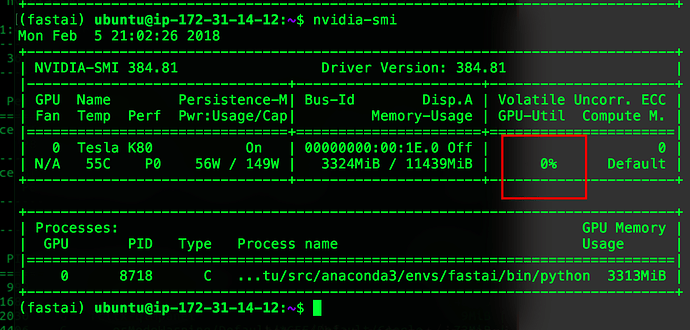

Is it normal for the GPU load to be only 20% with CUDA? I have also set `torch.backends.cudnn.benchmark = True`, but it gave no performance increase.

My model is defined as follows:

    class LSTM(torch.nn.Module):

        def __init__(self, N, D_in, H, H_LSTM, D_out):
            super(LSTM, self).__init__()
            self.H = H
            self.in2lstm = torch.nn.Linear(D_in, H)
            self.lstm = torch.nn.LSTM(H, H_LSTM, dropout=0.5)
            self.lstm2out = torch.nn.Linear(H_LSTM, D_out)

        def forward(self, x, hidden, t, seq_len):
            y_pred = self.in2lstm(x[t:t+seq_len].view(seq_len, -1))
            y_pred = torch.nn.ELU()(y_pred)
            y_pred, hidden = self.lstm(y_pred.view(seq_len, 1, self.H), hidden)
            y_pred = self.lstm2out(y_pred.view(seq_len, -1))
            return y_pred, hidden

    model = LSTM(N, D_in, H, H_LSTM, D_out).cuda()
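
A minimal, self-contained sketch of how this model might be called on one chunk is shown here to make the tensor shapes explicit. The sizes, the random input, the zero initial hidden state, and the reading of `N` as the number of time steps in `x` are all assumptions for illustration, not values from the actual setup. It falls back to the CPU when CUDA is unavailable.

```python
import torch


class LSTM(torch.nn.Module):

    def __init__(self, N, D_in, H, H_LSTM, D_out):
        super(LSTM, self).__init__()
        self.H = H
        self.in2lstm = torch.nn.Linear(D_in, H)
        # dropout on a single-layer LSTM only triggers a warning; kept to match the post
        self.lstm = torch.nn.LSTM(H, H_LSTM, dropout=0.5)
        self.lstm2out = torch.nn.Linear(H_LSTM, D_out)

    def forward(self, x, hidden, t, seq_len):
        y_pred = self.in2lstm(x[t:t + seq_len].view(seq_len, -1))
        y_pred = torch.nn.ELU()(y_pred)
        # The LSTM receives input of shape (seq_len, batch=1, H)
        y_pred, hidden = self.lstm(y_pred.view(seq_len, 1, self.H), hidden)
        y_pred = self.lstm2out(y_pred.view(seq_len, -1))
        return y_pred, hidden


# Hypothetical sizes, chosen only so the example runs quickly
N, D_in, H, H_LSTM, D_out = 64, 8, 16, 32, 4
seq_len = 10

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = LSTM(N, D_in, H, H_LSTM, D_out).to(device)

# Assumed input layout: N time steps, D_in features per step
x = torch.randn(N, D_in, device=device)

# Assumed initial state: zeros of shape (num_layers=1, batch=1, H_LSTM)
hidden = (
    torch.zeros(1, 1, H_LSTM, device=device),
    torch.zeros(1, 1, H_LSTM, device=device),
)

with torch.no_grad():
    y_pred, hidden = model(x, hidden, t=0, seq_len=seq_len)

out_shape = tuple(y_pred.shape)   # (seq_len, D_out)
h_shape = tuple(hidden[0].shape)  # (1, 1, H_LSTM)
print(out_shape, h_shape)
```

With these shapes, each forward call processes `seq_len` time steps of a single sequence, since the `view(seq_len, 1, self.H)` in `forward` fixes the batch dimension at 1.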