Hello team, I am using LibTorch with CUDA support. The slowest step in my program is transferring a tensor from CPU memory to the GPU; it takes about 2.60 s.

tensor_image = tensor_image.to(torch::kCUDA);

How can I speed up this operation, or check and profile it?

clock_t tROIset = clock();

cv::Rect myROI(30, 10, 400, 400);

printf("ROI set.\n Time taken: %.2fs\n", (double)(clock() - tROIset)/CLOCKS_PER_SEC);

clock_t tLoadOpenCV = clock();

cv::Mat img = cv::imread("test_data/image.png"); // 600x900

printf("loader of Tensor.\n Time taken: %.2fs\n", (double)(clock() - tLoadOpenCV)/CLOCKS_PER_SEC);

clock_t tTester = clock();

tensor_image = tensor_image.to(torch::kCUDA);

printf("CPU-GPU transfer.\n Time taken: %.2fs\n", (double)(clock() - tTester)/CLOCKS_PER_SEC);

Result of execution:

ROI set in Tensor . Time taken: 0.00s

loader of Tensor. Time taken: 0.01s

CPU-GPU Tensor transfer. Time taken: 2.60s
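One way to check where the 2.60 s goes is to time the first transfer separately from repeated ones, using a wall-clock timer. The first CUDA call in a process often also pays for one-time CUDA initialization, and `clock()` is specified to report processor time rather than elapsed time, so both can distort a single measurement. The sketch below is only an illustration under those assumptions: `time_seconds`, `TransferTiming`, and `time_first_and_repeat` are hypothetical helper names, not part of LibTorch, and the block uses only the C++ standard library.

```cpp
#include <cassert>
#include <chrono>
#include <utility>

// Wall-clock duration of one call to fn, in seconds.
// steady_clock measures elapsed time; clock() reports processor time.
template <typename Fn>
double time_seconds(Fn&& fn) {
    const auto start = std::chrono::steady_clock::now();
    std::forward<Fn>(fn)();
    const auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double>(stop - start).count();
}

// Hypothetical result type: the first call is reported on its own because
// it may include one-time setup cost that later calls do not pay.
struct TransferTiming {
    double first_call;    // seconds for the very first call
    double steady_state;  // mean seconds over the repeated calls
};

// Calls fn once, then `repeats` more times, and reports both timings.
template <typename Fn>
TransferTiming time_first_and_repeat(Fn&& fn, int repeats) {
    assert(repeats >= 0);
    TransferTiming t{};
    t.first_call = time_seconds(fn);
    double total = 0.0;
    for (int i = 0; i < repeats; ++i) {
        total += time_seconds(fn);
    }
    t.steady_state = repeats > 0 ? total / repeats : 0.0;
    return t;
}
```

In the program above, `fn` would be a lambda that runs `tensor_image.to(torch::kCUDA)` and then waits for the GPU to finish (for example with `torch::cuda::synchronize()`, assuming the LibTorch build provides it). If `first_call` is large and `steady_state` is small, the cost is probably CUDA start-up rather than the copy itself.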

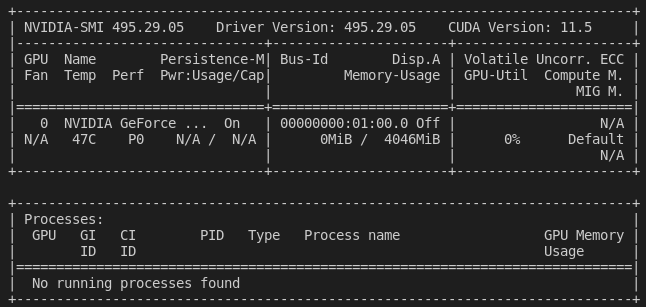

PyTorch version: 1.10.0

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2019 NVIDIA Corporation

Built on Wed_Oct_23_19:24:38_PDT_2019

Cuda compilation tools, release 10.2, V10.2.89