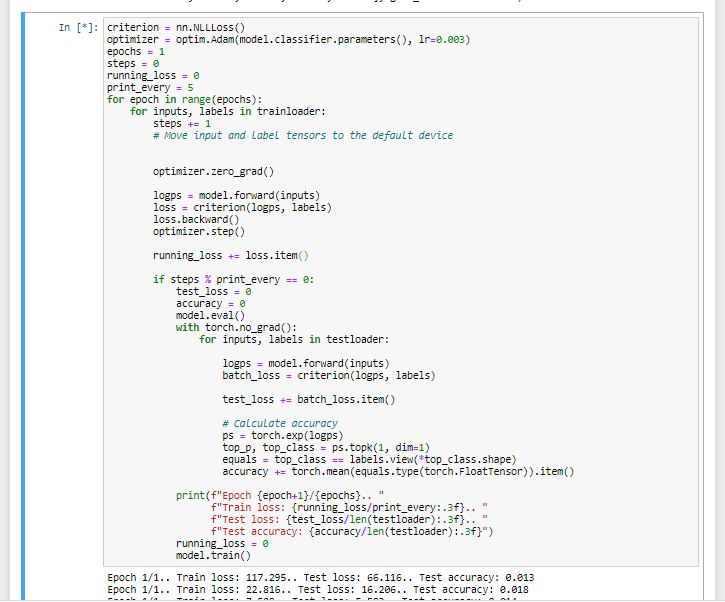

This is a multi-class classification of 224x224 images. I am using VGG16 and only training the classifier.

While the losses decrease, the test accuracy stays almost constant at low values.

I think there are a few mistakes in your code. Where’s the validation? Is that what you call test?

Try to emulate this:

https://pytorch.org/tutorials/beginner/pytorch_with_examples.html

The loss seems to be going down nicely but there seems to be something wrong with the accuracy. Could you check that training accuracy actually increases first?
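One way to run that check is to count correct predictions inside the training loop itself, next to the loss. This is only a minimal sketch under stated assumptions: the data is synthetic, and `nn.Linear(20, 3)` is a hypothetical stand-in for your VGG16 classifier head, so swap in your own model and `DataLoader`.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in data: 256 samples, 20 features, 3 learnable classes.
x = torch.randn(256, 20)
y = (x @ torch.randn(20, 3)).argmax(dim=1)

model = nn.Linear(20, 3)  # placeholder for the real classifier
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

history = []  # (train_loss, train_acc) per epoch
for epoch in range(5):
    model.train()
    running_loss, num_correct, num_samples = 0.0, 0, 0
    for i in range(0, len(x), 32):  # simple mini-batches of 32
        xb, yb = x[i:i + 32], y[i:i + 32]

        scores = model(xb)
        loss = criterion(scores, yb)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # Accumulate loss and accuracy over the same batches.
        running_loss += loss.item() * xb.size(0)
        num_correct += (scores.argmax(dim=1) == yb).sum().item()
        num_samples += xb.size(0)

    train_loss = running_loss / num_samples
    train_acc = num_correct / num_samples
    history.append((train_loss, train_acc))
    print(f"epoch {epoch}: loss {train_loss:.4f}, train acc {train_acc * 100:.2f}%")
```

If the training accuracy also stays flat while the loss drops, the problem is probably in how accuracy is computed (labels, `argmax` dimension, sample counting) rather than in generalization.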

Also, just to structure the code a little more cleanly, you could use something like this (I have a bit of a hard time reading your code right now):

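    import torch

    # Assumption: the original snippet relies on a global `device`; define it here.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

    def check_accuracy(loader, model):
        num_correct = 0
        num_samples = 0
        model.eval()  # evaluation mode: disables dropout, uses running batch-norm stats

        with torch.no_grad():  # no gradients needed for evaluation
            for x, y in loader:
                x = x.to(device=device)
                y = y.to(device=device)

                scores = model(x)
                _, predictions = scores.max(1)  # index of the highest score per sample
                num_correct += (predictions == y).sum().item()
                num_samples += predictions.size(0)

        print(f'Got {num_correct} / {num_samples} with accuracy {float(num_correct)/float(num_samples)*100:.2f}')
        model.train()  # switch back to training mode for the next epoch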

Edit: Included model.train() at end of code.
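
To see why the `model.eval()` / `model.train()` pair matters here, a small sketch with a standalone dropout layer may help. VGG16's classifier contains dropout, so forgetting either call changes what the network computes. The tensor of ones is just an illustrative input, not anything from your code.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

drop = nn.Dropout(p=0.5)
x = torch.ones(1, 1000)

# Training mode: elements are randomly zeroed and the survivors
# are scaled by 1 / (1 - p), i.e. 2.0 here.
drop.train()
train_out = drop(x)

# Evaluation mode: dropout is a no-op, the input passes through unchanged.
drop.eval()
eval_out = drop(x)

print("train mode, fraction zeroed:", (train_out == 0).float().mean().item())
print("eval mode, output equals input:", torch.equal(eval_out, x))
```

So an accuracy check run in training mode gives noisy predictions, and training that continues in eval mode silently loses dropout.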